|

MyCaffe

1.12.2.41

Deep learning software for Windows C# programmers.

|

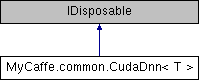

The CudaDnn object is the main interface to the Low-Level Cuda C++ DLL. More...
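As a rough illustration of how the members listed on this page fit together, the sketch below creates a `CudaDnn<float>` on device 0, copies a few values to the GPU, reads them back, and releases everything. It uses only signatures shown in the member table. The `MyCaffe.common` namespace, the choice of device 0, and the sample values are assumptions, and running it requires the MyCaffe assemblies and a CUDA-capable GPU.

```csharp
using System;
using System.Collections.Generic;
using MyCaffe.common;   // assumption: namespace that exposes CudaDnn<T>

class CudaDnnSketch
{
    static void Main()
    {
        // Device 0 is an assumption; the remaining constructor arguments keep their defaults.
        CudaDnn<float> cuda = new CudaDnn<float>(0);
        long? hMem = null;

        try
        {
            // Query basic device information.
            Console.WriteLine("Devices: " + cuda.GetDeviceCount());
            Console.WriteLine("Name:    " + cuda.GetDeviceName(0));

            // Allocate GPU memory and copy a list of floats to it.
            hMem = cuda.AllocMemory(new List<float> { 1f, 2f, 3f, 4f });

            // Copy the GPU memory back to the host as an array of floats.
            float[] rg = cuda.GetMemoryFloat(hMem.Value);
            Console.WriteLine(string.Join(", ", rg));
        }
        finally
        {
            // Free the GPU memory first, then the CudaDnn instance itself.
            if (hMem.HasValue)
                cuda.FreeMemory(hMem.Value);

            cuda.Dispose();
        }
    }
}
```

The `try`/`finally` arrangement is a design choice for the sketch: GPU memory is identified by `long` handles rather than managed objects, so each handle is released explicitly with `FreeMemory` before `Dispose` is called.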

Public Member Functions | |

| CudaDnn (int nDeviceID, DEVINIT flags=(DEVINIT.CUBLAS|DEVINIT.CURAND), long? lSeed=null, string strPath="", bool bResetFirst=false, bool bEnableMemoryTrace=false) | |

| The CudaDnn constructor. More... | |

| CudaDnn (CudaDnn< T > cuda, bool bEnableGhostMemory) | |

| Alternate CudaDnn constructor. More... | |

| void | Dispose () |

| Disposes this instance freeing up all of its host and GPU memory. More... | |

| void | DisableGhostMemory () |

| Disables the ghost memory, if enabled. More... | |

| void | ResetGhostMemory () |

| Resets the ghost memory by enabling it if this instance was configured to use ghost memory. More... | |

| void | KernelCopy (int nCount, long hSrc, int nSrcOffset, long hDstKernel, long hDst, int nDstOffset, long hHostBuffer, long hHostKernel=-1, long hStream=-1, long hSrcKernel=-1) |

| Copy memory from the look-up tables in one kernel to another. More... | |

| void | KernelAdd (int nCount, long hA, long hDstKernel, long hB, long hC) |

| Add memory from one kernel to memory residing on another kernel. More... | |

| long | KernelCopyNccl (long hSrcKernel, long hSrcNccl) |

| Copies an Nccl handle from one kernel to the current kernel of the current CudaDnn instance. More... | |

| void | SetDeviceID (int nDeviceID=-1, DEVINIT flags=DEVINIT.NONE, long? lSeed=null) |

| Set the device ID used by the current instance of CudaDnn. More... | |

| void | SetRandomSeed (long lSeed) |

| Set the random number generator seed. More... | |

| int | GetDeviceID () |

| Returns the current device id set within Cuda. More... | |

| string | GetDeviceName (int nDeviceID) |

| Query the name of a device. More... | |

| string | GetDeviceP2PInfo (int nDeviceID) |

| Query the peer-to-peer information of a device. More... | |

| string | GetDeviceInfo (int nDeviceID, bool bVerbose=false) |

| Query the device information of a device. More... | |

| void | ResetDevice () |

| Reset the current device. More... | |

| void | SynchronizeDevice () |

| Synchronize the operations on the current device. More... | |

| int | GetMultiGpuBoardGroupID (int nDeviceID) |

| Query the multi-GPU board group id for a device. More... | |

| int | GetDeviceCount () |

| Query the number of devices (GPUs) installed. More... | |

| bool | CheckMemoryAttributes (long hSrc, int nSrcDeviceID, long hDst, int nDstDeviceID) |

| Check the memory attributes of two memory blocks on different devices to see if they are compatible for peer-to-peer memory transfers. More... | |

| double | GetDeviceMemory (out double dfFree, out double dfUsed, out bool bCudaCallUsed, int nDeviceID=-1) |

| Queries the amount of total, free and used memory on a given GPU. More... | |

| string | GetRequiredCompute (out int nMinMajor, out int nMinMinor) |

| The GetRequiredCompute function returns the major and minor compute values required by the CudaDNN DLL currently in use. More... | |

| bool | DeviceCanAccessPeer (int nSrcDeviceID, int nPeerDeviceID) |

| Query whether or not two devices can access each other via peer-to-peer memory copies. More... | |

| void | DeviceEnablePeerAccess (int nPeerDeviceID) |

| Enables peer-to-peer access between the current device used by the CudaDnn instance and a peer device. More... | |

| void | DeviceDisablePeerAccess (int nPeerDeviceID) |

| Disables peer-to-peer access between the current device used by the CudaDnn instance and a peer device. More... | |

| long | AllocMemory (List< double > rg) |

| Allocate a block of GPU memory and copy a list of doubles to it. More... | |

| long | AllocMemory (List< float > rg) |

| Allocate a block of GPU memory and copy a list of floats to it. More... | |

| long | AllocMemory (double[] rgSrc, long hStream=0) |

| Allocate a block of GPU memory and copy an array of doubles to it, optionally using a stream for the copy. More... | |

| long | AllocMemory (float[] rgSrc, long hStream=0) |

| Allocate a block of GPU memory and copy an array of floats to it, optionally using a stream for the copy. More... | |

| long | AllocMemory (T[] rgSrc, long hStream=0, bool bHalfSize=false) |

| Allocate a block of GPU memory and copy an array of type 'T' to it, optionally using a stream for the copy. More... | |

| long | AllocMemory (long lCapacity, bool bHalfSize=false) |

| Allocate a block of GPU memory with a specified capacity. More... | |

| void | FreeMemory (long hMem) |

| Free previously allocated GPU memory. More... | |

| void | CopyDeviceToHost (long lCount, long hGpuSrc, long hHostDst) |

| Copy from GPU memory to Host memory. More... | |

| void | CopyHostToDevice (long lCount, long hHostSrc, long hGpuDst) |

| Copy from Host memory to GPU memory. More... | |

| long | AllocHostBuffer (long lCapacity) |

| Allocate a block of host memory with a specified capacity. More... | |

| void | FreeHostBuffer (long hMem) |

| Free previously allocated host memory. More... | |

| long | GetHostBufferCapacity (long hMem) |

| Returns the host memory capacity. More... | |

| double[] | GetHostMemoryDouble (long hMem) |

| Retrieves the host memory as an array of doubles. More... | |

| float[] | GetHostMemoryFloat (long hMem) |

| Retrieves the host memory as an array of floats. More... | |

| T[] | GetHostMemory (long hMem) |

| Retrieves the host memory as an array of type 'T'. More... | |

| double[] | GetMemoryDouble (long hMem, long lCount=-1) |

| Retrieves the GPU memory as an array of doubles. More... | |

| float[] | GetMemoryFloat (long hMem, long lCount=-1) |

| Retrieves the GPU memory as an array of floats. More... | |

| T[] | GetMemory (long hMem, long lCount=-1) |

| Retrieves the GPU memory as an array of type 'T'. More... | |

| void | SetMemory (long hMem, List< double > rg) |

| Copies a list of doubles into a block of already allocated GPU memory. More... | |

| void | SetMemory (long hMem, List< float > rg) |

| Copies a list of floats into a block of already allocated GPU memory. More... | |

| void | SetMemory (long hMem, double[] rgSrc, long hStream=0) |

| Copies an array of double into a block of already allocated GPU memory. More... | |

| void | SetMemory (long hMem, float[] rgSrc, long hStream=0) |

| Copies an array of float into a block of already allocated GPU memory. More... | |

| void | SetMemory (long hMem, T[] rgSrc, long hStream=0, int nCount=-1) |

| Copies an array of type 'T' into a block of already allocated GPU memory. More... | |

| void | SetMemoryAt (long hMem, double[] rgSrc, int nOffset) |

| Copies an array of double into a block of already allocated GPU memory starting at a specific offset. More... | |

| void | SetMemoryAt (long hMem, float[] rgSrc, int nOffset) |

| Copies an array of float into a block of already allocated GPU memory starting at a specific offset. More... | |

| void | SetMemoryAt (long hMem, T[] rgSrc, int nOffset) |

| Copies an array of type 'T' into a block of already allocated GPU memory starting at a specific offset. More... | |

| T[] | SetPixel (long hMem, int nCount, bool bReturnOriginal, int nOffset, params Tuple< int, T >[] rgPixel) |

| Set pixel values, where each pixel is defined by an (index, value) tuple. More... | |

| void | SetHostMemory (long hMem, T[] rgSrc) |

| Copies an array of type 'T' into a block of already allocated host memory. More... | |

| long | CreateMemoryPointer (long hData, long lOffset, long lCount) |

| Creates a memory pointer into an already existing block of GPU memory. More... | |

| void | FreeMemoryPointer (long hData) |

| Frees a memory pointer. More... | |

| long | CreateMemoryTest (out ulong ulTotalNumBlocks, out double dfMemAllocatedInGB, out ulong ulMemStartAddr, out ulong ulBlockSize, double dfPctToAllocate=1.0) |

| Creates a new memory test on the current GPU. More... | |

| void | FreeMemoryTest (long h) |

| Free a memory test, freeing up all GPU memory used. More... | |

| T[] | RunMemoryTest (long h, MEMTEST_TYPE type, ulong ulBlockStartOffset, ulong ulBlockCount, bool bVerbose, bool bWrite, bool bReadWrite, bool bRead) |

| The RunMemoryTest method runs the memory test from the block start offset through the block count on the memory previously allocated using CreateMemoryTest. More... | |

| long | CreateImageOp (int nNum, double dfBrightnessProb, double dfBrightnessDelta, double dfContrastProb, double dfContrastLower, double dfContrastUpper, double dfSaturationProb, double dfSaturationLower, double dfSaturationUpper, long lRandomSeed=0) |

| Create a new ImageOp used to perform image operations on the GPU. More... | |

| void | FreeImageOp (long h) |

| Free an image op, freeing up all GPU memory used. More... | |

| void | DistortImage (long h, int nCount, int nNum, int nDim, long hX, long hY) |

| Distort an image using the ImageOp handle provided. More... | |

| long | CreateStream (bool bNonBlocking=false, int nIndex=-1) |

| Create a new stream on the current GPU. More... | |

| void | FreeStream (long h) |

| Free a stream. More... | |

| void | SynchronizeStream (long h=0) |

| Synchronize a stream on the current GPU, waiting for its operations to complete. More... | |

| void | SynchronizeThread () |

| Synchronize all kernel threads on the current GPU. More... | |

| long | CreateCuDNN (long hStream=0) |

| Create a new instance of NVIDIA's cuDnn. More... | |

| void | FreeCuDNN (long h) |

| Free an instance of cuDnn. More... | |

| long | CreateNCCL (int nDeviceId, int nCount, int nRank, Guid guid) |

| Create an instance of NVIDIA's NCCL ('Nickel'). More... | |

| void | FreeNCCL (long hNccl) |

| Free an instance of NCCL. More... | |

| void | NcclInitializeSingleProcess (params long[] rghNccl) |

| Initializes a set of NCCL instances for use in a single process. More... | |

| void | NcclInitializeMultiProcess (long hNccl) |

| Initializes a set of NCCL instances for use in different processes. More... | |

| void | NcclBroadcast (long hNccl, long hStream, long hX, int nCount) |

| Broadcasts a block of GPU data to all NCCL instances. More... | |

| void | NcclAllReduce (long hNccl, long hStream, long hX, int nCount, NCCL_REDUCTION_OP op, double dfScale=1.0) |

| Performs a reduction on all NCCL instances as specified by the reduction operation. More... | |

| long | CreateExtension (string strExtensionDllPath) |

| Create an instance of an Extension DLL. More... | |

| void | FreeExtension (long hExtension) |

| Free an instance of an Extension. More... | |

| T[] | RunExtension (long hExtension, long lfnIdx, T[] rgParam) |

| Run a function on the extension specified. More... | |

| long | CreateTensorDesc () |

| Create a new instance of a tensor descriptor for use with NVIDIA's cuDnn. More... | |

| void | FreeTensorDesc (long h) |

| Free a tensor descriptor instance. More... | |

| void | SetTensorNdDesc (long hHandle, int[] rgDim, int[] rgStride, bool bHalf=false) |

| Sets the values of a tensor descriptor. More... | |

| void | SetTensorDesc (long hHandle, int n, int c, int h, int w, bool bHalf=false) |

| Sets the values of a tensor descriptor. More... | |

| void | SetTensorDesc (long hHandle, int n, int c, int h, int w, int nStride, int cStride, int hStride, int wStride, bool bHalf=false) |

| Sets the values of a tensor descriptor. More... | |

| void | AddTensor (long hCuDnn, long hSrcDesc, long hSrc, int nSrcOffset, long hDstDesc, long hDst, int nDstOffset) |

| Add two tensors together. More... | |

| void | AddTensor (long hCuDnn, T fAlpha, long hSrcDesc, long hSrc, int nSrcOffset, T fBeta, long hDstDesc, long hDst, int nDstOffset) |

| Add two tensors together. More... | |

| long | CreateFilterDesc () |

| Create a new instance of a filter descriptor for use with NVIDIA's cuDnn. More... | |

| void | FreeFilterDesc (long h) |

| Free a filter descriptor instance. More... | |

| void | SetFilterNdDesc (long hHandle, int[] rgDim, bool bHalf=false) |

| Sets the values of a filter descriptor. More... | |

| void | SetFilterDesc (long hHandle, int n, int c, int h, int w, bool bHalf=false) |

| Sets the values of a filter descriptor. More... | |

| long | CreateConvolutionDesc () |

| Create a new instance of a convolution descriptor for use with NVIDIA's cuDnn. More... | |

| void | FreeConvolutionDesc (long h) |

| Free a convolution descriptor instance. More... | |

| void | SetConvolutionDesc (long hHandle, int hPad, int wPad, int hStride, int wStride, int hDilation, int wDilation, bool bUseTensorCores, bool bHalf=false) |

| Set the values of a convolution descriptor. More... | |

| void | GetConvolutionInfo (long hCuDnn, long hBottomDesc, long hFilterDesc, long hConvDesc, long hTopDesc, ulong lWorkspaceSizeLimitInBytes, bool bUseTensorCores, out CONV_FWD_ALGO algoFwd, out ulong lWsSizeFwd, out CONV_BWD_FILTER_ALGO algoBwdFilter, out ulong lWsSizeBwdFilter, out CONV_BWD_DATA_ALGO algoBwdData, out ulong lWsSizeBwdData, CONV_FWD_ALGO preferredFwdAlgo=CONV_FWD_ALGO.NONE) |

| Queries the algorithms and workspace sizes used for a given convolution descriptor. More... | |

| void | ConvolutionForward (long hCuDnn, long hBottomDesc, long hBottomData, int nBottomOffset, long hFilterDesc, long hWeight, int nWeightOffset, long hConvDesc, CONV_FWD_ALGO algoFwd, long hWorkspace, int nWorkspaceOffset, ulong lWorkspaceSize, long hTopDesc, long hTopData, int nTopOffset, bool bSyncStream=true) |

| Perform a convolution forward pass. More... | |

| void | ConvolutionForward (long hCuDnn, T fAlpha, long hBottomDesc, long hBottomData, int nBottomOffset, long hFilterDesc, long hWeight, int nWeightOffset, long hConvDesc, CONV_FWD_ALGO algoFwd, long hWorkspace, int nWorkspaceOffset, ulong lWorkspaceSize, T fBeta, long hTopDesc, long hTopData, int nTopOffset, bool bSyncStream=true) |

| Perform a convolution forward pass. More... | |

| void | ConvolutionBackwardBias (long hCuDnn, long hTopDesc, long hTopDiff, int nTopOffset, long hBiasDesc, long hBiasDiff, int nBiasOffset, bool bSyncStream=true) |

| Perform a convolution backward pass on the bias. More... | |

| void | ConvolutionBackwardBias (long hCuDnn, T fAlpha, long hTopDesc, long hTopDiff, int nTopOffset, T fBeta, long hBiasDesc, long hBiasDiff, int nBiasOffset, bool bSyncStream=true) |

| Perform a convolution backward pass on the bias. More... | |

| void | ConvolutionBackwardFilter (long hCuDnn, long hBottomDesc, long hBottomData, int nBottomOffset, long hTopDesc, long hTopDiff, int nTopOffset, long hConvDesc, CONV_BWD_FILTER_ALGO algoBwd, long hWorkspace, int nWorkspaceOffset, ulong lWorkspaceSize, long hFilterDesc, long hWeightDiff, int nWeightOffset, bool bSyncStream) |

| Perform a convolution backward pass on the filter. More... | |

| void | ConvolutionBackwardFilter (long hCuDnn, T fAlpha, long hBottomDesc, long hBottomData, int nBottomOffset, long hTopDesc, long hTopDiff, int nTopOffset, long hConvDesc, CONV_BWD_FILTER_ALGO algoBwd, long hWorkspace, int nWorkspaceOffset, ulong lWorkspaceSize, T fBeta, long hFilterDesc, long hWeightDiff, int nWeightOffset, bool bSyncStream=true) |

| Perform a convolution backward pass on the filter. More... | |

| void | ConvolutionBackwardData (long hCuDnn, long hFilterDesc, long hWeight, int nWeightOffset, long hTopDesc, long hTopDiff, int nTopOffset, long hConvDesc, CONV_BWD_DATA_ALGO algoBwd, long hWorkspace, int nWorkspaceOffset, ulong lWorkspaceSize, long hBottomDesc, long hBottomDiff, int nBottomOffset, bool bSyncStream=true) |

| Perform a convolution backward pass on the data. More... | |

| void | ConvolutionBackwardData (long hCuDnn, T fAlpha, long hFilterDesc, long hWeight, int nWeightOffset, long hTopDesc, long hTopDiff, int nTopOffset, long hConvDesc, CONV_BWD_DATA_ALGO algoBwd, long hWorkspace, int nWorkspaceOffset, ulong lWorkspaceSize, T fBeta, long hBottomDesc, long hBottomDiff, int nBottomOffset, bool bSyncStream=true) |

| Perform a convolution backward pass on the data. More... | |

| long | CreatePoolingDesc () |

| Create a new instance of a pooling descriptor for use with NVIDIA's cuDnn. More... | |

| void | FreePoolingDesc (long h) |

| Free a pooling descriptor instance. More... | |

| void | SetPoolingDesc (long hHandle, PoolingMethod method, int h, int w, int hPad, int wPad, int hStride, int wStride) |

| Set the values of a pooling descriptor. More... | |

| void | PoolingForward (long hCuDnn, long hPoolingDesc, T fAlpha, long hBottomDesc, long hBottomData, T fBeta, long hTopDesc, long hTopData) |

| Perform a pooling forward pass. More... | |

| void | PoolingBackward (long hCuDnn, long hPoolingDesc, T fAlpha, long hTopDataDesc, long hTopData, long hTopDiffDesc, long hTopDiff, long hBottomDataDesc, long hBottomData, T fBeta, long hBottomDiffDesc, long hBottomDiff) |

| Perform a pooling backward pass. More... | |

| void | DeriveBatchNormDesc (long hFwdScaleBiasMeanVarDesc, long hFwdBottomDesc, long hBwdScaleBiasMeanVarDesc, long hBwdBottomDesc, BATCHNORM_MODE mode) |

| Derive the batch norm descriptors for both the forward and backward passes. More... | |

| void | BatchNormForward (long hCuDnn, BATCHNORM_MODE mode, T fAlpha, T fBeta, long hFwdBottomDesc, long hBottomData, long hFwdTopDesc, long hTopData, long hFwdScaleBiasMeanVarDesc, long hScaleData, long hBiasData, double dfFactor, long hGlobalMean, long hGlobalVar, double dfEps, long hSaveMean, long hSaveInvVar, bool bTraining) |

| Run the batch norm forward pass. More... | |

| void | BatchNormBackward (long hCuDnn, BATCHNORM_MODE mode, T fAlphaDiff, T fBetaDiff, T fAlphaParamDiff, T fBetaParamDiff, long hBwdBottomDesc, long hBottomData, long hTopDiffDesc, long hTopDiff, long hBottomDiffDesc, long hBottomDiff, long hBwdScaleBiasMeanVarDesc, long hScaleData, long hScaleDiff, long hBiasDiff, double dfEps, long hSaveMean, long hSaveInvVar) |

| Run the batch norm backward pass. More... | |

| long | CreateDropoutDesc () |

| Create a new instance of a dropout descriptor for use with NVIDIA's cuDnn. More... | |

| void | FreeDropoutDesc (long h) |

| Free a dropout descriptor instance. More... | |

| void | SetDropoutDesc (long hCuDnn, long hDropoutDesc, double dfDropout, long hStates, long lSeed) |

| Set the dropout descriptor values. More... | |

| void | GetDropoutInfo (long hCuDnn, long hBottomDesc, out ulong ulStateCount, out ulong ulReservedCount) |

| Query the dropout state and reserved counts. More... | |

| void | DropoutForward (long hCuDnn, long hDropoutDesc, long hBottomDesc, long hBottomData, long hTopDesc, long hTopData, long hReserved) |

| Performs a dropout forward pass. More... | |

| void | DropoutBackward (long hCuDnn, long hDropoutDesc, long hTopDesc, long hTop, long hBottomDesc, long hBottom, long hReserved) |

| Performs a dropout backward pass. More... | |

| long | CreateLRNDesc () |

| Create a new instance of a LRN descriptor for use with NVIDIA's cuDnn. More... | |

| void | FreeLRNDesc (long h) |

| Free a LRN descriptor instance. More... | |

| void | SetLRNDesc (long hHandle, uint nSize, double fAlpha, double fBeta, double fK) |

| Set the LRN descriptor values. More... | |

| void | LRNCrossChannelForward (long hCuDnn, long hNormDesc, T fAlpha, long hBottomDesc, long hBottomData, T fBeta, long hTopDesc, long hTopData) |

| Perform LRN cross channel forward pass. More... | |

| void | LRNCrossChannelBackward (long hCuDnn, long hNormDesc, T fAlpha, long hTopDataDesc, long hTopData, long hTopDiffDesc, long hTopDiff, long hBottomDataDesc, long hBottomData, T fBeta, long hBottomDiffDesc, long hBottomDiff) |

| Perform LRN cross channel backward pass. More... | |

| void | DivisiveNormalizationForward (long hCuDnn, long hNormDesc, T fAlpha, long hBottomDataDesc, long hBottomData, long hTemp1, long hTemp2, T fBeta, long hTopDataDesc, long hTopData) |

| Performs a Divisive Normalization forward pass. More... | |

| void | DivisiveNormalizationBackward (long hCuDnn, long hNormDesc, T fAlpha, long hBottomDataDesc, long hBottomData, long hTopDiff, long hTemp1, long hTemp2, T fBeta, long hBottomDiffDesc, long hBottomDiff) |

| Performs a Divisive Normalization backward pass. More... | |

| void | TanhForward (long hCuDnn, T fAlpha, long hBottomDataDesc, long hBottomData, T fBeta, long hTopDataDesc, long hTopData) |

| Perform a Tanh forward pass. More... | |

| void | TanhBackward (long hCuDnn, T fAlpha, long hTopDataDesc, long hTopData, long hTopDiffDesc, long hTopDiff, long hBottomDataDesc, long hBottomData, T fBeta, long hBottomDiffDesc, long hBottomDiff) |

| Perform a Tanh backward pass. More... | |

| void | EluForward (long hCuDnn, T fAlpha, long hBottomDataDesc, long hBottomData, T fBeta, long hTopDataDesc, long hTopData) |

| Perform an Elu forward pass. More... | |

| void | EluBackward (long hCuDnn, T fAlpha, long hTopDataDesc, long hTopData, long hTopDiffDesc, long hTopDiff, long hBottomDataDesc, long hBottomData, T fBeta, long hBottomDiffDesc, long hBottomDiff) |

| Perform an Elu backward pass. More... | |

| void | SigmoidForward (long hCuDnn, T fAlpha, long hBottomDataDesc, long hBottomData, T fBeta, long hTopDataDesc, long hTopData) |

| Perform a Sigmoid forward pass. More... | |

| void | SigmoidBackward (long hCuDnn, T fAlpha, long hTopDataDesc, long hTopData, long hTopDiffDesc, long hTopDiff, long hBottomDataDesc, long hBottomData, T fBeta, long hBottomDiffDesc, long hBottomDiff) |

| Perform a Sigmoid backward pass. More... | |

| void | ReLUForward (long hCuDnn, T fAlpha, long hBottomDataDesc, long hBottomData, T fBeta, long hTopDataDesc, long hTopData) |

| Perform a ReLU forward pass. More... | |

| void | ReLUBackward (long hCuDnn, T fAlpha, long hTopDataDesc, long hTopData, long hTopDiffDesc, long hTopDiff, long hBottomDataDesc, long hBottomData, T fBeta, long hBottomDiffDesc, long hBottomDiff) |

| Perform a ReLU backward pass. More... | |

| void | SoftmaxForward (long hCuDnn, SOFTMAX_ALGORITHM alg, SOFTMAX_MODE mode, T fAlpha, long hBottomDataDesc, long hBottomData, T fBeta, long hTopDataDesc, long hTopData) |

| Perform a Softmax forward pass. More... | |

| void | SoftmaxBackward (long hCuDnn, SOFTMAX_ALGORITHM alg, SOFTMAX_MODE mode, T fAlpha, long hTopDataDesc, long hTopData, long hTopDiffDesc, long hTopDiff, T fBeta, long hBottomDiffDesc, long hBottomDiff) |

| Perform a Softmax backward pass. More... | |

| long | CreateRnnDataDesc () |

| Create the RNN Data Descriptor. More... | |

| void | FreeRnnDataDesc (long h) |

| Free an existing RNN Data descriptor. More... | |

| void | SetRnnDataDesc (long hRnnDataDesc, RNN_DATALAYOUT layout, int nMaxSeqLen, int nBatchSize, int nVectorSize, bool bBidirectional=false, int[] rgSeqLen=null) |

| Sets the RNN Data Descriptor values. More... | |

| long | CreateRnnDesc () |

| Create the RNN Descriptor. More... | |

| void | FreeRnnDesc (long h) |

| Free an existing RNN descriptor. More... | |

| void | SetRnnDesc (long hCuDnn, long hRnnDesc, int nHiddenCount, int nNumLayers, long hDropoutDesc, RNN_MODE mode, bool bUseTensorCores, RNN_DIRECTION direction=RNN_DIRECTION.RNN_UNIDIRECTIONAL) |

| Sets the RNN Descriptor values. More... | |

| int | GetRnnParamCount (long hCuDnn, long hRnnDesc, long hXDesc) |

| Returns the RNN parameter count. More... | |

| ulong | GetRnnWorkspaceCount (long hCuDnn, long hRnnDesc, long hXDesc, out ulong nReservedCount) |

| Returns the workspace and reserved counts. More... | |

| void | GetRnnLinLayerParams (long hCuDnn, long hRnnDesc, int nLayer, long hXDesc, long hWtDesc, long hWtData, int nLinLayer, out int nWtCount, out long hWt, out int nBiasCount, out long hBias) |

| Returns the linear layer parameters (weights). More... | |

| void | RnnForward (long hCuDnn, long hRnnDesc, long hXDesc, long hXData, long hHxDesc, long hHxData, long hCxDesc, long hCxData, long hWtDesc, long hWtData, long hYDesc, long hYData, long hHyDesc, long hHyData, long hCyDesc, long hCyData, long hWorkspace, ulong nWsCount, long hReserved, ulong nResCount, bool bTraining) |

| Run the RNN through a forward pass. More... | |

| void | RnnBackwardData (long hCuDnn, long hRnnDesc, long hYDesc, long hYData, long hYDiff, long hHyDesc, long hHyDiff, long hCyDesc, long hCyDiff, long hWtDesc, long hWtData, long hHxDesc, long hHxData, long hCxDesc, long hCxData, long hXDesc, long hXDiff, long hdHxDesc, long hHxDiff, long hdCxDesc, long hCxDiff, long hWorkspace, ulong nWsCount, long hReserved, ulong nResCount) |

| Run the RNN backward pass through the data. More... | |

| void | RnnBackwardWeights (long hCuDnn, long hRnnDesc, long hXDesc, long hXData, long hHxDesc, long hHxData, long hYDesc, long hYData, long hWorkspace, ulong nWsCount, long hWtDesc, long hWtDiff, long hReserved, ulong nResCount) |

| Run the RNN backward pass on the weights. More... | |

| bool | IsRnn8Supported () |

| Returns whether or not RNN8 is supported. More... | |

| long | CreateRnn8 () |

| Create the RNN8. More... | |

| void | FreeRnn8 (long h) |

| Free an existing RNN8. More... | |

| void | SetRnn8 (long hCuDnn, long hRnn, bool bTraining, RNN_DATALAYOUT layout, RNN_MODE cellMode, RNN_BIAS_MODE biasMode, int nSequenceLen, int nBatchSize, int nInputs, int nHidden, int nOutputs, int nProjection, int nNumLayers, float fDropout, ulong lSeed, bool bBidirectional=false) |

| Set the RNN8 parameters. More... | |

| void | GetRnn8MemorySizes (long hCuDnn, long hRnn, out ulong szWtCount, out ulong szWorkSize, out ulong szReservedSize) |

| Returns the memory sizes required for the RNN8. More... | |

| void | InitializeRnn8Weights (long hCuDnn, long hRnn, long hWt, RNN_FILLER_TYPE wtFt, double fWtVal, double fWtVal2, RNN_FILLER_TYPE biasFt, double fBiasVal, double fBiasVal2) |

| Initialize the RNN8 weights. More... | |

| void | Rnn8Forward (long hCuDnn, long hRnn, long hX, long hY, long hhX, long hhY, long hcX, long hcY, long hWts, long hWork, long hReserved) |

| Calculate the forward pass through the RNN8. More... | |

| void | Rnn8Backward (long hCuDnn, long hRnn, long hY, long hdY, long hX, long hdX, long hhX, long hdhY, long hdhX, long hcX, long hdcY, long hdcX, long hWt, long hdWt, long hWork, long hReserved) |

| Calculate the backward pass through the RNN8 for both data and weights. More... | |

| long | AllocPCAData (int nM, int nN, int nK, out int nCount) |

| Allocates the GPU memory for the PCA Data. More... | |

| long | AllocPCAScores (int nM, int nN, int nK, out int nCount) |

| Allocates the GPU memory for the PCA scores. More... | |

| long | AllocPCALoads (int nM, int nN, int nK, out int nCount) |

| Allocates the GPU memory for the PCA loads. More... | |

| long | AllocPCAEigenvalues (int nM, int nN, int nK, out int nCount) |

| Allocates the GPU memory for the PCA eigenvalues. More... | |

| long | CreatePCA (int nMaxIterations, int nM, int nN, int nK, long hData, long hScoresResult, long hLoadsResult, long hResiduals=0, long hEigenvalues=0) |

| Creates a new PCA instance and returns the handle to it. More... | |

| bool | RunPCA (long hPCA, int nSteps, out int nCurrentK, out int nCurrentIteration) |

| Runs a number of steps of the iterative PCA algorithm. More... | |

| void | FreePCA (long hPCA) |

| Free the PCA instance associated with handle. More... | |

| long | CreateSSD (int nNumClasses, bool bShareLocation, int nLocClasses, int nBackgroundLabelId, bool bUseDiffcultGt, SSD_MINING_TYPE miningType, SSD_MATCH_TYPE matchType, float fOverlapThreshold, bool bUsePriorForMatching, SSD_CODE_TYPE codeType, bool bEncodeVariantInTgt, bool bBpInside, bool bIgnoreCrossBoundaryBbox, bool bUsePriorForNms, SSD_CONF_LOSS_TYPE confLossType, SSD_LOC_LOSS_TYPE locLossType, float fNegPosRatio, float fNegOverlap, int nSampleSize, bool bMapObjectToAgnostic, bool bNmsParam, float? fNmsThreshold=null, int? nNmsTopK=null, float? fNmsEta=null) |

| Create an instance of the SSD GPU support. More... | |

| void | SetupSSD (long hSSD, int nNum, int nNumPriors, int nNumGt) |

| Setup the SSD GPU support. More... | |

| void | FreeSSD (long hSSD) |

| Free the instance of SSD GPU support. More... | |

| int | SsdMultiBoxLossForward (long hSSD, int nLocDataCount, long hLocGpuData, int nConfDataCount, long hConfGpuData, int nPriorDataCount, long hPriorGpuData, int nGtDataCount, long hGtGpuData, out List< DictionaryMap< List< int > > > rgAllMatchIndices, out List< List< int > > rgrgAllNegIndices, out int nNumNegs) |

| Performs the SSD MultiBoxLoss forward operation. More... | |

| void | SsdEncodeLocPrediction (long hSSD, int nLocPredCount, long hLocPred, int nLocGtCount, long hLocGt) |

| Encodes the SSD data into the location prediction and location ground truths. More... | |

| void | SsdEncodeConfPrediction (long hSSD, int nConfPredCount, long hConfPred, int nConfGtCount, long hConfGt) |

| Encodes the SSD data into the confidence prediction and confidence ground truths. More... | |

| long | CreateLayerNorm (int nGpuID, int nCount, int nOuterNum, int nChannels, int nInnerNum, float fEps=1e-10f) |

| Create the Cuda version of LayerNorm. More... | |

| void | FreeLayerNorm (long hLayerNorm) |

| Free the instance of LayerNorm GPU support. More... | |

| void | LayerNormForward (long hLayerNorm, long hXdata, long hYdata) |

| Run the LayerNorm forward pass. More... | |

| void | LayerNormBackward (long hLayerNorm, long hYdata, long hYdiff, long hXdiff) |

| Run the LayerNorm backward pass. More... | |

| void | set (int nCount, long hHandle, double fVal, int nIdx=-1) |

| Set the values of GPU memory to a specified value of type double. More... | |

| void | set (int nCount, long hHandle, float fVal, int nIdx=-1) |

| Set the values of GPU memory to a specified value of type float. More... | |

| void | set (int nCount, long hHandle, T fVal, int nIdx=-1, int nXOff=0) |

| Set the values of GPU memory to a specified value of type 'T'. More... | |

| double[] | get_double (int nCount, long hHandle, int nIdx=-1) |

| Queries the GPU memory by copying it into an array of doubles. More... | |

| float[] | get_float (int nCount, long hHandle, int nIdx=-1) |

| Queries the GPU memory by copying it into an array of floats. More... | |

| T[] | get (int nCount, long hHandle, int nIdx=-1) |

| Queries the GPU memory by copying it into an array of type 'T'. More... | |

| void | copy (int nCount, long hSrc, long hDst, int nSrcOffset=0, int nDstOffset=0, long hStream=-1, bool? bSrcHalfSizeOverride=null, bool? bDstHalfSizeOverride=null) |

| Copy data from one block of GPU memory to another. More... | |

| void | copy (int nCount, int nNum, int nDim, long hSrc1, long hSrc2, long hDst, long hSimilar, bool bInvert=false) |

| Copy similar items of length 'nDim' from hSrc1 (where hSimilar(i) = 1) and dissimilar items of length 'nDim' from hSrc2 (where hSimilar(i) = 0). More... | |

| void | copy_batch (int nCount, int nNum, int nDim, long hSrcData, long hSrcLbl, int nDstCount, long hDstCache, long hWorkDevData, int nLabelStart, int nLabelCount, int nCacheSize, long hCacheHostCursors, long hWorkDataHost) |

| Copy a batch of labeled items into a cache organized by label where older data is removed and replaced by newer data. More... | |

| void | copy_sequence (int nK, int nNum, int nDim, long hSrcData, long hSrcLbl, int nSrcCacheCount, long hSrcCache, int nLabelStart, int nLabelCount, int nCacheSize, long hCacheHostCursors, bool bOutputLabels, List< long > rghTop, List< int > rgnTopCount, long hWorkDataHost, bool bCombinePositiveAndNegative=false, int nSeed=0) |

| Copy a sequence of cached items, organized by label, into anchor, positive (if nK > 0), and negative blobs. More... | |

| void | copy_sequence (int n, long hSrc, int nSrcStep, int nSrcStartIdx, int nCopyCount, int nCopyDim, long hDst, int nDstStep, int nDstStartIdx, int nSrcSpatialDim, int nDstSpatialDim, int nSrcSpatialDimStartIdx=0, int nDstSpatialDimStartIdx=0, int nSpatialDimCount=-1) |

| Copy a sequence from a source to a destination and allow for skip steps. More... | |

| void | copy_expand (int n, int nNum, int nDim, long hX, long hA) |

| Expand a vector of length 'nNum' into a matrix of size 'nNum' x 'nDim' by copying each value of the vector into all elements of the corresponding matrix row. More... | |
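
As a rough illustration of the expansion described above, the following CPU-side sketch fills each row of an 'nNum' x 'nDim' matrix with the matching vector element. The names are hypothetical and row-major storage is an assumption of the sketch, not something the listing states.

```csharp
using System;

static class CopyExpandSketch
{
    // Hypothetical CPU reference: row i of the nNum x nDim result
    // (row-major, an assumption) is filled with x[i].
    public static float[] CopyExpand(int nNum, int nDim, float[] x)
    {
        if (x.Length < nNum)
            throw new ArgumentException("x must hold at least nNum values.");

        float[] a = new float[nNum * nDim];
        for (int i = 0; i < nNum; i++)
        {
            for (int j = 0; j < nDim; j++)
                a[i * nDim + j] = x[i];
        }
        return a;
    }
}
```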

| void | fill (int n, int nDim, long hSrc, int nSrcOff, int nCount, long hDst) |

| Fill data from the source data 'n' times in the destination. More... | |

| void | sort (int nCount, long hY) |

| Sort the data in the GPU memory specified. More... | |

| void | gemm (bool bTransA, bool bTransB, int m, int n, int k, double fAlpha, long hA, long hB, double fBeta, long hC) |

| Perform a matrix-matrix multiplication operation: C = alpha transB (B) transA (A) + beta C More... | |

| void | gemm (bool bTransA, bool bTransB, int m, int n, int k, float fAlpha, long hA, long hB, float fBeta, long hC) |

| Perform a matrix-matrix multiplication operation: C = alpha transB (B) transA (A) + beta C More... | |

| void | gemm (bool bTransA, bool bTransB, int m, int n, int k, T fAlpha, long hA, long hB, T fBeta, long hC, int nAOffset=0, int nBOffset=0, int nCOffset=0, int nGroups=1, int nGroupOffsetA=0, int nGroupOffsetB=0, int nGroupOffsetC=0) |

| Perform a matrix-matrix multiplication operation: C = alpha transB (B) transA (A) + beta C More... | |

| void | gemm (bool bTransA, bool bTransB, int m, int n, int k, double fAlpha, long hA, long hB, double fBeta, long hC, uint lda, uint ldb, uint ldc) |

| Perform a matrix-matrix multiplication operation: C = alpha transB (B) transA (A) + beta C More... | |

| void | gemm (bool bTransA, bool bTransB, int m, int n, int k, double fAlpha, long hA, long hB, double fBeta, long hC, uint lda, uint ldb, uint ldc, uint stridea, uint strideb, uint stridec, uint batch_count) |

| Perform a matrix-matrix multiplication operation: C = alpha transB (B) transA (A) + beta C More... | |

| void | geam (bool bTransA, bool bTransB, int m, int n, double fAlpha, long hA, long hB, double fBeta, long hC) |

| Perform a matrix-matrix addition/transposition operation: C = alpha transA (A) + beta transB (B) More... | |

| void | geam (bool bTransA, bool bTransB, int m, int n, float fAlpha, long hA, long hB, float fBeta, long hC) |

| Perform a matrix-matrix addition/transposition operation: C = alpha transA (A) + beta transB (B) More... | |

| void | geam (bool bTransA, bool bTransB, int m, int n, T fAlpha, long hA, long hB, T fBeta, long hC, int nAOffset=0, int nBOffset=0, int nCOffset=0) |

| Perform a matrix-matrix addition/transposition operation: C = alpha transA (A) + beta transB (B) More... | |

| void | gemv (bool bTransA, int m, int n, double fAlpha, long hA, long hX, double fBeta, long hY) |

| Perform a matrix-vector multiplication operation: y = alpha transA (A) x + beta y (where x and y are vectors) More... | |

| void | gemv (bool bTransA, int m, int n, float fAlpha, long hA, long hX, float fBeta, long hY) |

| Perform a matrix-vector multiplication operation: y = alpha transA (A) x + beta y (where x and y are vectors) More... | |

| void | gemv (bool bTransA, int m, int n, T fAlpha, long hA, long hX, T fBeta, long hY, int nAOffset=0, int nXOffset=0, int nYOffset=0) |

| Perform a matrix-vector multiplication operation: y = alpha transA (A) x + beta y (where x and y are vectors) More... | |
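
The formula y = alpha transA (A) x + beta y can be checked against a plain CPU loop. The sketch below is hypothetical: it assumes A is stored row-major as an m x n matrix, which the listing does not specify, and it works on host arrays instead of GPU handles.

```csharp
using System;

static class GemvSketch
{
    // Hypothetical CPU reference for y = alpha * op(A) * x + beta * y,
    // where op(A) is A or its transpose and A is m x n, row-major (assumption).
    public static void Gemv(bool bTransA, int m, int n, double alpha,
                            double[] a, double[] x, double beta, double[] y)
    {
        int rows = bTransA ? n : m;   // length of y
        int cols = bTransA ? m : n;   // length of x

        for (int i = 0; i < rows; i++)
        {
            double sum = 0;
            for (int j = 0; j < cols; j++)
            {
                // op(A)[i, j] is A[j, i] when transposed, A[i, j] otherwise.
                double aij = bTransA ? a[j * n + i] : a[i * n + j];
                sum += aij * x[j];
            }
            y[i] = alpha * sum + beta * y[i];
        }
    }
}
```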

| void | ger (int m, int n, double fAlpha, long hX, long hY, long hA) |

| Perform a vector-vector multiplication operation: A = x * (fAlpha * y) (where x and y are vectors and A is an m x n Matrix) More... | |

| void | ger (int m, int n, float fAlpha, long hX, long hY, long hA) |

| Perform a vector-vector multiplication operation: A = x * (fAlpha * y) (where x and y are vectors and A is an m x n Matrix) More... | |

| void | ger (int m, int n, T fAlpha, long hX, long hY, long hA) |

| Perform a vector-vector multiplication operation: A = x * (fAlpha * y) (where x and y are vectors and A is an m x n Matrix) More... | |

| void | axpy (int n, double fAlpha, long hX, long hY) |

| Multiply the vector X by a scalar and add the result to the vector Y. More... | |

| void | axpy (int n, float fAlpha, long hX, long hY) |

| Multiply the vector X by a scalar and add the result to the vector Y. More... | |

| void | axpy (int n, T fAlpha, long hX, long hY, int nXOff=0, int nYOff=0) |

| Multiply the vector X by a scalar and add the result to the vector Y. More... | |

| void | axpby (int n, double fAlpha, long hX, double fBeta, long hY) |

| Scale the vector x by Alpha and scale vector y by Beta and then add both together. More... | |

| void | axpby (int n, float fAlpha, long hX, float fBeta, long hY) |

| Scale the vector x by Alpha and scale vector y by Beta and then add both together. More... | |

| void | axpby (int n, T fAlpha, long hX, T fBeta, long hY) |

| Scale the vector x by Alpha and scale vector y by Beta and then add both together. More... | |
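
The difference between axpy and axpby is easiest to see side by side. The following is a hypothetical CPU sketch using the conventional BLAS definitions (y = alpha x + y and y = alpha x + beta y); it operates on host arrays and is not the library's GPU implementation.

```csharp
using System;

static class AxpbySketch
{
    // y = alpha * x + y
    public static void Axpy(int n, double alpha, double[] x, double[] y)
    {
        for (int i = 0; i < n; i++)
            y[i] += alpha * x[i];
    }

    // y = alpha * x + beta * y
    public static void Axpby(int n, double alpha, double[] x, double beta, double[] y)
    {
        for (int i = 0; i < n; i++)
            y[i] = alpha * x[i] + beta * y[i];
    }
}
```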

| void | mulbsx (int n, long hA, int nAOff, long hX, int nXOff, int nC, int nSpatialDim, bool bTranspose, long hB, int nBOff) |

| Multiply a matrix with a vector. More... | |

| void | divbsx (int n, long hA, int nAOff, long hX, int nXOff, int nC, int nSpatialDim, bool bTranspose, long hB, int nBOff) |

| Divide a matrix by a vector. More... | |

| void | matmul (uint nOuterCount, int m, int n, int k, long hA, long hB, long hC, double dfScale=1.0, bool bTransA=false, bool bTransB=false) |

| Perform matmul operation hC = matmul(hA, hB), where hA, hB and hC are all in row-major format. More... | |

| void | transposeHW (int n, int c, int h, int w, long hSrc, long hDst) |

| Transpose a n*c number of matrices along the height and width dimensions. All matrices are in row-major format. More... | |

| void | set_bounds (int n, double dfMin, double dfMax, long hX) |

| Set the bounds of all items within the data to a set range of values. More... | |

| void | scal (int n, double fAlpha, long hX, int nXOff=0) |

| Scales the data in X by a scaling factor. More... | |

| void | scal (int n, float fAlpha, long hX, int nXOff=0) |

| Scales the data in X by a scaling factor. More... | |

| void | scal (int n, T fAlpha, long hX, int nXOff=0) |

| Scales the data in X by a scaling factor. More... | |

| double | dot_double (int n, long hX, long hY) |

| Computes the dot product of X and Y. More... | |

| float | dot_float (int n, long hX, long hY) |

| Computes the dot product of X and Y. More... | |

| T | dot (int n, long hX, long hY, int nXOff=0, int nYOff=0) |

| Computes the dot product of X and Y. More... | |

| double | asum_double (int n, long hX, int nXOff=0) |

| Computes the sum of absolute values in X. More... | |

| float | asum_float (int n, long hX, int nXOff=0) |

| Computes the sum of absolute values in X. More... | |

| T | asum (int n, long hX, int nXOff=0) |

| Computes the sum of absolute values in X. More... | |

| void | scale (int n, double fAlpha, long hX, long hY) |

| Scales the values in X and places them in Y. More... | |

| void | scale (int n, float fAlpha, long hX, long hY) |

| Scales the values in X and places them in Y. More... | |

| void | scale (int n, T fAlpha, long hX, long hY, int nXOff=0, int nYOff=0) |

| Scales the values in X and places them in Y. More... | |

| void | scale_to_range (int n, long hX, long hY, double fMin, double fMax) |

| Scales the values in X and places the result in Y (can also run inline where X = Y). More... | |

| double | erf (double dfVal) |

| Calculates the erf() function. More... | |

| float | erf (float fVal) |

| Calculates the erf() function. More... | |

| T | erf (T fVal) |

| Calculates the erf() function. More... | |

| void | mask (int n, int nMaskDim, T fSearch, T fReplace, long hX, long hMask, long hY) |

| Mask the data in the source with the mask by replacing all values 'fSearch' found in the mask with 'fReplace' in the destination. More... | |

| void | mask (int n, int nMaskDim, double fSearch, double fReplace, long hX, long hMask, long hY) |

| Mask the data in the source with the mask by replacing all values 'fSearch' found in the mask with 'fReplace' in the destination. More... | |

| void | mask (int n, int nMaskDim, float fSearch, float fReplace, long hX, long hMask, long hY) |

| Mask the data in the source with the mask by replacing all values 'fSearch' found in the mask with 'fReplace' in the destination. More... | |

| void | mask_batch (int n, int nBatch, int nMaskDim, T fSearch, T fReplace, long hX, long hMask, long hY) |

| Mask the batch of data in the source with the mask by replacing all values 'fSearch' found in the mask with 'fReplace' in the destination. More... | |

| void | mask_batch (int n, int nBatch, int nMaskDim, double fSearch, double fReplace, long hX, long hMask, long hY) |

| Mask the batch of data in the source with the mask by replacing all values 'fSearch' found in the mask with 'fReplace' in the destination. More... | |

| void | mask_batch (int n, int nBatch, int nMaskDim, float fSearch, float fReplace, long hX, long hMask, long hY) |

| Mask the batch of data in the source with the mask by replacing all values 'fSearch' found in the mask with 'fReplace' in the destination. More... | |

| void | interp2 (int nChannels, long hData1, int nX1, int nY1, int nHeight1, int nWidth1, int nHeight1A, int nWidth1A, long hData2, int nX2, int nY2, int nHeight2, int nWidth2, int nHeight2A, int nWidth2A, bool bBwd=false) |

| Interpolates between two sizes within the spatial dimensions. More... | |

| void | add_scalar (int n, double fAlpha, long hY) |

| Adds a scalar value to each element of Y. More... | |

| void | add_scalar (int n, float fAlpha, long hY) |

| Adds a scalar value to each element of Y. More... | |

| void | add_scalar (int n, T fAlpha, long hY, int nYOff=0) |

| Adds a scalar value to each element of Y. More... | |

| void | add (int n, long hA, long hB, long hC, long hY) |

| Adds A, B and C and places the result in Y. More... | |

| void | add (int n, long hA, long hB, long hY) |

| Adds A to B and places the result in Y. More... | |

| void | add (int n, long hA, long hB, long hY, double dfAlpha) |

| Adds A to (B times scalar) and places the result in Y. More... | |

| void | add (int n, long hA, long hB, long hY, float fAlpha) |

| Adds A to (B times scalar) and places the result in Y. More... | |

| void | add (int n, long hA, long hB, long hY, double dfAlphaA, double dfAlphaB, int nAOff=0, int nBOff=0, int nYOff=0) |

| Adds (A times dfAlphaA) to (B times dfAlphaB) and places the result in Y. More... | |

| void | sub (int n, long hA, long hB, long hY, int nAOff=0, int nBOff=0, int nYOff=0, int nB=0) |

| Subtracts B from A and places the result in Y. More... | |

| void | mul (int n, long hA, long hB, long hY, int nAOff=0, int nBOff=0, int nYOff=0) |

| Multiplies each element of A with each element of B and places the result in Y. More... | |

| void | sub_and_dot (int n, int nN, int nInnerNum, long hA, long hB, long hY, int nAOff, int nBOff, int nYOff) |

| Subtracts every nInnerNum element of B from A and performs a dot product on the result. More... | |

| void | mul_scalar (int n, double fAlpha, long hY) |

| Multiply each element of Y by a scalar. More... | |

| void | mul_scalar (int n, float fAlpha, long hY) |

| Multiply each element of Y by a scalar. More... | |

| void | mul_scalar (int n, T fAlpha, long hY) |

| Multiply each element of Y by a scalar. More... | |

| void | div (int n, long hA, long hB, long hY) |

| Divides each element of A by each element of B and places the result in Y. More... | |

| void | abs (int n, long hA, long hY) |

| Calculates the absolute value of A and places the result in Y. More... | |

| void | exp (int n, long hA, long hY) |

| Calculates the exponent value of A and places the result in Y. More... | |

| void | exp (int n, long hA, long hY, int nAOff, int nYOff, double dfBeta) |

| Calculates the exponent value of A * beta and places the result in Y. More... | |

| void | log (int n, long hA, long hY) |

| Calculates the log value of A and places the result in Y. More... | |

| void | log (int n, long hA, long hY, double dfBeta, double dfAlpha=0) |

| Calculates the log value of (A * beta) + alpha, and places the result in Y. More... | |
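
A small CPU sketch of the scaled and shifted log described above, y = log((A * beta) + alpha). It is hypothetical and assumes the natural logarithm, which the listing does not state explicitly. The default `beta = 1.0` is a convenience of this sketch; the listed overload has no default for `dfBeta`.

```csharp
using System;

static class LogSketch
{
    // Hypothetical CPU reference: y[i] = ln(a[i] * beta + alpha).
    public static double[] Log(double[] a, double beta = 1.0, double alpha = 0.0)
    {
        double[] y = new double[a.Length];
        for (int i = 0; i < a.Length; i++)
            y[i] = Math.Log(a[i] * beta + alpha);
        return y;
    }
}
```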

| void | powx (int n, long hA, double fAlpha, long hY, int nAOff=0, int nYOff=0) |

| Calculates A raised to the power alpha and places the result in Y. More... | |

| void | powx (int n, long hA, float fAlpha, long hY, int nAOff=0, int nYOff=0) |

| Calculates A raised to the power alpha and places the result in Y. More... | |

| void | powx (int n, long hA, T fAlpha, long hY, int nAOff=0, int nYOff=0) |

| Calculates A raised to the power alpha and places the result in Y. More... | |

| void | sign (int n, long hX, long hY, int nXOff=0, int nYOff=0) |

| Computes the sign of each element of X and places the result in Y. More... | |

| void | sqrt (int n, long hX, long hY) |

| Computes the square root of each element of X and places the result in Y. More... | |

| void | sqrt_scale (int nCount, long hX, long hY) |

| Scale the data by the sqrt of the data. y = sqrt(abs(x)) * sign(x) More... | |
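
The formula y = sqrt(abs(x)) * sign(x) compresses magnitudes while keeping the sign. A hypothetical CPU sketch of that formula, with illustrative names and host arrays in place of GPU handles:

```csharp
using System;

static class SqrtScaleSketch
{
    // Hypothetical CPU reference: y[i] = sqrt(|x[i]|) * sign(x[i]).
    // Note: Math.Sign throws ArithmeticException when given NaN.
    public static double[] SqrtScale(double[] x)
    {
        double[] y = new double[x.Length];
        for (int i = 0; i < x.Length; i++)
            y[i] = Math.Sqrt(Math.Abs(x[i])) * Math.Sign(x[i]);
        return y;
    }
}
```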

| void | compare_signs (int n, long hA, long hB, long hY) |

| Compares the signs of each value in A and B and places the result in Y. More... | |

| void | max (int n, long hA, long hB, long hY) |

| Calculates the max of A and B and places the result in Y. This max is only computed on a per item basis, so the shape of Y = the shape of A and B and Y(0) contains the max of A(0) and B(0), etc. More... | |

| void | max_bwd (int n, long hAdata, long hBdata, long hYdiff, long hAdiff, long hBdiff) |

| Propagates the Y diff back to the max of A or B and places the result in A if its data has the max, or B if its data has the max. More... | |

| void | min (int n, long hA, long hB, long hY) |

| Calculates the min of A and B and places the result in Y. This min is only computed on a per item basis, so the shape of Y = the shape of A and B and Y(0) contains the min of A(0) and B(0), etc. More... | |

| double | max (int n, long hA, out long lPos, int nAOff=0, long hWork=0) |

| Finds the maximum value of A. More... | |

| double | min (int n, long hA, out long lPos, int nAOff=0, long hWork=0) |

| Finds the minimum value of A. More... | |

| Tuple< double, double, double, double > | minmax (int n, long hA, long hWork1, long hWork2, bool bDetectNans=false, int nAOff=0) |

| Finds the minimum and maximum values within A. More... | |

| void | minmax (int n, long hA, long hWork1, long hWork2, int nK, long hMin, long hMax, bool bNonZeroOnly) |

| Finds up to 'nK' minimum and maximum values within A. More... | |

| void | transpose (int n, long hX, long hY, long hXCounts, long hYCounts, long hMapping, int nNumAxes, long hBuffer) |

| Perform a transpose on X producing Y, similar to the numpy.transpose operation. More... | |

| double | sumsq (int n, long hW, long hA, int nAOff=0) |

| Calculates the sum of squares of A. More... | |

| double | sumsqdiff (int n, long hW, long hA, long hB, int nAOff=0, int nBOff=0) |

| Calculates the sum of squares of differences between A and B More... | |
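
For reference, the two reductions above can be written as simple CPU loops. This sketch is hypothetical: it omits the `hW` work buffer, which presumably exists only for the GPU reduction, and it treats the offsets as element offsets into host arrays.

```csharp
using System;

static class SumSqSketch
{
    // Sum of a[i]^2 over n elements starting at nAOff.
    public static double SumSq(int n, double[] a, int nAOff = 0)
    {
        double sum = 0;
        for (int i = 0; i < n; i++)
            sum += a[nAOff + i] * a[nAOff + i];
        return sum;
    }

    // Sum of (a[i] - b[i])^2 over n elements starting at the given offsets.
    public static double SumSqDiff(int n, double[] a, double[] b, int nAOff = 0, int nBOff = 0)
    {
        double sum = 0;
        for (int i = 0; i < n; i++)
        {
            double d = a[nAOff + i] - b[nBOff + i];
            sum += d * d;
        }
        return sum;
    }
}
```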

| void | width (int n, long hMean, long hMin, long hMax, double dfAlpha, long hWidth) |

| Calculates the width values. More... | |

| bool | contains_point (int n, long hMean, long hWidth, long hX, long hWork, int nXOff=0) |

| Returns true if the point is contained within the bounds. More... | |

| void | denan (int n, long hX, double dfReplacement) |

| Replaces all NaN values within X with a replacement value. More... | |

| void | im2col (long hDataIm, int nDataImOffset, int nChannels, int nHeight, int nWidth, int nKernelH, int nKernelW, int nPadH, int nPadW, int nStrideH, int nStrideW, int nDilationH, int nDilationW, long hDataCol, int nDataColOffset) |

| Rearranges image blocks into columns. More... | |

| void | im2col_nd (long hDataIm, int nDataImOffset, int nNumSpatialAxes, int nImCount, int nChannelAxis, long hImShape, long hColShape, long hKernelShape, long hPad, long hStride, long hDilation, long hDataCol, int nDataColOffset) |

| Rearranges image blocks into columns. More... | |

| void | col2im (long hDataCol, int nDataColOffset, int nChannels, int nHeight, int nWidth, int nKernelH, int nKernelW, int nPadH, int nPadW, int nStrideH, int nStrideW, int nDilationH, int nDilationW, long hDataIm, int nDataImOffset) |

| Rearranges the columns into image blocks. More... | |

| void | col2im_nd (long hDataCol, int nDataColOffset, int nNumSpatialAxes, int nColCount, int nChannelAxis, long hImShape, long hColShape, long hKernelShape, long hPad, long hStride, long hDilation, long hDataIm, int nDataImOffset) |

| Rearranges the columns into image blocks. More... | |

| void | channel_min (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY, bool bReturnIdx=false) |

| Calculates the minimum value within each channel of X and places the result in Y. More... | |

| void | channel_max (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY, bool bReturnIdx=false) |

| Calculates the maximum value within each channel of X and places the result in Y. More... | |

| void | channel_mean (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY) |

| Calculates the mean value of each channel of X and places the result in Y. More... | |

| void | channel_compare (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY) |

| Compares the values of the channels from X and places the result in Y where 1 is set if the values are equal otherwise 0 is set. More... | |

| void | channel_fillfrom (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY, DIR dir) |

| Fills each channel with the values stored in the source data, where the X data contains nOuterNum x nChannels of data (e.g. one item per channel) that is then copied to all nInnerNum elements of each channel in Y. More... | |

| void | channel_fill (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, int nLabelDim, long hLabels, long hY) |

| Fills each channel item of Y with the data of X matching the label index specified by hLabels. More... | |

| void | channel_sub (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hA, long hX, long hY) |

| Subtracts the values across the channels of X from A and places the result in Y. More... | |

| void | channel_sub (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY) |

| Subtracts the values across the channels from X and places the result in Y. More... | |

| void | channel_sum (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY, bool bSumAcrossChannels=true, DIR dir=DIR.FWD, int nChannelsY=-1) |

| Calculates the sum of the values either across or within each channel (depending on the bSumAcrossChannels setting) of X and places the result in Y. More... | |

| void | channel_div (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY, int nMethod=1) |

| Divides the values of the channels from X and places the result in Y. More... | |

| void | channel_mul (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY, int nMethod=1) |

| Multiplies the values of the channels from X and places the result in Y. More... | |

| void | channel_mulv (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hA, long hX, long hC) |

| Multiplies the values in vector X by each channel in matrix A and places the result in matrix C. More... | |

| void | channel_scale (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hA, long hY) |

| Multiplies the values of the channels from X with the scalar values in A and places the result in Y. More... | |

| void | channel_dot (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hA, long hY) |

| Calculates the dot product of the values within each channel of X and places the result in Y. More... | |

| void | channel_duplicate (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY) |

| Duplicates each channel 'nInnerNum' times in the destination. More... | |

| void | channel_percentile (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY, double dfPercentile) |

| Calculates the percentile along axis = 0. More... | |

| void | channel_op_fwd (OP op, int nCount, int nC, int nN1, int nSD1, int nN2, int nSD2, long hA, long hB, long hY) |

| Performs a channel operation forward on the data. More... | |

| void | channel_op_bwd (OP op, int nCount, int nC, int nN1, int nSD1, int nN2, int nSD2, int nCy, int nSDy, long hA, long hB, long hY, long hAd, long hBd, long hYd, long hWork) |

| Performs a channel operation backward on the data. More... | |

| void | channel_add (int nCount, int nOuterNum, int nChannels, int nBlocks, int nInnerNum, int nOffset, long hX, long hY, DIR dir) |

| Add data along channels similar to numpy split function but where the data is added instead of copied. More... | |

| void | channel_copy (int nCount, int nOuterNum, int nChannels, int nBlocks, int nInnerNum, int nOffset, long hX, long hY, DIR dir) |

| Copy data along channels similar to numpy split function. More... | |

| void | channel_copyall (int nCount, int nOuterNum, int nChannels, int nInnerNum, long hX, long hY) |

| Copy all data from X (shape 1,c,sd) to each num in Y (shape n,c,sd). More... | |
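
The broadcast from shape (1,c,sd) to shape (n,c,sd) amounts to repeating one c x sd block n times. A hypothetical CPU sketch, assuming `nOuterNum` = n, `nChannels` = c and `nInnerNum` = sd (the listing does not spell out this mapping):

```csharp
using System;

static class ChannelCopyAllSketch
{
    // Hypothetical CPU reference: copies the single (c x sd) block in x
    // into each of the nOuterNum items of the returned array.
    public static float[] ChannelCopyAll(int nOuterNum, int nChannels, int nInnerNum, float[] x)
    {
        int nItem = nChannels * nInnerNum;
        float[] y = new float[nOuterNum * nItem];
        for (int n = 0; n < nOuterNum; n++)
            Array.Copy(x, 0, y, n * nItem, nItem);
        return y;
    }
}
```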

| void | sum (int nCount, int nOuterNum, int nInnerNum, long hX, long hY) |

| Calculates the sum of inner values of X and places the result in Y. More... | |

| void | rng_setseed (long lSeed) |

| Sets the random number generator seed used by random number operations. More... | |

| void | rng_uniform (int n, double fMin, double fMax, long hY) |

| Fill Y with random numbers using a uniform random distribution. More... | |

| void | rng_uniform (int n, float fMin, float fMax, long hY) |

| Fill Y with random numbers using a uniform random distribution. More... | |

| void | rng_uniform (int n, T fMin, T fMax, long hY) |

| Fill Y with random numbers using a uniform random distribution. More... | |

| void | rng_gaussian (int n, double fMu, double fSigma, long hY) |

| Fill Y with random numbers using a gaussian random distribution. More... | |

| void | rng_gaussian (int n, float fMu, float fSigma, long hY) |

| Fill Y with random numbers using a gaussian random distribution. More... | |

| void | rng_gaussian (int n, T fMu, T fSigma, long hY) |

| Fill Y with random numbers using a gaussian random distribution. More... | |

| void | rng_bernoulli (int n, double fNonZeroProb, long hY) |

| Fill Y with random numbers using a bernoulli random distribution. More... | |

| void | rng_bernoulli (int n, float fNonZeroProb, long hY) |

| Fill Y with random numbers using a bernoulli random distribution. More... | |

| void | rng_bernoulli (int n, T fNonZeroProb, long hY) |

| Fill Y with random numbers using a bernoulli random distribution. More... | |

| void | accuracy_fwd (int nCount, int nOuterNum, int nInnerNum, long hBottomData, long hBottomLabel, long hAccData, long hAccTotals, int? nIgnoreLabel, bool bLastElementOnly, int nBatch) |

| Performs the forward pass for the accuracy layer More... | |

| void | batchreidx_fwd (int nCount, int nInnerDim, long hBottomData, long hPermutData, long hTopData) |

| Performs the forward pass for batch re-index More... | |

| void | batchreidx_bwd (int nCount, int nInnerDim, long hTopDiff, long hTopIdx, long hBegins, long hCounts, long hBottomDiff) |

| Performs the backward pass for batch re-index More... | |

| void | embed_fwd (int nCount, long hBottomData, long hWeight, int nM, int nN, int nK, long hTopData) |

| Performs the forward pass for embed More... | |

| void | embed_bwd (int nCount, long hBottomData, long hTopDiff, int nM, int nN, int nK, long hWeightDiff) |

| Performs the backward pass for embed More... | |

| void | pooling_fwd (POOLING_METHOD method, int nCount, long hBottomData, int num, int nChannels, int nHeight, int nWidth, int nPooledHeight, int nPooledWidth, int nKernelH, int nKernelW, int nStrideH, int nStrideW, int nPadH, int nPadW, long hTopData, long hMask, long hTopMask) |

| Performs the forward pass for pooling using Cuda More... | |

| void | pooling_bwd (POOLING_METHOD method, int nCount, long hTopDiff, int num, int nChannels, int nHeight, int nWidth, int nPooledHeight, int nPooledWidth, int nKernelH, int nKernelW, int nStrideH, int nStrideW, int nPadH, int nPadW, long hBottomDiff, long hMask, long hTopMask) |

| Performs the backward pass for pooling using Cuda More... | |

| void | unpooling_fwd (POOLING_METHOD method, int nCount, long hBottomData, int num, int nChannels, int nHeight, int nWidth, int nPooledHeight, int nPooledWidth, int nKernelH, int nKernelW, int nStrideH, int nStrideW, int nPadH, int nPadW, long hTopData, long hMask) |

| Performs the forward pass for unpooling using Cuda More... | |

| void | unpooling_bwd (POOLING_METHOD method, int nCount, long hTopDiff, int num, int nChannels, int nHeight, int nWidth, int nPooledHeight, int nPooledWidth, int nKernelH, int nKernelW, int nStrideH, int nStrideW, int nPadH, int nPadW, long hBottomDiff, long hMask) |

| Performs the backward pass for unpooling using Cuda More... | |

| void | clip_fwd (int nCount, long hBottomData, long hTopData, T fMin, T fMax) |

| Performs a Clip forward pass in Cuda. More... | |

| void | clip_bwd (int nCount, long hTopDiff, long hBottomData, long hBottomDiff, T fMin, T fMax) |

| Performs a Clip backward pass in Cuda. More... | |

| void | math_fwd (int nCount, long hBottomData, long hTopData, MATH_FUNCTION function) |

| Performs a Math function forward pass in Cuda. More... | |

| void | math_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff, long hBottomData, MATH_FUNCTION function) |

| Performs a Math function backward pass in Cuda. More... | |

| void | mean_error_loss_bwd (int nCount, long hPredicted, long hTarget, long hBottomDiff, MEAN_ERROR merr) |

| Performs a Mean Error Loss backward pass in Cuda. More... | |

| void | mish_fwd (int nCount, long hBottomData, long hTopData, double dfThreshold) |

| Performs a Mish forward pass in Cuda. More... | |

| void | mish_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff, long hBottomData, double dfThreshold, int nMethod=0) |

| Performs a Mish backward pass in Cuda. More... | |

| void | gelu_fwd (int nCount, long hBottomData, long hTopData, bool bEnableBertVersion) |

| Performs a GELU forward pass in Cuda. More... | |

| void | gelu_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff, long hBottomData, bool bEnableBertVersion) |

| Performs a GELU backward pass in Cuda. More... | |

| void | silu_fwd (int nCount, long hBottomData, long hTopData) |

| Performs the Sigmoid-weighted Linear Unit (SiLU) activation forward pass in Cuda. More... | |

| void | silu_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff, long hBottomData) |

| Performs the Sigmoid-weighted Linear Unit (SiLU) activation backward pass in Cuda. More... | |

| void | softplus_fwd (int nCount, long hBottomData, long hTopData) |

| Performs the Softplus function forward, a smooth approximation of the ReLU function More... | |

| void | softplus_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff, long hBottomData) |

| Performs the Softplus function backward, a smooth approximation of the ReLU function More... | |
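
Softplus is conventionally defined as log(1 + exp(x)), with derivative sigmoid(x). The sketch below is a hypothetical CPU version of that textbook definition, written in a numerically stable form. Whether the GPU kernels use the same formulation is not stated in the listing.

```csharp
using System;

static class SoftplusSketch
{
    // Stable form of log(1 + exp(x)): max(x, 0) + log(1 + exp(-|x|)).
    public static double Forward(double x)
    {
        return Math.Max(x, 0.0) + Math.Log(1.0 + Math.Exp(-Math.Abs(x)));
    }

    // d/dx softplus(x) = sigmoid(x), so bottomDiff = topDiff * sigmoid(x).
    public static double Backward(double topDiff, double x)
    {
        double sigmoid = 1.0 / (1.0 + Math.Exp(-x));
        return topDiff * sigmoid;
    }
}
```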

| void | lecun_fwd (int nCount, long hBottomData, long hTopData) |

| Performs LeCun's Tanh function forward. More... | |

| void | lecun_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff, long hBottomData) |

| Performs LeCun's Tanh function backward. More... | |

| void | serf_fwd (int nCount, long hBottomData, long hTopData, double dfThreshold) |

| Performs a Serf forward pass in Cuda. More... | |

| void | serf_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff, long hBottomData, double dfThreshold) |

| Performs a Serf backward pass in Cuda. More... | |

| void | tanh_fwd (int nCount, long hBottomData, long hTopData) |

| Performs a TanH forward pass in Cuda. More... | |

| void | tanh_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff) |

| Performs a TanH backward pass in Cuda. More... | |

| void | sigmoid_fwd (int nCount, long hBottomData, long hTopData) |

| Performs a Sigmoid forward pass in Cuda. More... | |

| void | sigmoid_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff) |

| Performs a Sigmoid backward pass in Cuda. More... | |

| void | swish_bwd (int nCount, long hTopDiff, long hTopData, long hSigmoidOutputData, long hBottomDiff, double dfBeta) |

| Performs a Swish backward pass in Cuda. More... | |

| void | relu_fwd (int nCount, long hBottomData, long hTopData, T fNegativeSlope) |

| Performs a Rectified Linear Unit (ReLU) forward pass in Cuda. More... | |

| void | relu_bwd (int nCount, long hTopDiff, long hTopData, long hBottomDiff, T fNegativeSlope) |

| Performs a Rectified Linear Unit (ReLU) backward pass in Cuda. More... | |
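
The `fNegativeSlope` parameter suggests the leaky form of ReLU. The following hypothetical CPU sketch uses the standard leaky ReLU definition; it is an assumption that the GPU kernels follow it. The backward helper here takes the forward input `x`, whereas the listed `relu_bwd` takes `hTopData`; the two have the same sign whenever the slope is non-negative.

```csharp
using System;

static class ReluSketch
{
    // y = x when x > 0, otherwise negativeSlope * x (0 gives plain ReLU).
    public static float Forward(float x, float negativeSlope)
    {
        return x > 0 ? x : negativeSlope * x;
    }

    // Gradient passes through unchanged for x > 0 and is scaled by
    // negativeSlope otherwise.
    public static float Backward(float topDiff, float x, float negativeSlope)
    {
        return topDiff * (x > 0 ? 1f : negativeSlope);
    }
}
```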

| void | elu_fwd (int nCount, long hBottomData, long hTopData, double dfAlpha) |

| Performs an Exponential Linear Unit (ELU) forward pass in Cuda. More... | |

| void | elu_bwd (int nCount, long hTopDiff, long hTopData, long hBottomData, long hBottomDiff, double dfAlpha) |

| Performs an Exponential Linear Unit (ELU) backward pass in Cuda. More... | |

| void | dropout_fwd (int nCount, long hBottomData, long hMask, uint uiThreshold, T fScale, long hTopData) |

| Performs a dropout forward pass in Cuda. More... | |

| void | dropout_bwd (int nCount, long hTopDiff, long hMask, uint uiThreshold, T fScale, long hBottomDiff) |

| Performs a dropout backward pass in Cuda. More... | |

| void | bnll_fwd (int nCount, long hBottomData, long hTopData) |

| Performs a binomial normal log likelihood (BNLL) forward pass in Cuda. More... | |

| void | bnll_bwd (int nCount, long hTopDiff, long hBottomData, long hBottomDiff) |

| Performs a binomial normal log likelihood (BNLL) backward pass in Cuda. More... | |

| void | prelu_fwd (int nCount, int nChannels, int nDim, long hBottomData, long hTopData, long hSlopeData, int nDivFactor) |

| Performs a Parametric Rectified Linear Unit (PReLU) forward pass in Cuda. More... | |

| void | prelu_bwd_param (int nCDim, int nNum, int nTopOffset, long hTopDiff, long hBottomData, long hBackBuffDiff) |

| Performs a Parametric Rectified Linear Unit (PReLU) backward param pass in Cuda. More... | |

| void | prelu_bwd (int nCount, int nChannels, int nDim, long hTopDiff, long hBottomData, long hBottomDiff, long hSlopeData, int nDivFactor) |

| Performs a Parametric Rectified Linear Unit (PReLU) backward pass in Cuda. More... | |

| void | softmaxloss_fwd (int nCount, long hProbData, long hLabel, long hLossData, int nOuterNum, int nDim, int nInnerNum, long hCounts, int? nIgnoreLabel) |

| Performs Softmax Loss forward pass in Cuda. More... | |

| void | softmaxloss_bwd (int nCount, long hTopData, long hLabel, long hBottomDiff, int nOuterNum, int nDim, int nInnerNum, long hCounts, int? nIgnoreLabel) |

| Performs Softmax Loss backward pass in Cuda. More... | |

| void | nllloss_fwd (int nCount, long hProbData, long hLabel, long hLossData, int nOuterNum, int nDim, int nInnerNum, long hCounts, int? nIgnoreLabel) |

| Performs NLL Loss forward pass in Cuda. More... | |

| void | nllloss_bwd (int nCount, long hTopData, long hLabel, long hBottomDiff, int nOuterNum, int nDim, int nInnerNum, long hCounts, int? nIgnoreLabel) |

| Performs NLL Loss backward pass in Cuda. More... | |

| void | max_fwd (int nCount, long hBottomDataA, long hBottomDataB, int nIdx, long hTopData, long hMask) |

| Performs a max forward pass in Cuda. More... | |

| void | max_bwd (int nCount, long hTopDiff, int nIdx, long hMask, long hBottomDiff) |

| Performs a max backward pass in Cuda. More... | |

| void | min_fwd (int nCount, long hBottomDataA, long hBottomDataB, int nIdx, long hTopData, long hMask) |

| Performs a min forward pass in Cuda. More... | |

| void | min_bwd (int nCount, long hTopDiff, int nIdx, long hMask, long hBottomDiff) |

| Performs a min backward pass in Cuda. More... | |

| void | crop_fwd (int nCount, int nNumAxes, long hSrcStrides, long hDstStrides, long hOffsets, long hBottomData, long hTopData) |

| Performs the crop forward operation. More... | |

| void | crop_bwd (int nCount, int nNumAxes, long hSrcStrides, long hDstStrides, long hOffsets, long hBottomDiff, long hTopDiff) |

| Performs the crop backward operation. More... | |

| void | concat_fwd (int nCount, long hBottomData, int nNumConcats, int nConcatInputSize, int nTopConcatAxis, int nBottomConcatAxis, int nOffsetConcatAxis, long hTopData) |

| Performs a concat forward pass in Cuda. More... | |

| void | concat_bwd (int nCount, long hTopDiff, int nNumConcats, int nConcatInputSize, int nTopConcatAxis, int nBottomConcatAxis, int nOffsetConcatAxis, long hBottomDiff) |

| Performs a concat backward pass in Cuda. More... | |

| void | slice_fwd (int nCount, long hBottomData, int nNumSlices, int nSliceSize, int nBottomSliceAxis, int nTopSliceAxis, int nOffsetSliceAxis, long hTopData) |

| Performs a slice forward pass in Cuda. More... | |

| void | slice_bwd (int nCount, long hTopDiff, int nNumSlices, int nSliceSize, int nBottomSliceAxis, int nTopSliceAxis, int nOffsetSliceAxis, long hBottomDiff) |

| Performs a slice backward pass in Cuda. More... | |

| void | tile_fwd (int nCount, long hBottomData, int nInnerDim, int nTiles, int nBottomTileAxis, long hTopData) |

| Performs a tile forward pass in Cuda. More... | |

| void | tile_bwd (int nCount, long hTopDiff, int nTileSize, int nTiles, int nBottomTileAxis, long hBottomDiff) |

| Performs a tile backward pass in Cuda. More... | |

| void | bias_fwd (int nCount, long hBottomData, long hBiasData, int nBiasDim, int nInnerDim, long hTopData) |

| Performs a bias forward pass in Cuda. More... | |

| void | scale_fwd (int nCount, long hX, long hScaleData, int nScaleDim, int nInnerDim, long hY, long hBiasData=0) |

| Performs a scale forward pass in Cuda. More... | |

| void | threshold_fwd (int nCount, double dfThreshold, long hX, long hY) |

| Performs a threshold pass in Cuda. More... | |

| void | cll_bwd (int nCount, int nChannels, double dfMargin, bool bLegacyVersion, double dfAlpha, long hY, long hDiff, long hDistSq, long hBottomDiff) |

| Performs a contrastive loss layer backward pass in Cuda. More... | |

| void | smoothl1_fwd (int nCount, long hX, long hY) |

| Performs the forward operation for the SmoothL1 loss. More... | |

| void | smoothl1_bwd (int nCount, long hX, long hY) |

| Performs the backward operation for the SmoothL1 loss. More... | |

| void | permute (int nCount, long hBottom, bool bFwd, long hPermuteOrder, long hOldSteps, long hNewSteps, int nNumAxes, long hTop) |

| Performs data permutation on the input and reorders the data which is placed in the output. More... | |

| void | gather_fwd (int nCount, long hBottom, long hTop, int nAxis, int nDim, int nDimAtAxis, int nM, int nN, long hIdx) |

| Performs a gather forward pass where data at specified indexes along a given axis are copied to the output data. More... | |

| void | gather_bwd (int nCount, long hTop, long hBottom, int nAxis, int nDim, int nDimAtAxis, int nM, int nN, long hIdx) |

| Performs a gather backward pass where data at specified indexes along a given axis are copied to the output data. More... | |

| void | lrn_fillscale (int nCount, long hBottomData, int nNum, int nChannels, int nHeight, int nWidth, int nSize, T fAlphaOverSize, T fK, long hScaleData) |

| Performs the fill scale operation used to calculate the LRN cross channel forward pass in Cuda. More... | |

| void | lrn_computeoutput (int nCount, long hBottomData, long hScaleData, T fNegativeBeta, long hTopData) |

| Computes the output used to calculate the LRN cross channel forward pass in Cuda. More... | |

| void | lrn_computediff (int nCount, long hBottomData, long hTopData, long hScaleData, long hTopDiff, int nNum, int nChannels, int nHeight, int nWidth, int nSize, T fNegativeBeta, T fCacheRatio, long hBottomDiff) |

| Computes the diff used to calculate the LRN cross channel backward pass in Cuda. More... | |

| void | sgd_update (int nCount, long hNetParamsDiff, long hHistoryData, T fMomentum, T fLocalRate) |

| Performs the Stochastic Gradient Descent (SGD) update. More... | |

| void | nesterov_update (int nCount, long hNetParamsDiff, long hHistoryData, T fMomentum, T fLocalRate) |

| Performs the Nesterov update. More... | |

| void | adagrad_update (int nCount, long hNetParamsDiff, long hHistoryData, T fDelta, T fLocalRate) |

| Performs the AdaGrad update. More... | |

| void | adadelta_update (int nCount, long hNetParamsDiff, long hHistoryData1, long hHistoryData2, T fMomentum, T fDelta, T fLocalRate) |

| Performs the AdaDelta update. More... | |

| void | adam_update (int nCount, long hNetParamsDiff, long hValM, long hValV, T fBeta1, T fBeta2, T fEpsHat, T fLearningRate, T fCorrection) |

| Performs the Adam update. More... | |

| void | adamw_update (int nCount, long hNetParamsDiff, long hValM, long hValV, T fBeta1, T fBeta2, T fEpsHat, T fLearningRate, T fDecayRate, long hNetParamsData, int nStep) |

| Performs the AdamW update. More... | |

| void | rmsprop_update (int nCount, long hNetParamsDiff, long hHistoryData, T fRmsDecay, T fDelta, T fLocalRate) |

| Performs the RMSProp update. More... | |

| void | lstm_fwd (int t, int nN, int nH, int nI, long hWeight_h, long hWeight_i, long hClipData, int nClipOffset, long hTopData, int nTopOffset, long hCellData, int nCellOffset, long hPreGateData, int nPreGateOffset, long hGateData, int nGateOffset, long hHT1Data, int nHT1Offset, long hCT1Data, int nCT1Offset, long hHtoGateData, long hContext=0, long hWeight_c=0, long hCtoGetData=0) |

| Performs the simple LSTM forward pass in Cuda. More... | |

| void | lstm_bwd (int t, int nN, int nH, int nI, double dfClippingThreshold, long hWeight_h, long hClipData, int nClipOffset, long hTopDiff, int nTopOffset, long hCellData, long hCellDiff, int nCellOffset, long hPreGateDiff, int nPreGateOffset, long hGateData, long hGateDiff, int nGateOffset, long hCT1Data, int nCT1Offset, long hDHT1Diff, int nDHT1Offset, long hDCT1Diff, int nDCT1Offset, long hHtoHData, long hContextDiff=0, long hWeight_c=0) |

| Performs the simple LSTM backward pass in Cuda. More... | |

| void | lstm_unit_fwd (int nCount, int nHiddenDim, int nXCount, long hX, long hX_acts, long hC_prev, long hCont, long hC, long hH) |

| Performs the simple LSTM forward pass in Cuda for a given LSTM unit. More... | |

| void | lstm_unit_bwd (int nCount, int nHiddenDim, int nXCount, long hC_prev, long hX_acts, long hC, long hH, long hCont, long hC_diff, long hH_diff, long hC_prev_diff, long hX_acts_diff, long hX_diff) |

| Performs the simple LSTM backward pass in Cuda for a given LSTM unit. More... | |

| void | coeff_sum_fwd (int nCount, int nDim, int nNumOffset, double dfCoeff, long hCoeffData, long hBottom, long hTop) |

| Performs a coefficient sum forward pass in Cuda. More... | |

| void | coeff_sum_bwd (int nCount, int nDim, int nNumOffset, double dfCoeff, long hCoeffData, long hTopDiff, long hBottomDiff) |

| Performs a coefficient sum backward pass in Cuda. More... | |

| void | coeff_sub_fwd (int nCount, int nDim, int nNumOffset, double dfCoeff, long hCoeffData, long hBottom, long hTop) |

| Performs a coefficient sub forward pass in Cuda. More... | |

| void | coeff_sub_bwd (int nCount, int nDim, int nNumOffset, double dfCoeff, long hCoeffData, long hTopDiff, long hBottomDiff) |

| Performs a coefficient sub backward pass in Cuda. More... | |

| void | sigmoid_cross_entropy_fwd (int nCount, long hInput, long hTarget, long hLoss, bool bHasIgnoreLabel, int nIgnoreLabel, long hCountData) |

| Performs a sigmoid cross entropy forward pass in Cuda. More... | |

| void | sigmoid_cross_entropy_bwd (int nCount, int nIgnoreLabel, long hTarget, long hBottomDiff) |

| Performs a sigmoid cross entropy backward pass in Cuda when an ignore label is specified. More... | |

| void | softmax_cross_entropy_fwd (int nCount, long hProbData, long hLabel, long hLossDiff, long hLossData, int nOuterNum, int nDim, int nInnerNum, long hCounts, int? nIgnoreLabel) |

| Performs a softmax cross entropy forward pass in Cuda. More... | |

| void | softmax_cross_entropy_bwd (int nCount, int nIgnoreLabel, long hTarget, long hBottomDiff) |

| Performs a softmax cross entropy backward pass in Cuda when an ignore label is specified. More... | |

| void | debug () |

| The debug function is used only when debugging the debug version of the low-level DLL. More... | |

| void | matrix_meancenter_by_column (int nWidth, int nHeight, long hA, long hB, long hY, bool bNormalize=false) |

| Mean center the data by columns, where each column is averaged and its mean is then subtracted from each column value. More... | |

| void | gaussian_blur (int n, int nChannels, int nHeight, int nWidth, double dfSigma, long hX, long hY) |

| The gaussian_blur runs a Gaussian blurring operation over each channel of the data using the sigma. More... | |

| double | hamming_distance (int n, double dfThreshold, long hA, long hB, long hY, int nOffA=0, int nOffB=0, int nOffY=0) |

| The hamming_distance calculates the Hamming Distance between X and Y both of length n. More... | |

| void | calc_dft_coefficients (int n, long hX, int m, long hY) |

| Calculates the discrete Fourier Transform (DFT) coefficients across the frequencies 1...n/2 (Nyquist limit) for the array of values in host memory referred to by hX. Return values are placed in the host memory referenced by hY. More... | |

| double[] | calculate_batch_distances (DistanceMethod distMethod, double dfThreshold, int nItemDim, long hSrc, long hTargets, long hWork, int[,] rgOffsets) |

| The calculate_batch_distances method calculates a set of distances based on the DistanceMethod specified. More... | |

| void | ReportMemory (Log log, string strLocation) |

| Report the memory use on the current GPU managed by the CudaDnn object. More... | |

Static Public Member Functions | |

| static string | GetCudaDnnDllPath () |

| Returns the path to the CudaDnnDll module to use for low level CUDA processing. More... | |

| static void | SetDefaultCudaPath (string strPath) |

| Used to optionally set the default path to the Low-Level Cuda Dnn DLL file. More... | |

| static ulong | basetype_size (bool bUseHalfSize) |

| Returns the base type size in bytes. More... | |

| static ulong | ConvertByteSizeToCount (ulong ulSizeInBytes) |

| Converts the byte size into the number of items in the base data type of float or double. More... | |

Protected Member Functions | |

| virtual void | Dispose (bool bDisposing) |

| Disposes this instance freeing up all of its host and GPU memory. More... | |

Properties | |

| ulong | TotalMemoryUsed [get] |

| Returns the total amount of GPU memory used by this instance. More... | |

| string | TotalMemoryUsedAsText [get] |

| Returns the total amount of memory used. More... | |

| long | KernelHandle [get] |

| Returns the Low-Level kernel handle used for this instance. Each Low-Level kernel maintains its own set of look-up tables for memory, streams, cuDnn constructs, etc. More... | |

| string | Path [get] |

| Specifies the file path used to load the Low-Level Cuda DNN Dll file. More... | |

| static string | DefaultPath [get] |

| Specifies the default path used to load the Low-Level Cuda DNN Dll file. More... | |

| int | OriginalDeviceID [get] |