MyCaffe 1.12.2.41

Deep learning software for Windows C# programmers.

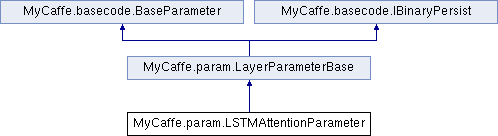

Specifies the parameters for the LSTMAttentionLayer, which provides an attention-based LSTM layer used for decoding in an encoder/decoder model.

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | LSTMAttentionParameter () | Constructor for the parameter. |
| override object | Load (System.IO.BinaryReader br, bool bNewInstance=true) | Load the parameter from a binary reader. |
| override void | Copy (LayerParameterBase src) | Copy one parameter to another. |
| override LayerParameterBase | Clone () | Creates a new copy of this instance of the parameter. |
| override RawProto | ToProto (string strName) | Convert the parameter into a RawProto. |

Public Member Functions inherited from MyCaffe.param.LayerParameterBase

| Return type | Member | Description |
|---|---|---|
| | LayerParameterBase () | Constructor for the parameter. |
| virtual string | PrepareRunModelInputs () | Gives derived classes a chance to specify the model inputs required by the run model. |
| virtual void | PrepareRunModel (LayerParameter p) | Gives derived classes a chance to prepare the layer for a run model. |
| void | Save (BinaryWriter bw) | Save this parameter to a binary writer. |
| abstract object | Load (BinaryReader br, bool bNewInstance=true) | Load the parameter from a binary reader. |

Public Member Functions inherited from MyCaffe.basecode.BaseParameter

| Return type | Member | Description |
|---|---|---|
| | BaseParameter () | Constructor for the parameter. |
| virtual bool | Compare (BaseParameter p) | Compare this parameter to another parameter. |

Static Public Member Functions

| Return type | Member | Description |
|---|---|---|
| static LSTMAttentionParameter | FromProto (RawProto rp) | Parses the parameter from a RawProto. |

Static Public Member Functions inherited from MyCaffe.basecode.BaseParameter

| Return type | Member | Description |
|---|---|---|
| static double | ParseDouble (string strVal) | Parses a double value. The US culture is used when the decimal separator is '.'; otherwise the native culture is tried first, with the US culture as a last fallback so that prototypes written with '.' still parse in a culture that does not use '.' as the decimal separator. |
| static bool | TryParse (string strVal, out double df) | Tries to parse a double value using the same culture fallback as ParseDouble. |
| static float | ParseFloat (string strVal) | Parses a float value using the same culture fallback as ParseDouble. |
| static bool | TryParse (string strVal, out float f) | Tries to parse a float value using the same culture fallback as ParseDouble. |
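The culture fallback described for the parse helpers can be sketched as follows. This is a minimal illustration of the described behavior, not the MyCaffe source: the class `CultureSafeParse` and the method `TryParseDouble` are hypothetical names, and the choice of `NumberStyles.Float` is an assumption made so that a '.' is never silently read as a thousands separator.

```csharp
using System;
using System.Globalization;

// Hypothetical helper illustrating the documented fallback order.
public static class CultureSafeParse
{
    public static bool TryParseDouble(string strVal, out double df)
    {
        CultureInfo us = CultureInfo.GetCultureInfo("en-US");
        CultureInfo native = CultureInfo.CurrentCulture;

        // Case 1: the native culture already uses '.', so parse as US.
        if (native.NumberFormat.NumberDecimalSeparator == ".")
            return double.TryParse(strVal, NumberStyles.Float, us, out df);

        // Case 2: try the native culture first (e.g. "0,5" in de-DE).
        // NumberStyles.Float does not allow group separators, so "0.5"
        // fails here instead of being misread as 5.
        if (double.TryParse(strVal, NumberStyles.Float, native, out df))
            return true;

        // Case 3: fall back to US to handle prototypes written with '.'.
        return double.TryParse(strVal, NumberStyles.Float, us, out df);
    }
}
```

With the current culture set to one that uses ',' as the decimal separator, both "0,5" and "0.5" should parse to the same value under this sketch.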

Properties

| Type | Property | Description |
|---|---|---|
| uint | num_output [get, set] | Specifies the number of outputs for the layer. |
| uint | num_output_ip [get, set] | Specifies the number of inner product (IP) outputs for the layer. When 0, no inner product is performed. |
| double | clipping_threshold [get, set] | Specifies the gradient clipping threshold, default = 0.0 (i.e. no clipping). |
| FillerParameter | weight_filler [get, set] | Specifies the filler parameters for the weight filler. |
| FillerParameter | bias_filler [get, set] | Specifies the filler parameters for the bias filler. |
| bool | enable_clockwork_forgetgate_bias [get, set] | When enabled, the forget gate bias is set to 5.0. |
| bool | enable_attention [get, set] | (default=false) When enabled, attention is applied to the input state on each cycle through the LSTM. Attention is used with encoder/decoder models. When disabled, this layer operates like a standard LSTM layer with input in the shape T,B,I, where T=timesteps, B=batch and I=input. |
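A short usage sketch for the members listed above. It uses only the constructor, properties, `ToProto` and `FromProto` documented on this page. The values are illustrative, the helper class `LstmAttentionParamExample` is hypothetical, the name passed to `ToProto` is illustrative, and the `MyCaffe.basecode` namespace for `RawProto` is an assumption.

```csharp
using MyCaffe.basecode;   // assumed namespace of RawProto
using MyCaffe.param;

// Hypothetical example class; requires a reference to the MyCaffe assemblies.
public static class LstmAttentionParamExample
{
    // Builds a parameter with illustrative values.
    public static LSTMAttentionParameter Build()
    {
        LSTMAttentionParameter p = new LSTMAttentionParameter();
        p.num_output = 128;                         // number of outputs (illustrative)
        p.num_output_ip = 0;                        // 0 = no inner product is performed
        p.clipping_threshold = 5.0;                 // 0.0 would mean no clipping
        p.enable_clockwork_forgetgate_bias = true;  // forget gate bias is set to 5.0
        p.enable_attention = true;                  // decode with attention
        return p;
    }

    // Converts the parameter to a RawProto and parses it back.
    public static LSTMAttentionParameter RoundTrip(LSTMAttentionParameter p)
    {
        RawProto proto = p.ToProto("lstm_attention_param"); // name is illustrative
        return LSTMAttentionParameter.FromProto(proto);
    }
}
```

The weight and bias fillers are left at whatever the constructor assigns, since their defaults are not stated on this page.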

Additional Inherited Members

Public Types inherited from MyCaffe.param.LayerParameterBase

| Kind | Type | Description |
|---|---|---|
| enum | LABEL_TYPE { NONE , SINGLE , MULTIPLE , ONLY_ONE } | Defines the label type. |

Specifies the parameters for the LSTMAttentionLayer, which provides an attention-based LSTM layer used for decoding in an encoder/decoder model.

The AttentionLayer implementation was inspired by the C# Seq2SeqLearn implementation by mashmawy for language translation, and by the C# ChatBot implementation by HectorPulido, which uses Seq2SeqLearn.

Definition at line 28 of file LSTMAttentionParameter.cs.

| MyCaffe.param.LSTMAttentionParameter.LSTMAttentionParameter | ( | ) |

Constructor for the parameter.

Definition at line 39 of file LSTMAttentionParameter.cs.

| override LayerParameterBase MyCaffe.param.LSTMAttentionParameter.Clone | ( | ) | virtual |

Creates a new copy of this instance of the parameter.

Implements MyCaffe.param.LayerParameterBase.

Definition at line 146 of file LSTMAttentionParameter.cs.

| override void MyCaffe.param.LSTMAttentionParameter.Copy | ( | LayerParameterBase src | ) | virtual |

Copy one parameter to another.

| src | Specifies the parameter to copy. |

Implements MyCaffe.param.LayerParameterBase.

Definition at line 132 of file LSTMAttentionParameter.cs.

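| static LSTMAttentionParameter MyCaffe.param.LSTMAttentionParameter.FromProto | ( | RawProto rp | ) | static |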

Parses the parameter from a RawProto.

| rp | Specifies the RawProto to parse. |

Definition at line 184 of file LSTMAttentionParameter.cs.

| override object MyCaffe.param.LSTMAttentionParameter.Load | ( | System.IO.BinaryReader br, bool bNewInstance = true | ) |

Load the parameter from a binary reader.

| br | Specifies the binary reader. |

| bNewInstance | When true a new instance is created (the default), otherwise the existing instance is loaded from the binary reader. |

Definition at line 120 of file LSTMAttentionParameter.cs.

| override RawProto MyCaffe.param.LSTMAttentionParameter.ToProto | ( | string strName | ) | virtual |

Convert the parameter into a RawProto.

| strName | Specifies the name to associate with the RawProto. |

Implements MyCaffe.basecode.BaseParameter.

Definition at line 158 of file LSTMAttentionParameter.cs.

| FillerParameter MyCaffe.param.LSTMAttentionParameter.bias_filler | get, set |

Specifies the filler parameters for the bias filler.

Definition at line 89 of file LSTMAttentionParameter.cs.

| double MyCaffe.param.LSTMAttentionParameter.clipping_threshold | get, set |

Specifies the gradient clipping threshold, default = 0.0 (i.e. no clipping).

Definition at line 67 of file LSTMAttentionParameter.cs.
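To make the `clipping_threshold` semantics concrete, here is a minimal sketch of one common form of gradient clipping, element-wise clamping to the range [-threshold, threshold]. This page does not state how the layer applies the threshold, so the clamping rule is an assumption, and `GradientClipSketch` and `ClipInPlace` are hypothetical names. The only behavior taken from the description above is that a threshold of 0.0 means no clipping.

```csharp
using System;

// Hypothetical sketch; the layer's actual clipping rule may differ.
public static class GradientClipSketch
{
    // Clamps each gradient value to [-threshold, threshold].
    // A threshold of 0.0 (the documented default) leaves the values unchanged.
    public static void ClipInPlace(double[] grad, double threshold)
    {
        if (threshold <= 0.0)
            return; // no clipping

        for (int i = 0; i < grad.Length; i++)
            grad[i] = Math.Max(-threshold, Math.Min(threshold, grad[i]));
    }
}
```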

| bool MyCaffe.param.LSTMAttentionParameter.enable_attention | get, set |

(default=false) When enabled, attention is applied to the input state on each cycle through the LSTM. Attention is used with encoder/decoder models. When disabled, this layer operates like a standard LSTM layer with input in the shape T,B,I, where T=timesteps, B=batch and I=input.

Definition at line 113 of file LSTMAttentionParameter.cs.
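The T,B,I input shape mentioned for `enable_attention` can be illustrated with a small indexing sketch. It assumes the input is stored as a flat, row-major buffer with T as the slowest-varying axis. That layout is an assumption and is not stated on this page, and `TbiIndex` is a hypothetical helper.

```csharp
// Hypothetical helper; assumes a flat row-major buffer of shape (T, B, I).
public static class TbiIndex
{
    // Returns the flat offset of element (t, b, i), where
    // nBatch is the batch size B and nInput is the input size I.
    public static int Offset(int t, int b, int i, int nBatch, int nInput)
    {
        return (t * nBatch + b) * nInput + i;
    }

    // Returns the total number of elements in a (T, B, I) buffer.
    public static int Count(int nSteps, int nBatch, int nInput)
    {
        return nSteps * nBatch * nInput;
    }
}
```

Under this layout, all inputs for one timestep are contiguous, so stepping the LSTM through time advances the offset by B * I elements per step.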

| bool MyCaffe.param.LSTMAttentionParameter.enable_clockwork_forgetgate_bias | get, set |

When enabled, the forget gate bias is set to 5.0.

Definition at line 102 of file LSTMAttentionParameter.cs.
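A brief numeric sketch of why a forget gate bias of 5.0 matters, as set by `enable_clockwork_forgetgate_bias`. In a standard LSTM the forget gate passes its pre-activation through a logistic sigmoid. Assuming this layer does the same (not confirmed on this page), a bias of 5.0 with an otherwise zero pre-activation puts the gate close to 1, so the cell state is mostly retained early in training. `ForgetGateBiasSketch` is a hypothetical name.

```csharp
using System;

// Hypothetical sketch of the standard logistic sigmoid used by LSTM gates.
public static class ForgetGateBiasSketch
{
    public static double Sigmoid(double x)
    {
        return 1.0 / (1.0 + Math.Exp(-x));
    }

    // Gate activation when the weighted input is zero and only the bias acts.
    public static double GateFromBiasOnly(double bias)
    {
        return Sigmoid(bias);
    }
}
```

With a bias of 0.0 the gate starts at 0.5 and half of the cell state is discarded each step. With a bias of 5.0 the gate starts above 0.99.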

| uint MyCaffe.param.LSTMAttentionParameter.num_output | get, set |

Specifies the number of outputs for the layer.

Definition at line 47 of file LSTMAttentionParameter.cs.

| uint MyCaffe.param.LSTMAttentionParameter.num_output_ip | get, set |

Specifies the number of IP outputs for the layer. Note, when 0, no inner product is performed.

Definition at line 57 of file LSTMAttentionParameter.cs.

| FillerParameter MyCaffe.param.LSTMAttentionParameter.weight_filler | get, set |

Specifies the filler parameters for the weight filler.

Definition at line 78 of file LSTMAttentionParameter.cs.