
MyCaffe

1.12.2.41

Deep learning software for Windows C# programmers.


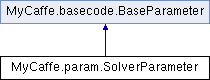

MyCaffe.param.SolverParameter Class Reference

The SolverParameter is a parameter for the solver, specifying the train and test networks.

Public Types

| Type | Name | Description |
|---|---|---|
| enum | EvaluationType { CLASSIFICATION, DETECTION } | Defines the evaluation method used in the SSD algorithm. |
| enum | SnapshotFormat { BINARYPROTO = 1 } | Defines the format of each snapshot. |
| enum | SolverType { SGD = 0, NESTEROV = 1, ADAGRAD = 2, RMSPROP = 3, ADADELTA = 4, ADAM = 5, LBFGS = 6, ADAMW = 7, _MAX = 8 } | Defines the type of solver. |
| enum | LearningRatePolicyType { FIXED, STEP, EXP, INV, MULTISTEP, POLY, SIGMOID } | Defines the learning rate policy to use. |
| enum | RegularizationType { NONE, L1, L2 } | Defines the regularization type. When enabled, weight_decay is used. |

Public Member Functions

| Type | Name | Description |
|---|---|---|
|  | SolverParameter () | The SolverParameter constructor. |
| SolverParameter | Clone () | Creates a new copy of the SolverParameter. |
| override RawProto | ToProto (string strName) | Converts the SolverParameter into a RawProto. |
| string | DebugString () | Returns a debug string for the SolverParameter. |

Public Member Functions inherited from MyCaffe.basecode.BaseParameter

| Type | Name | Description |
|---|---|---|
|  | BaseParameter () | Constructor for the parameter. |
| virtual bool | Compare (BaseParameter p) | Compares this parameter to another parameter. |

Static Public Member Functions

| Type | Name | Description |
|---|---|---|
| static SolverParameter | FromProto (RawProto rp) | Parses a new SolverParameter from a RawProto. |

Static Public Member Functions inherited from MyCaffe.basecode.BaseParameter

| Type | Name | Description |
|---|---|---|
| static double | ParseDouble (string strVal) | Parses a double value using the US culture if the decimal separator is '.', otherwise using the native culture, and finally falling back to the US culture to handle prototypes that use '.' as the separator but are parsed in a culture that does not use '.' as its decimal separator. |
| static bool | TryParse (string strVal, out double df) | Tries to parse a double value using the same culture fallback as ParseDouble. |
| static float | ParseFloat (string strVal) | Parses a float value using the same culture fallback as ParseDouble. |
| static bool | TryParse (string strVal, out float f) | Tries to parse a float value using the same culture fallback as ParseDouble. |
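The culture fallback described for ParseDouble and TryParse can be sketched as follows. This is a minimal illustration, not MyCaffe's source: the class name `CultureFallbackParser` and the explicit `native` culture argument are hypothetical (added so the behavior can be tested), and `CultureInfo.InvariantCulture` stands in for the US culture because both use '.' as the decimal separator.

```csharp
using System.Globalization;

// Hypothetical helper illustrating the culture fallback described for
// BaseParameter.TryParse; it is not MyCaffe's actual implementation.
public static class CultureFallbackParser
{
    // The invariant culture stands in for en-US: both use '.' as the decimal separator.
    private static readonly CultureInfo s_dot = CultureInfo.InvariantCulture;

    public static bool TryParseDouble(string strVal, CultureInfo native, out double df)
    {
        // 1) The native culture already uses '.', so parse with the '.'-based culture.
        if (native.NumberFormat.NumberDecimalSeparator == ".")
            return double.TryParse(strVal, NumberStyles.Float, s_dot, out df);

        // 2) Otherwise try the native culture first (e.g. "0,5" where ',' is the decimal separator).
        if (double.TryParse(strVal, NumberStyles.Float, native, out df))
            return true;

        // 3) Finally fall back to '.' to handle prototxt values such as "0.5"
        //    parsed in a culture that does not use '.' as its decimal separator.
        return double.TryParse(strVal, NumberStyles.Float, s_dot, out df);
    }
}
```

Because NumberStyles.Float does not include AllowThousands, a '.' in a ','-decimal culture makes step 2 fail cleanly rather than being misread as a group separator.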

Properties

| Type | Name | Description |
|---|---|---|
| bool | output_average_results [getset] | Specifies to average loss results before they are output; this can be faster when there are many results in a cycle. |
| string | custom_trainer [getset] | Specifies the name of the custom trainer (if any). This optional setting is used by external software to provide a customized training process. Each custom trainer must implement the IDnnCustomTraininer interface, which contains a 'Name' property; the name returned from this property is the value set here as the 'custom_trainer'. |
| string | custom_trainer_properties [getset] | Specifies the custom trainer properties (if any). This optional setting is used by external software to provide the properties for a customized training process. |
| NetParameter | net_param [getset] | Inline train net param, possibly combined with one or more test nets. |
| NetParameter | train_net_param [getset] | Inline train net param. |
| List< NetParameter > | test_net_param [getset] | Inline test net params. |
| NetState | train_state [getset] | The states for the train/test nets. Must be unspecified or specified once per net. |
| List< NetState > | test_state [getset] | The states for the train/test nets. Must be unspecified or specified once per net. |
| List< int > | test_iter [getset] | The number of iterations for each test. |
| int | test_interval [getset] | The number of iterations between two testing phases. |
| bool | test_compute_loss [getset] | Specifies whether to compute the loss during testing. |
| bool | test_initialization [getset] | If true, run an initial test pass before the first iteration, ensuring memory availability and printing the starting value of the loss. |
| double | base_lr [getset] | The base learning rate (default = 0.01). |
| int | display [getset] | The number of iterations between displaying info. If display = 0, no info is displayed. |
| int | average_loss [getset] | Display the loss averaged over the last average_loss iterations. |
| int | max_iter [getset] | The maximum number of iterations. |
| int | iter_size [getset] | Accumulate gradients over 'iter_size' x 'batch_size' instances. |
| LearningRatePolicyType | LearningRatePolicy [getset] | The learning rate decay policy. |
| string | lr_policy [getset] | The learning rate decay policy. |
| double | gamma [getset] | Specifies the 'gamma' parameter used to compute the learning rate for the 'step', 'multistep', 'exp', 'inv', and 'sigmoid' policies (default = 0.1). |
| double | power [getset] | The 'power' parameter used to compute the learning rate for the 'inv' and 'poly' policies. |
| double | momentum [getset] | Specifies the momentum value, used by all solvers EXCEPT the 'AdaGrad' and 'RMSProp' solvers. For these latter solvers, momentum should be 0. |
| double | weight_decay [getset] | Specifies the weight decay (default = 0.0005). |
| RegularizationType | Regularization [getset] | Specifies the regularization type (default = L2). |
| string | regularization_type [getset] | Specifies the regularization type (default = 'L2'). |
| int | stepsize [getset] | The step size for learning rate policy 'step'. |
| List< int > | stepvalue [getset] | The step values for learning rate policy 'multistep'. |
| double | clip_gradients [getset] | Set clip_gradients to >= 0 to clip parameter gradients to that L2 norm whenever their actual L2 norm is larger. |
| bool | enable_clip_gradient_status [getset] | Optionally, enable status output when gradients are clipped (default = true). |
| int | snapshot [getset] | Specifies the snapshot interval. |
| string | snapshot_prefix [getset] | The prefix for the snapshot. |
| bool | snapshot_diff [getset] | Whether to snapshot diff in the results or not. Snapshotting diff helps debugging, but the final protocol buffer size will be much larger. |
| SnapshotFormat | snapshot_format [getset] | The snapshot format. |
| bool | snapshot_include_weights [getset] | Specifies whether or not the snapshot includes the trained weights. The default = true. |
| bool | snapshot_include_state [getset] | Specifies whether or not the snapshot includes the solver state. The default = false. Including the solver state slows down each snapshot. |
| int | device_id [getset] | The device id that will be used when run on the GPU. |
| long | random_seed [getset] | If non-negative, the seed with which the Solver initializes the Caffe random number generator, which is useful for reproducible results. Otherwise (and by default), a seed derived from the system clock is used. |
| SolverType | type [getset] | Specifies the solver type. |
| double | delta [getset] | Numerical stability term for the RMSProp, AdaGrad, AdaDelta, Adam and AdamW solvers (default = 1e-08). |
| double | momentum2 [getset] | An additional momentum property for the Adam and AdamW solvers (default = 0.999). |
| double | rms_decay [getset] | Specifies the 'RMSProp' decay value used by the 'RMSProp' solver (default = 0.95). |
| double | adamw_decay [getset] | Specifies the 'AdamW' detached weight decay value used by the 'AdamW' solver (default = 0.1). |
| bool | debug_info [getset] | If true, print information about the state of the net that may help with debugging learning problems. |
| int | lbgfs_corrections [getset] | Specifies the number of L-BFGS corrections used with the L-BFGS solver. |
| bool | snapshot_after_train [getset] | If false, don't save a snapshot after training finishes. |
| EvaluationType | eval_type [getset] | Specifies the evaluation type to use when using Single-Shot Detection (SSD) (default = NONE, SSD not used). |
| ApVersion | ap_version [getset] | Specifies the AP Version to use for average precision when using Single-Shot Detection (SSD) (default = INTEGRAL). |
| bool | show_per_class_result [getset] | Specifies whether or not to display results per class when using Single-Shot Detection (SSD) (default = false). |
| int | accuracy_average_window [getset] | Specifies the window over which to average the accuracies (default = 0, which disables averaging). |

The SolverParameter is a parameter for the solver, specifying the train and test networks.

Exactly one train net must be specified using one of the following fields: train_net_param, train_net, net_param, net.

One or more test nets may be specified using any of the following fields: test_net_param, test_net, net_param, net.

If more than one test net field is specified (e.g., both net and test_net are specified), they are evaluated in the field order given above: (1) test_net_param, (2) test_net, (3) net_param/net.

A test_iter must be specified for each test_net. A test_level and/or test_stage may also be specified for each test_net.

Definition at line 31 of file SolverParameter.cs.

| enum MyCaffe.param.SolverParameter.EvaluationType |

Defines the evaluation method used in the SSD algorithm.

| Enumerator | Description |
|---|---|
| CLASSIFICATION | Specifies to run a standard classification evaluation. |
| DETECTION | Specifies detection evaluation used in the SSD algorithm. |

Definition at line 86 of file SolverParameter.cs.

| enum MyCaffe.param.SolverParameter.LearningRatePolicyType |

Defines the learning rate policy to use.

Definition at line 181 of file SolverParameter.cs.

| enum MyCaffe.param.SolverParameter.RegularizationType |

Defines the regularization type. When enabled, weight_decay is used.

| Enumerator | Description |
|---|---|
| NONE | Specifies to not use regularization. |
| L1 | Specifies L1 regularization. |
| L2 | Specifies L2 regularization. |

Definition at line 216 of file SolverParameter.cs.

| enum MyCaffe.param.SolverParameter.SnapshotFormat |

Defines the format of each snapshot.

| Enumerator | Description |
|---|---|
| BINARYPROTO | Save snapshots in the binary protocol buffer (binaryproto) format. |

Definition at line 101 of file SolverParameter.cs.

| enum MyCaffe.param.SolverParameter.SolverType |

Defines the type of solver.

| Enumerator | Description |
|---|---|
| SGD | Use the Stochastic Gradient Descent solver with momentum, which updates the weights by a linear combination of the negative gradient and the previous weight update. |
| NESTEROV | Use Nesterov's accelerated gradient, which is similar to SGD, but the error gradient is computed on the weights with the momentum already added. |
| ADAGRAD | Use AdaGrad, a gradient-based optimization method like SGD that tries to find very predictive but rarely seen features. |
| RMSPROP | Use RMSProp gradient-based optimization, similar to SGD. |
| ADADELTA | Use AdaDelta gradient-based optimization, similar to SGD. See "ADADELTA: An Adaptive Learning Rate Method" by Matthew D. Zeiler, 2012. |
| ADAM | Use Adam gradient-based optimization, similar to SGD, which includes 'adaptive moment estimation' and can be thought of as a generalization of AdaGrad. |
| LBFGS | Use the L-BFGS solver based on the minFunc implementation by Mark Schmidt. |
| ADAMW | Use AdamW gradient-based optimization, similar to Adam but with a detached weight decay. |

Definition at line 112 of file SolverParameter.cs.

| MyCaffe.param.SolverParameter.SolverParameter | ( | ) |

The SolverParameter constructor.

Definition at line 235 of file SolverParameter.cs.

| SolverParameter MyCaffe.param.SolverParameter.Clone | ( | ) |

Creates a new copy of the SolverParameter.

Definition at line 244 of file SolverParameter.cs.

| string MyCaffe.param.SolverParameter.DebugString | ( | ) |

Returns a debug string for the SolverParameter.

Definition at line 1342 of file SolverParameter.cs.

| static SolverParameter MyCaffe.param.SolverParameter.FromProto | ( | RawProto rp | ) | static |

Parses a new SolverParameter from a RawProto.

Parameters

| rp | Specifies the RawProto representing the SolverParameter. |

Definition at line 1092 of file SolverParameter.cs.

| override RawProto MyCaffe.param.SolverParameter.ToProto | ( | string strName | ) | virtual |

Converts the SolverParameter into a RawProto.

Parameters

| strName | Specifies a name given to the RawProto. |

Implements MyCaffe.basecode.BaseParameter.

Definition at line 965 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.accuracy_average_window [getset] |

Specifies the window over which to average the accuracies (default = 0, which disables averaging).

Definition at line 954 of file SolverParameter.cs.

| double MyCaffe.param.SolverParameter.adamw_decay [getset] |

Specifies the 'AdamW' detached weight decay value used by the 'AdamW' solver (default = 0.1).

Decay applied to detached weight decay.

Definition at line 877 of file SolverParameter.cs.

| ApVersion MyCaffe.param.SolverParameter.ap_version [getset] |

Specifies the AP Version to use for average precision when using Single-Shot Detection (SSD) - (default = INTEGRAL).

Definition at line 933 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.average_loss [getset] |

Display the loss averaged over the last average_loss iterations.

Definition at line 423 of file SolverParameter.cs.
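A sketch of how a loss averaged over the last average_loss iterations can be maintained, assuming a simple sliding window. The `SmoothedLoss` class is hypothetical, and MyCaffe's solver may compute the average differently (BVLC Caffe, for example, updates a running value over a ring buffer of recent losses).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: averages the most recent losses over a window of
// 'average_loss' iterations (a window of 1 reports the raw loss).
public sealed class SmoothedLoss
{
    private readonly int m_nWindow;
    private readonly Queue<double> m_rgLosses = new Queue<double>();

    public SmoothedLoss(int nAverageLoss)
    {
        m_nWindow = Math.Max(1, nAverageLoss);
    }

    // Adds the loss of the latest iteration and returns the current average.
    public double Add(double dfLoss)
    {
        m_rgLosses.Enqueue(dfLoss);
        if (m_rgLosses.Count > m_nWindow)
            m_rgLosses.Dequeue(); // drop the oldest loss once the window is full
        return m_rgLosses.Average();
    }
}
```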

| double MyCaffe.param.SolverParameter.base_lr [getset] |

The base learning rate (default = 0.01).

Definition at line 401 of file SolverParameter.cs.

| double MyCaffe.param.SolverParameter.clip_gradients [getset] |

Set clip_gradients to >= 0 to clip parameter gradients to that L2 norm, whenever their actual L2 norm is larger.

Definition at line 706 of file SolverParameter.cs.
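The following sketch shows global L2-norm clipping as described for clip_gradients, modeled on BVLC Caffe's gradient clipping step. The `GradientClipping.Clip` helper and the use of plain `double[]` arrays for each parameter's gradient are assumptions for illustration. The boolean result marks when clipping occurred, which is the kind of event enable_clip_gradient_status reports.

```csharp
using System;

public static class GradientClipping
{
    // Scales all gradients in place so their combined L2 norm is at most dfClip.
    // A negative dfClip disables clipping. Returns true if clipping was applied.
    public static bool Clip(double[][] rgDiffs, double dfClip)
    {
        if (dfClip < 0)
            return false;

        // The norm is taken over ALL parameter gradients together, not per blob.
        double dfSumSq = 0;
        foreach (double[] diff in rgDiffs)
            foreach (double g in diff)
                dfSumSq += g * g;

        double dfNorm = Math.Sqrt(dfSumSq);
        if (dfNorm <= dfClip)
            return false;

        double dfScale = dfClip / dfNorm;
        foreach (double[] diff in rgDiffs)
            for (int i = 0; i < diff.Length; i++)
                diff[i] *= dfScale;

        return true;
    }
}
```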

| string MyCaffe.param.SolverParameter.custom_trainer [getset] |

Specifies the name of the custom trainer (if any). This optional setting is used by external software to provide a customized training process. Each custom trainer must implement the IDnnCustomTraininer interface, which contains a 'Name' property; the name returned from this property is the value set here as the 'custom_trainer'.

Definition at line 268 of file SolverParameter.cs.

| string MyCaffe.param.SolverParameter.custom_trainer_properties [getset] |

Specifies the custom trainer properties (if any). This optional setting is used by external software to provide the properties for a customized training process.

Note that all spaces are replaced with '~' characters to avoid parsing errors.

Definition at line 283 of file SolverParameter.cs.
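A sketch of the space-to-'~' substitution described above. The helper names are hypothetical, and the decode step assumes the reader simply reverses the substitution, which the source does not state. One consequence of this design is that a literal '~' in a property value cannot survive a round trip.

```csharp
// Hypothetical helpers illustrating the space/'~' substitution applied to
// custom_trainer_properties; the Decode step is an assumption.
public static class TrainerPropertyEncoding
{
    // Replace spaces so the stored value does not cause parsing errors.
    public static string Encode(string strProperties) => strProperties?.Replace(' ', '~');

    // Restore the spaces when the value is read back.
    public static string Decode(string strProperties) => strProperties?.Replace('~', ' ');
}
```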

| bool MyCaffe.param.SolverParameter.debug_info [getset] |

If true, print information about the state of the net that may help with debugging learning problems.

Definition at line 889 of file SolverParameter.cs.

| double MyCaffe.param.SolverParameter.delta [getset] |

Numerical stability term for the RMSProp, AdaGrad, AdaDelta, Adam and AdamW solvers (default = 1e-08).

Definition at line 838 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.device_id [getset] |

The device id that will be used when run on the GPU.

Definition at line 797 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.display [getset] |

The number of iterations between displaying info. If display = 0, no info will be displayed.

Definition at line 413 of file SolverParameter.cs.

| bool MyCaffe.param.SolverParameter.enable_clip_gradient_status [getset] |

Optionally, enable status output when gradients are clipped (default = true).

Definition at line 716 of file SolverParameter.cs.

| EvaluationType MyCaffe.param.SolverParameter.eval_type [getset] |

Specifies the evaluation type to use when using Single-Shot Detection (SSD) - (default = NONE, SSD not used).

Definition at line 922 of file SolverParameter.cs.

| double MyCaffe.param.SolverParameter.gamma [getset] |

Specifies the 'gamma' parameter used to compute the learning rate for the 'step', 'multistep', 'exp', 'inv', and 'sigmoid' policies (default = 0.1).

Definition at line 575 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.iter_size [getset] |

Accumulate gradients over 'iter_size' x 'batch_size' instances.

Definition at line 445 of file SolverParameter.cs.
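With iter_size > 1, the solver runs several forward/backward passes before each weight update. A minimal sketch, assuming (as in BVLC Caffe) that the accumulated gradient is divided by iter_size so that, for a loss averaged over the batch, the update matches one batch of iter_size x batch_size items. `GradientAccumulation` and the `computeGradient` callback are hypothetical.

```csharp
using System;

public static class GradientAccumulation
{
    // Sums the gradients of nIterSize mini-batches, then divides by nIterSize.
    // computeGradient(i) is a hypothetical callback returning mini-batch i's gradient.
    public static double[] Accumulate(int nIterSize, Func<int, double[]> computeGradient)
    {
        if (nIterSize < 1)
            throw new ArgumentOutOfRangeException(nameof(nIterSize));

        double[] rgSum = null;
        for (int i = 0; i < nIterSize; i++)
        {
            double[] rgGrad = computeGradient(i);
            if (rgSum == null)
                rgSum = new double[rgGrad.Length];
            for (int j = 0; j < rgGrad.Length; j++)
                rgSum[j] += rgGrad[j];
        }

        // Normalize by iter_size before the single weight update.
        for (int j = 0; j < rgSum.Length; j++)
            rgSum[j] /= nIterSize;
        return rgSum;
    }
}
```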

| int MyCaffe.param.SolverParameter.lbgfs_corrections [getset] |

Specifies the number of L-BFGS corrections used with the L-BFGS solver.

Definition at line 900 of file SolverParameter.cs.

| LearningRatePolicyType MyCaffe.param.SolverParameter.LearningRatePolicy [getset] |

The learning rate decay policy.

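The currently implemented learning rate policies are as follows:

- FIXED: always return base_lr.
- STEP: return base_lr * gamma ^ (floor(iter / stepsize))
- EXP: return base_lr * gamma ^ iter
- INV: return base_lr * (1 + gamma * iter) ^ (- power)
- MULTISTEP: similar to STEP, but allows non-uniform steps defined by stepvalue.
- POLY: the effective learning rate follows a polynomial decay, reaching zero at max_iter: return base_lr * (1 - iter / max_iter) ^ power
- SIGMOID: the effective learning rate follows a sigmoid decay: return base_lr * (1 / (1 + exp(-gamma * (iter - stepsize))))

where base_lr, max_iter, gamma, stepsize, stepvalue and power are defined in the solver protocol buffer, and iter is the current iteration.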

Definition at line 474 of file SolverParameter.cs.
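The policies listed above can be expressed as a single function. This is a sketch under assumptions: `LrPolicy` is a hypothetical local mirror of LearningRatePolicyType, the parameters mirror base_lr, gamma, power, stepsize, stepvalue and max_iter, and stepvalue is assumed to be sorted in ascending order.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical local mirror of LearningRatePolicyType so the sketch is self-contained.
public enum LrPolicy { FIXED, STEP, EXP, INV, MULTISTEP, POLY, SIGMOID }

public static class LearningRateSketch
{
    // Returns the learning rate at iteration 'iter' for the given policy.
    // stepsize must be > 0 for STEP; stepvalue must be sorted ascending for MULTISTEP.
    public static double Rate(LrPolicy policy, int iter, double baseLr, double gamma,
                              double power, int stepsize, IList<int> stepvalue, int maxIter)
    {
        switch (policy)
        {
            case LrPolicy.FIXED:
                return baseLr;
            case LrPolicy.STEP:
                // Integer division acts as floor(iter / stepsize) for iter >= 0.
                return baseLr * Math.Pow(gamma, iter / stepsize);
            case LrPolicy.EXP:
                return baseLr * Math.Pow(gamma, iter);
            case LrPolicy.INV:
                return baseLr * Math.Pow(1.0 + gamma * iter, -power);
            case LrPolicy.MULTISTEP:
            {
                // Count how many step values have been reached so far.
                int nStep = 0;
                while (nStep < stepvalue.Count && iter >= stepvalue[nStep])
                    nStep++;
                return baseLr * Math.Pow(gamma, nStep);
            }
            case LrPolicy.POLY:
                return baseLr * Math.Pow(1.0 - (double)iter / maxIter, power);
            case LrPolicy.SIGMOID:
                return baseLr / (1.0 + Math.Exp(-gamma * (iter - stepsize)));
            default:
                throw new ArgumentOutOfRangeException(nameof(policy));
        }
    }
}
```

For example, with base_lr = 0.01, gamma = 0.1 and stepvalue = { 100, 200 }, MULTISTEP yields 0.001 at iteration 150.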

| string MyCaffe.param.SolverParameter.lr_policy [getset] |

The learning rate decay policy.

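The currently implemented learning rate policies are as follows:

- 'fixed': always return base_lr.
- 'step': return base_lr * gamma ^ (floor(iter / stepsize))
- 'exp': return base_lr * gamma ^ iter
- 'inv': return base_lr * (1 + gamma * iter) ^ (- power)
- 'multistep': similar to 'step', but allows non-uniform steps defined by stepvalue.
- 'poly': the effective learning rate follows a polynomial decay, reaching zero at max_iter: return base_lr * (1 - iter / max_iter) ^ power
- 'sigmoid': the effective learning rate follows a sigmoid decay: return base_lr * (1 / (1 + exp(-gamma * (iter - stepsize))))

where base_lr, max_iter, gamma, stepsize, stepvalue and power are defined in the solver protocol buffer, and iter is the current iteration.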

Definition at line 564 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.max_iter [getset] |

The maximum number of iterations.

Definition at line 434 of file SolverParameter.cs.

| double MyCaffe.param.SolverParameter.momentum [getset] |

Specifies the momentum value, used by all solvers EXCEPT the 'AdaGrad' and 'RMSProp' solvers. For these latter solvers, momentum should be 0.

Definition at line 598 of file SolverParameter.cs.
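To illustrate how momentum enters the SGD and NESTEROV updates, here is a sketch based on the BVLC Caffe update rules, which keep a per-weight history h. The `MomentumUpdate` class is hypothetical, and the learning rate passed in is assumed to already reflect the learning rate policy.

```csharp
public static class MomentumUpdate
{
    // SGD with momentum: h = momentum * h + lr * g; w -= h.
    public static void SgdStep(double[] w, double[] g, double[] h, double lr, double momentum)
    {
        for (int i = 0; i < w.Length; i++)
        {
            h[i] = momentum * h[i] + lr * g[i];
            w[i] -= h[i];
        }
    }

    // Nesterov: same history update, but the applied step is
    // (1 + momentum) * h_new - momentum * h_old.
    public static void NesterovStep(double[] w, double[] g, double[] h, double lr, double momentum)
    {
        for (int i = 0; i < w.Length; i++)
        {
            double hOld = h[i];
            h[i] = momentum * h[i] + lr * g[i];
            w[i] -= (1.0 + momentum) * h[i] - momentum * hOld;
        }
    }
}
```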

| double MyCaffe.param.SolverParameter.momentum2 [getset] |

An additional momentum property for the Adam and AdamW solvers (default = 0.999).

Definition at line 849 of file SolverParameter.cs.
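For Adam, momentum plays the role of beta1 and momentum2 the role of beta2, with delta as the stability term. The sketch below follows the bias-corrected update used by BVLC Caffe's Adam solver. The optional decoupled decay follows the common AdamW formulation (w -= lr * decay * w) and is an assumption about how adamw_decay is applied, not MyCaffe's confirmed implementation; `AdamSketch` is hypothetical.

```csharp
using System;

public static class AdamSketch
{
    // One Adam step at 1-based step t. beta1 = momentum, beta2 = momentum2, eps = delta.
    // If dfDecoupledDecay > 0, an AdamW-style decoupled weight decay is also applied.
    public static void Step(double[] w, double[] g, double[] m, double[] v, int t,
                            double lr, double beta1, double beta2, double eps,
                            double dfDecoupledDecay = 0.0)
    {
        // Bias correction for the zero-initialized moment estimates.
        double correction = Math.Sqrt(1.0 - Math.Pow(beta2, t)) / (1.0 - Math.Pow(beta1, t));

        for (int i = 0; i < w.Length; i++)
        {
            m[i] = beta1 * m[i] + (1.0 - beta1) * g[i];
            v[i] = beta2 * v[i] + (1.0 - beta2) * g[i] * g[i];

            // Decoupled decay acts on the weights directly, not through the gradient.
            if (dfDecoupledDecay > 0)
                w[i] -= lr * dfDecoupledDecay * w[i];

            w[i] -= lr * correction * m[i] / (Math.Sqrt(v[i]) + eps);
        }
    }
}
```

A useful property to check against: the first Adam step moves each weight by roughly lr in the direction opposite the gradient, regardless of the gradient's scale.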

| NetParameter MyCaffe.param.SolverParameter.net_param [getset] |

Inline train net param, possibly combined with one or more test nets.

Definition at line 293 of file SolverParameter.cs.

| bool MyCaffe.param.SolverParameter.output_average_results [getset] |

Specifies to average loss results before they are output; this can be faster when there are many results in a cycle.

Definition at line 255 of file SolverParameter.cs.

| double MyCaffe.param.SolverParameter.power [getset] |

The 'power' parameter used to compute the learning rate for the 'inv' and 'poly' policies.

Definition at line 586 of file SolverParameter.cs.

| long MyCaffe.param.SolverParameter.random_seed [getset] |

If non-negative, the seed with which the Solver initializes the Caffe random number generator, which is useful for reproducible results. Otherwise (and by default), a seed derived from the system clock is used.

Definition at line 809 of file SolverParameter.cs.

| RegularizationType MyCaffe.param.SolverParameter.Regularization [getset] |

Specifies the regularization type (default = L2).

The regularization types supported are NONE (no regularization), L1 and L2.

Definition at line 623 of file SolverParameter.cs.
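How the regularization type changes the gradient, sketched after BVLC Caffe's regularization step: L2 adds weight_decay * w to the gradient and L1 adds weight_decay * sign(w). `RegKind` is a hypothetical mirror of RegularizationType, and per-parameter decay multipliers are omitted.

```csharp
using System;

// Hypothetical local mirror of RegularizationType.
public enum RegKind { NONE, L1, L2 }

public static class RegularizationSketch
{
    // Adds the weight-decay term to the gradient g in place.
    public static void Apply(RegKind kind, double[] w, double[] g, double weightDecay)
    {
        if (kind == RegKind.NONE || weightDecay == 0)
            return;

        for (int i = 0; i < w.Length; i++)
        {
            // L2 pulls weights toward zero proportionally; L1 by a constant amount.
            g[i] += kind == RegKind.L2 ? weightDecay * w[i] : weightDecay * Math.Sign(w[i]);
        }
    }
}
```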

| string MyCaffe.param.SolverParameter.regularization_type [getset] |

Specifies the regularization type (default = 'L2').

The regularization types supported are the string forms of the RegularizationType values: 'NONE', 'L1' and 'L2'.

Definition at line 673 of file SolverParameter.cs.

| double MyCaffe.param.SolverParameter.rms_decay [getset] |

Specifies the 'RMSProp' decay value used by the 'RMSProp' solver (default = 0.95).

MeanSquare(t) = rms_decay * MeanSquare(t-1) + (1 - rms_decay) * SquareGradient(t)

Definition at line 863 of file SolverParameter.cs.
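The MeanSquare recurrence above drives the RMSProp step. This sketch assumes the BVLC Caffe form of the update, w -= lr * g / (sqrt(MeanSquare) + delta); the `RmsPropSketch` class is hypothetical.

```csharp
using System;

public static class RmsPropSketch
{
    // MeanSquare(t) = rms_decay * MeanSquare(t-1) + (1 - rms_decay) * g^2
    // w -= lr * g / (sqrt(MeanSquare(t)) + delta)
    public static void Step(double[] w, double[] g, double[] meanSquare,
                            double lr, double rmsDecay, double delta)
    {
        for (int i = 0; i < w.Length; i++)
        {
            meanSquare[i] = rmsDecay * meanSquare[i] + (1.0 - rmsDecay) * g[i] * g[i];
            w[i] -= lr * g[i] / (Math.Sqrt(meanSquare[i]) + delta);
        }
    }
}
```

Dividing by the running RMS of the gradient normalizes the step size per weight, which is also why momentum should be 0 for this solver.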

| bool MyCaffe.param.SolverParameter.show_per_class_result [getset] |

Specifies whether or not to display results per class when using Single-Shot Detection (SSD) - (default = false).

Definition at line 944 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.snapshot [getset] |

Specifies the snapshot interval.

Definition at line 727 of file SolverParameter.cs.

| bool MyCaffe.param.SolverParameter.snapshot_after_train [getset] |

If false, don't save a snapshot after training finishes.

Definition at line 911 of file SolverParameter.cs.

| bool MyCaffe.param.SolverParameter.snapshot_diff [getset] |

Whether to snapshot diff in the results or not. Snapshotting diff will help debugging but the final protocol buffer size will be much larger.

Definition at line 750 of file SolverParameter.cs.

| SnapshotFormat MyCaffe.param.SolverParameter.snapshot_format [getset] |

The snapshot format.

Currently only the Binary Proto Buffer format is supported.

Definition at line 764 of file SolverParameter.cs.

| bool MyCaffe.param.SolverParameter.snapshot_include_state [getset] |

Specifies whether or not the snapshot includes the solver state. The default = false. Including the solver state slows down each snapshot.

Definition at line 786 of file SolverParameter.cs.

| bool MyCaffe.param.SolverParameter.snapshot_include_weights [getset] |

Specifies whether or not the snapshot includes the trained weights. The default = true.

Definition at line 775 of file SolverParameter.cs.

| string MyCaffe.param.SolverParameter.snapshot_prefix [getset] |

The prefix for the snapshot.

Definition at line 738 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.stepsize [getset] |

The stepsize for learning rate policy 'step'.

Definition at line 684 of file SolverParameter.cs.

| List< int > MyCaffe.param.SolverParameter.stepvalue [getset] |

The step values for learning rate policy 'multistep'.

Definition at line 695 of file SolverParameter.cs.

| bool MyCaffe.param.SolverParameter.test_compute_loss [getset] |

Specifies whether to compute the loss during testing.

Definition at line 379 of file SolverParameter.cs.

| bool MyCaffe.param.SolverParameter.test_initialization [getset] |

If true, run an initial test pass before the first iteration, ensuring memory availability and printing the starting value of the loss.

Definition at line 391 of file SolverParameter.cs.

| int MyCaffe.param.SolverParameter.test_interval [getset] |

The number of iterations between two testing phases.

Definition at line 369 of file SolverParameter.cs.
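How test_interval, test_initialization, display, snapshot and snapshot_after_train interact can be sketched as an event schedule. The loop below is modeled on BVLC Caffe's solver loop and is an assumption about MyCaffe's behavior; `ScheduleSketch` is hypothetical, and each "test@N" event would run test_iter[i] iterations for each test net.

```csharp
using System.Collections.Generic;

public static class ScheduleSketch
{
    // Returns the ordered list of test/display/snapshot events for a training run.
    public static List<string> Events(int maxIter, int testInterval, bool testInitialization,
                                      int display, int snapshot, bool snapshotAfterTrain)
    {
        var events = new List<string>();
        int iter = 0;
        while (iter < maxIter)
        {
            // Test at the start of an iteration; iteration 0 only if test_initialization.
            if (testInterval > 0 && iter % testInterval == 0 && (iter > 0 || testInitialization))
                events.Add($"test@{iter}");
            if (display > 0 && iter % display == 0)
                events.Add($"display@{iter}");

            iter++; // forward/backward (iter_size times) and the weight update happen here

            if (snapshot > 0 && iter % snapshot == 0)
                events.Add($"snapshot@{iter}");
        }

        // Final snapshot, unless one was just taken at max_iter.
        if (snapshotAfterTrain && (snapshot == 0 || iter % snapshot != 0))
            events.Add($"snapshot@{iter}");
        // Final test if max_iter falls on a test boundary.
        if (testInterval > 0 && iter % testInterval == 0)
            events.Add($"test@{iter}");
        return events;
    }
}
```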

| List< int > MyCaffe.param.SolverParameter.test_iter [getset] |

The number of iterations for each test.

Definition at line 358 of file SolverParameter.cs.

| List< NetParameter > MyCaffe.param.SolverParameter.test_net_param [getset] |

Inline test net params.

Definition at line 313 of file SolverParameter.cs.

| List< NetState > MyCaffe.param.SolverParameter.test_state [getset] |

The states for the train/test nets. Must be unspecified or specified once per net.

By default, all states have solver = true; train_state has phase = TRAIN, and all test_states have phase = TEST. Other defaults are set according to the NetState defaults.

Definition at line 347 of file SolverParameter.cs.

| NetParameter MyCaffe.param.SolverParameter.train_net_param [getset] |

Inline train net param.

Definition at line 303 of file SolverParameter.cs.

| NetState MyCaffe.param.SolverParameter.train_state [getset] |

The states for the train/test nets. Must be unspecified or specified once per net.

By default, all states have solver = true; train_state has phase = TRAIN, and all test_states have phase = TEST. Other defaults are set according to the NetState defaults.

Definition at line 330 of file SolverParameter.cs.

| SolverType MyCaffe.param.SolverParameter.type [getset] |

Specifies the solver type.

Definition at line 827 of file SolverParameter.cs.

| double MyCaffe.param.SolverParameter.weight_decay [getset] |

Specifies the weight decay (default = 0.0005).

Definition at line 608 of file SolverParameter.cs.