This tutorial will guide you through the steps to create and train the LSTM [1] based Recurrent Char-RNN as described by [2] and inspired by adepierre [3].

Step 1 – Create the Project

In the first step we need to create the project that contains the DataGeneral gym, which is then uses the streaming database to feed the Shakespeare training data to the network. The project will also use the Char-RNN model and SGD solver.

First select the Add Project (![]() ) button at the bottom of the Solutions window, which will display the New Project dialog.

) button at the bottom of the Solutions window, which will display the New Project dialog.

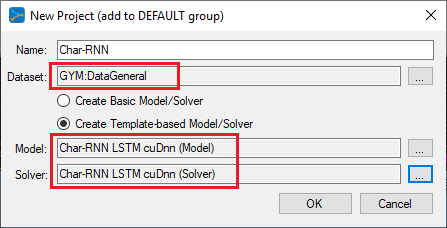

RECOMMENDED: To setup the cuDNN based LSTM model (which is 5x faster than the Caffe version), fill out the New Project dialog as shown below.

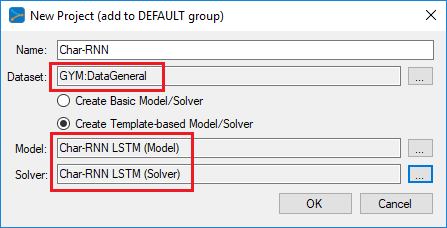

Alternatively, to setup the Caffe based LSTM model, fill out the New Project dialog as shown below.

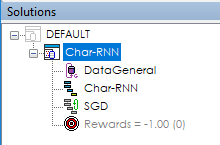

Upon selecting OK on the New Project dialog, the new Char-RNN project will be displayed in the Solutions window.

Step 2 – Review The Model

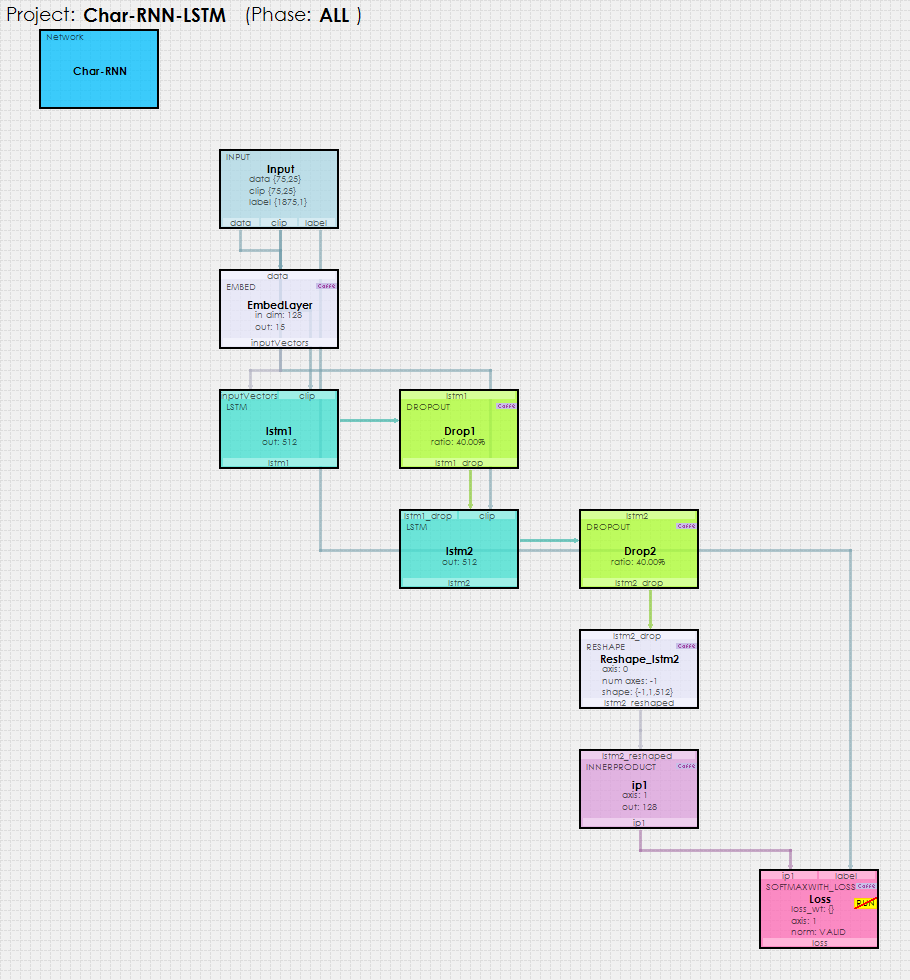

Now that you have created the project, lets open up the model to see how it is organized. To review the model, double click on the Char-RNN model within the new Char-RNN project, which will open up the Model Editor window.

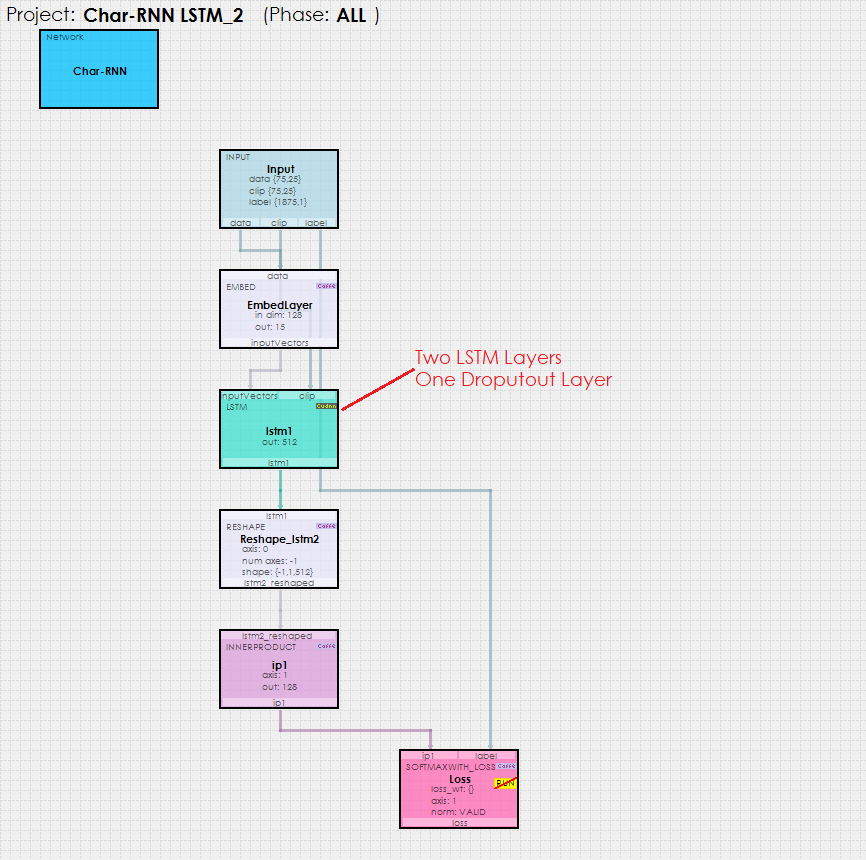

The Char-RNN model uses an Input layer whos blobs are then filled by the MyCaffeTrainerRNN with the data fed to it via the DataGeneral gym.

The input data is fed into an EMBED layer which is used to learn a 15 element output for each character value. The EMBED outputs are then fed into the first of two LSTM layers

At the end of the network an INNER_PRODUCT layer calculates the probabilities for the next character based on the sequence of input characters.

But what actually happens within each LSTM layer to make this happen?

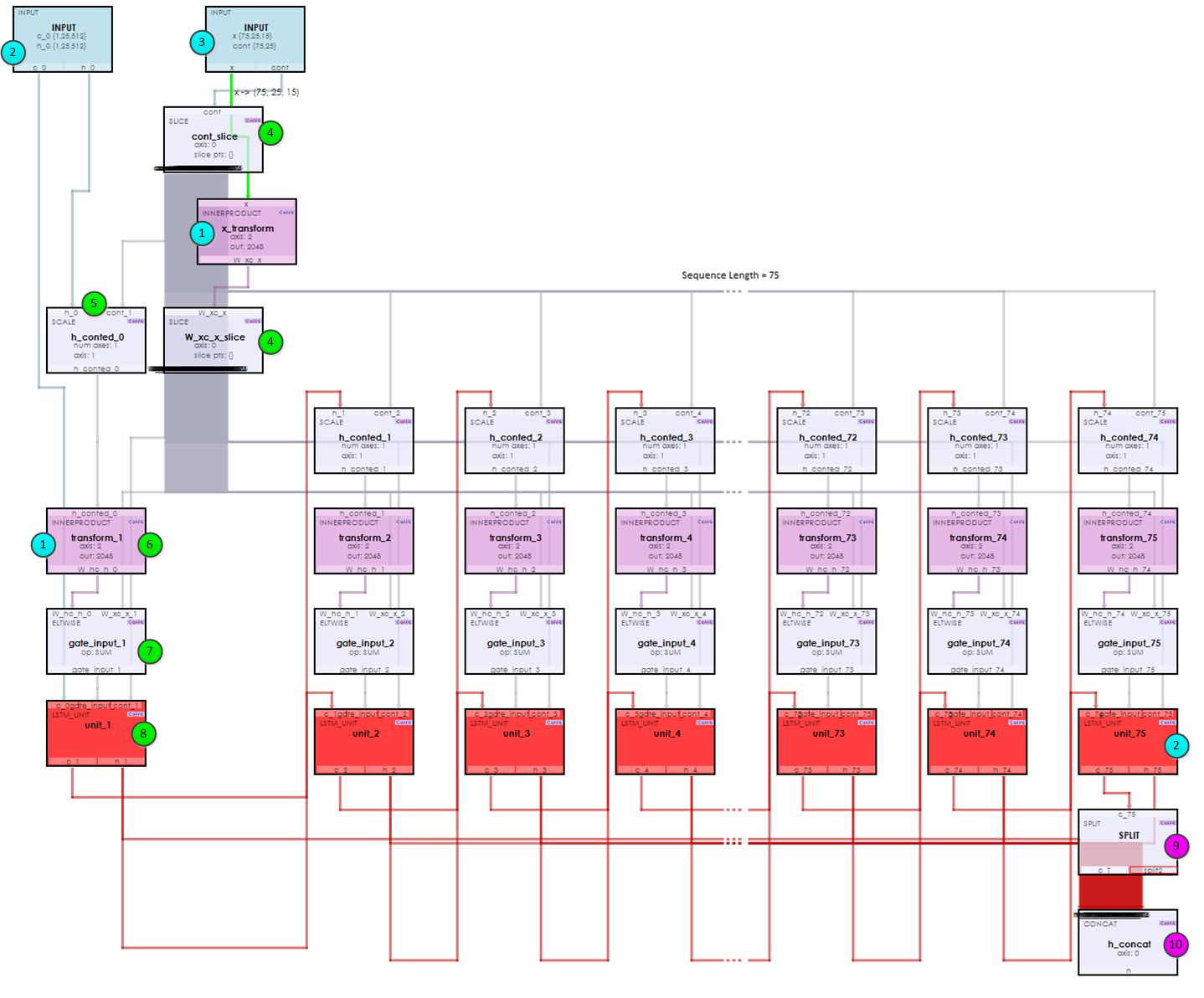

Each LSTM layer contains an Unrolled Net which unrolls the recurrent operations. As shown below, you can see that each of the 75 elements within the sequence are ‘unrolled’ into a set of layers: SCALE, INNER_PRODUCT, RESHAPE and LSTM_UNIT. Each set process the data and feeds it into the next thus forming the recurrent nature of the network.

During the initialization (steps 1-3) the weights are loaded and data is fed into the x input blob. Next in steps 4-8, the recurrent nature of the network processes the each item within the sequence. Upon reaching the end the results are concatenated to form the output in steps 9 and 10. This same process occurs in both LSTM layers – so the full Char-RNN actually has over 600 layers. Amazingly all of this processing for both the forward and backward pass happens in around 64 milliseconds on a Titan Xp running in TCC mode!

Step 1b – Using the CUDNN LSTM Engine

To get up to a 5x speed increase, the LSTM model above can be simplified down to what looks like a single LSTM layer that uses the CUDNN engine.

By changing the first LSTM layer engine from CAFFE (or DEFAULT) to CUDNN, the second LSTM layer and two DROPOUT layers can be removed to create the simpler, but faster model shown above.

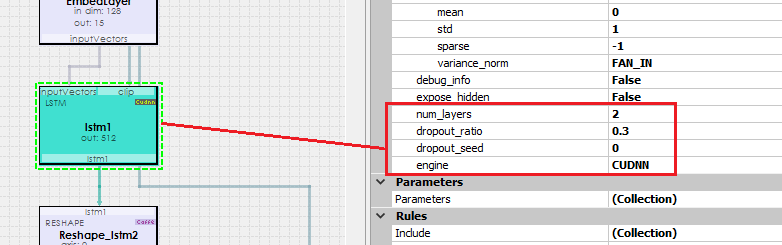

Changing the LSTM engine to CUDNN enables the ‘num_layers’, ‘dropout_ratio’ and ‘dropout_seed’ settings.

num_layers – this setting specifies the number of LSTM layers to use, which in our case is 2. Optionally, a dropout operation is performed between each layer.

dropout_ratio – this setting specifies the percentage of dropout to apply, where 0 = none, 0.3 = 30%, etc.

dropout_seed – this setting specifies the seed used to initialize the dropout layers.

Once you are done configuring the LSTM layer to use the CUDNN engine, you are ready to start training, and do so with a big speed up!

Step 2 – Training

The new MyCaffeTrainerRNN (which is used in a similar way to the MyCaffeTrainerRL) is used to train the model. This trainer takes care of querying the MyCaffe streaming database for data and feeding it into the INPUT layer of the Char-RNN model.

Solver Settings

The SGD solver is used to train the Char-RNN model with the following settings, which are already set in the project created above.

Learning Rate (base_lr) = 0.05

The MyCaffeTrainerRNN that trains the open MyCaffe project uses the following specific settings.

Trainer Type = RNN.SIMPLE; use the policy gradient trainer.

ConnectionCount = 1; specifies that we are only using one connection to the MyCaffe streaming database.

Connection0_CustomQueryName= StdTextFileQuery; specifies to use the standard text file query which returns the block of characters from each file.

Connection0_CustomQueryParam=FilePath~C:\ProgramData\MyCaffe\test_data\data\char-rnn|; specifies the directory where the text files to load reside.

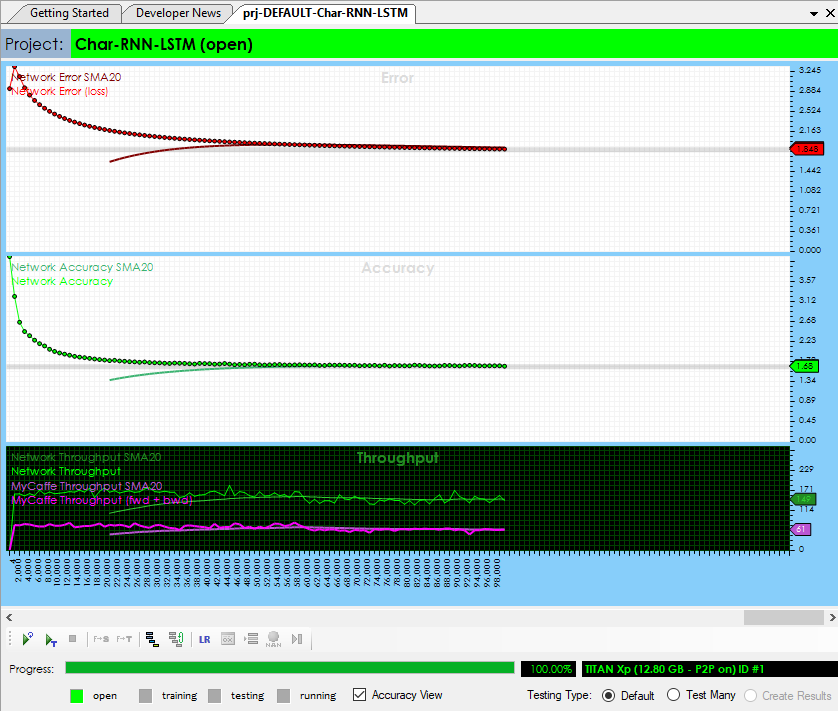

Training

Now that you are all set up, you are ready to start training the model. Double click on the Char-RNN project to open its Project window. To start training, select the Run Training (![]() ) button in the bottom left corner of the Project window.

) button in the bottom left corner of the Project window.

Step 3 – Running The Model

Once trained, you are ready to run the model to create a Shakespeare like sonnet. To do this, select the Test Many testing type (radio button in the bottom right of the Project window) and press the Test (![]() ) button.

) button.

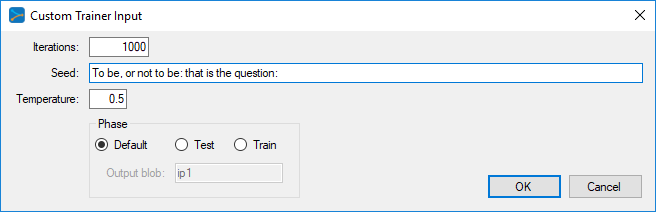

From the Custom Trainer Input dialog, enter the settings such as the number of Iterations (characters to output), Temperature and Seed to use. The Temperature is used to randomly select from characters that are close to the maximum probability, which has shown to produce better results.

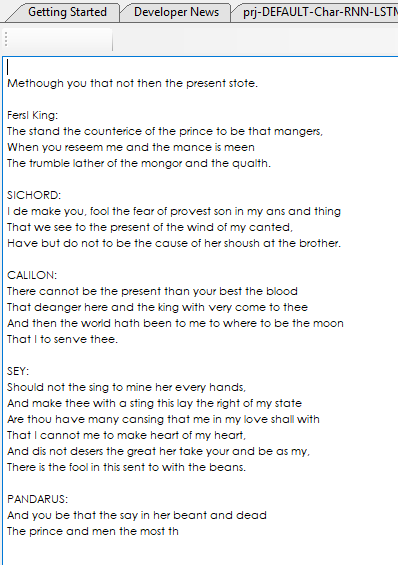

Once completed, the results are displayed in an output window.

Congratulations! You have now created your first Shakespeare sonnet using the SignalPop AI Designer!

To see the SignalPop AI Designer in action with other models, see the Examples page.

[1] J. Donahue, L. A. Hendricks, S. Guadarrama, M. Rohrbach, S. Venugopalan, K. Saenko and T. Darrell, (2014), Long-term Recurrent Convolutional Networks for Visual Recognition and Description, Arxiv.

[2] Karpathy, A., (2015), The Unreasonable Effectiveness of Recurrent Neural Networks, Andrej Karpathy blog.

[3] adepierre, (2017), adepierre/caffe-char-rnn Github, GitHub.com.