Loading Data onto the GPU

Loading Data

Once you have the data, loading it into the AI system is the first step in any AI workflow. This step involves acquiring the data, transforming it, and loading it onto the GPU. Once the data is on the GPU, the algorithms that make up the AI data pipeline can operate on it in a massively parallel, and therefore very fast, manner.

When loading data, our goal is to take the data from CPU memory, transform it into the form needed for training, testing, or running the model, and then store the transformed data in GPU memory.

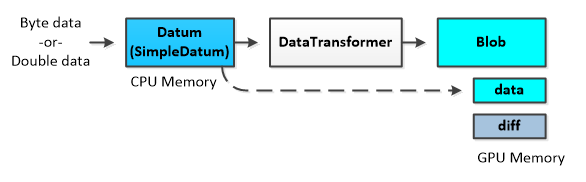

To do this, we first load the data on the CPU side into one or more SimpleDatum (or Datum) objects, each of which accepts either an array of bytes (typically used for black-and-white or color images) or an array of double values as input. Next, the DataTransformer applies the transformations specified by the TransformationParameter to the data, producing the final array of float or double values that is stored in GPU memory.
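The CPU-side flow above (datum in, transformed values out) can be sketched as follows. This is a hypothetical, simplified Python model and not the MyCaffe API: the classes only borrow the names SimpleDatum, DataTransformer, and TransformationParameter, and the `scale` and `mean_value` settings (subtract the mean, then scale) are assumed for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SimpleDatum:
    """Hypothetical stand-in: holds either raw bytes (e.g. pixels) or doubles."""
    channels: int
    height: int
    width: int
    byte_data: Optional[bytes] = None
    real_data: Optional[List[float]] = None

    def values(self) -> List[float]:
        # Byte data is widened to float; real data is used as-is.
        if self.byte_data is not None:
            return [float(b) for b in self.byte_data]
        return list(self.real_data or [])


@dataclass
class TransformationParameter:
    """Hypothetical subset of transformation settings."""
    scale: float = 1.0
    mean_value: float = 0.0


class DataTransformer:
    """Hypothetical transformer: applies the parameter settings to a datum."""

    def __init__(self, param: TransformationParameter) -> None:
        self.param = param

    def transform(self, datum: SimpleDatum) -> List[float]:
        # Subtract the mean, then scale each value.
        return [(v - self.param.mean_value) * self.param.scale
                for v in datum.values()]


# A 2x2 single-channel "image" stored as bytes.
pixels = SimpleDatum(channels=1, height=2, width=2, byte_data=bytes([0, 64, 128, 255]))
transformer = DataTransformer(TransformationParameter(scale=1.0 / 255.0))
host_values = transformer.transform(pixels)  # values in [0, 1], ready to copy to the GPU
```

In this sketch, `host_values` plays the role of the final float array that would then be handed to a Blob for transfer to GPU memory.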

The GPU memory is managed by the Blob object, which handles transferring data between the CPU and the GPU. Each Blob manages two parallel blocks of GPU memory of equal size: the data block and the diff block. When training a deep learning model, the data block is used by each layer's forward pass, whereas the diff block stores the gradients calculated during the backward pass.
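The two equal-sized blocks a Blob manages can be modeled roughly as below. This is a hypothetical, CPU-only sketch, not the MyCaffe Blob class: plain Python lists stand in for GPU memory, and the method names `set_cpu_data`, `get_cpu_data`, and `get_cpu_diff` are assumptions chosen for illustration.

```python
from math import prod
from typing import List, Sequence


class Blob:
    """Hypothetical, simplified Blob: two equal-sized 'device' buffers."""

    def __init__(self, shape: Sequence[int]) -> None:
        self.shape = list(shape)
        self.count = prod(self.shape)
        # 'data' is read by the forward pass; 'diff' holds gradients from the backward pass.
        self._gpu_data = [0.0] * self.count
        self._gpu_diff = [0.0] * self.count

    def set_cpu_data(self, values: Sequence[float]) -> None:
        # Models a host-to-device copy into the data block.
        if len(values) != self.count:
            raise ValueError(f"expected {self.count} values, got {len(values)}")
        self._gpu_data = [float(v) for v in values]

    def get_cpu_data(self) -> List[float]:
        # Models a device-to-host copy of the data block.
        return list(self._gpu_data)

    def get_cpu_diff(self) -> List[float]:
        # Models a device-to-host copy of the diff block.
        return list(self._gpu_diff)


blob = Blob([1, 1, 1, 3])
blob.set_cpu_data([1.0, 2.0, 3.0])
```

Because both blocks are sized from the same shape, every value in the data block has a matching gradient slot in the diff block.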

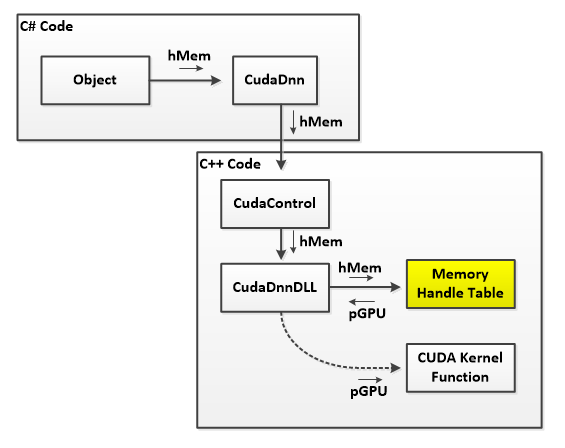

Once the data resides on the GPU, the Blob's gpu_data handle is used to access that GPU memory. CudaDnn methods typically pass a handle to GPU memory (such as the gpu_data handle above) to the corresponding function implemented in the low-level CudaDnnDLL.

Internally, the CudaDnnDLL uses the handle to look up the GPU memory pointer in the state that the DLL manages. That pointer to the actual GPU memory is then passed to the CUDA kernel function that implements the AI operation.

Each instance of the CudaDnn object manages a separate state within the CudaDnnDLL, which includes all memory and CUDA descriptors allocated by that CudaDnn instance.
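The handle-to-pointer lookup and the per-instance state described above can be illustrated with a small model. This is a hypothetical Python sketch, not the CudaDnnDLL's actual design: `_NativeState`, `FakeCudaDnn`, and the methods `alloc`, `set_values`, `get_values`, and `scale` are invented names, and a dictionary of lists stands in for GPU memory and device pointers.

```python
import itertools
from typing import Dict, List, Sequence


class _NativeState:
    """Hypothetical per-instance state, as a native DLL might keep it."""

    def __init__(self) -> None:
        self.memory: Dict[int, List[float]] = {}  # handle -> "device buffer"
        self._ids = itertools.count(1)

    def alloc(self, count: int) -> int:
        handle = next(self._ids)
        self.memory[handle] = [0.0] * count
        return handle


class FakeCudaDnn:
    """Hypothetical CudaDnn stand-in that owns one private state."""

    def __init__(self) -> None:
        self._state = _NativeState()  # a separate state per instance

    def alloc(self, count: int) -> int:
        return self._state.alloc(count)

    def set_values(self, handle: int, values: Sequence[float]) -> None:
        buf = self._state.memory[handle]  # handle -> buffer lookup
        if len(values) != len(buf):
            raise ValueError("size mismatch")
        buf[:] = [float(v) for v in values]

    def get_values(self, handle: int) -> List[float]:
        return list(self._state.memory[handle])

    def scale(self, handle: int, alpha: float) -> None:
        # Look up the buffer by handle, then run the "kernel" over it.
        buf = self._state.memory[handle]
        for i in range(len(buf)):
            buf[i] *= alpha


cuda_a = FakeCudaDnn()
cuda_b = FakeCudaDnn()
h_a = cuda_a.alloc(3)
h_b = cuda_b.alloc(3)
cuda_a.set_values(h_a, [1.0, 2.0, 3.0])
cuda_a.scale(h_a, 2.0)
```

In this model, `h_a` and `h_b` are both `1`, yet they refer to different buffers, because each instance resolves handles only against its own state.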

Coding Examples

The following sections demonstrate different ways of loading data from the CPU to the GPU.

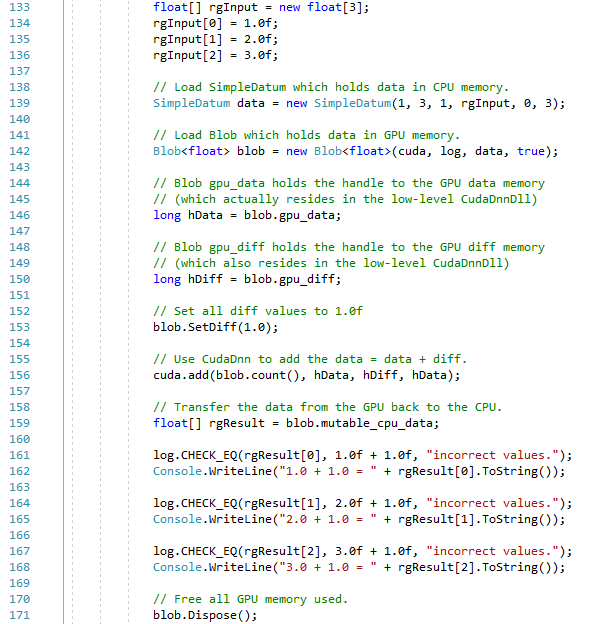

Loading a Blob and Adding Values

In our first example, we load an array of three items and use it to initialize a SimpleDatum with the shape { channels = 1, width = 3, height = 1 } and the values 1.0, 2.0, and 3.0. Next, the data is transferred from the SimpleDatum on the CPU to the Blob, which stores the data on the GPU. Finally, the CudaDnn object is used to perform a simple addition, which takes place on the GPU.
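The flow of this first example (SimpleDatum, then Blob, then an addition through CudaDnn) can be approximated with the CPU-only sketch below. It is hypothetical and does not reproduce the sample's actual C# code: `FakeCudaDnn`, `alloc`, `set_values`, `get_values`, `add`, and `Blob.set_data` are invented names, and adding the blob to itself is an assumed choice of "simple addition".

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class SimpleDatum:
    """Hypothetical CPU-side container: a shape plus real-valued data."""
    channels: int
    height: int
    width: int
    real_data: List[float]


class FakeCudaDnn:
    """Hypothetical CudaDnn stand-in: 'GPU' buffers live in a table keyed by handle."""

    def __init__(self) -> None:
        self._mem: Dict[int, List[float]] = {}

    def alloc(self, count: int) -> int:
        handle = len(self._mem) + 1
        self._mem[handle] = [0.0] * count
        return handle

    def set_values(self, handle: int, values: Sequence[float]) -> None:
        self._mem[handle] = [float(v) for v in values]  # models a host-to-device copy

    def get_values(self, handle: int) -> List[float]:
        return list(self._mem[handle])  # models a device-to-host copy

    def add(self, count: int, h_a: int, h_b: int, h_y: int) -> None:
        # Element-wise y = a + b; a real kernel would assign one GPU thread per index.
        a, b, y = self._mem[h_a], self._mem[h_b], self._mem[h_y]
        for i in range(count):
            y[i] = a[i] + b[i]


class Blob:
    """Hypothetical Blob stand-in holding handles to its data and diff blocks."""

    def __init__(self, cuda: FakeCudaDnn, shape: Sequence[int]) -> None:
        self.cuda = cuda
        self.count = 1
        for dim in shape:
            self.count *= dim
        self.gpu_data = cuda.alloc(self.count)  # a handle, not a raw pointer
        self.gpu_diff = cuda.alloc(self.count)

    def set_data(self, datum: SimpleDatum) -> None:
        if len(datum.real_data) != self.count:
            raise ValueError("datum size does not match blob size")
        self.cuda.set_values(self.gpu_data, datum.real_data)


cuda = FakeCudaDnn()
datum = SimpleDatum(channels=1, height=1, width=3, real_data=[1.0, 2.0, 3.0])
blob = Blob(cuda, [1, datum.channels, datum.height, datum.width])
blob.set_data(datum)

result = Blob(cuda, [1, 1, 1, 3])
cuda.add(blob.count, blob.gpu_data, blob.gpu_data, result.gpu_data)
print(cuda.get_values(result.gpu_data))  # [2.0, 4.0, 6.0]
```

Note that the addition only ever receives handles; the lookup from handle to buffer happens inside the `FakeCudaDnn` object, mirroring how the text describes CudaDnn passing handles to the DLL.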

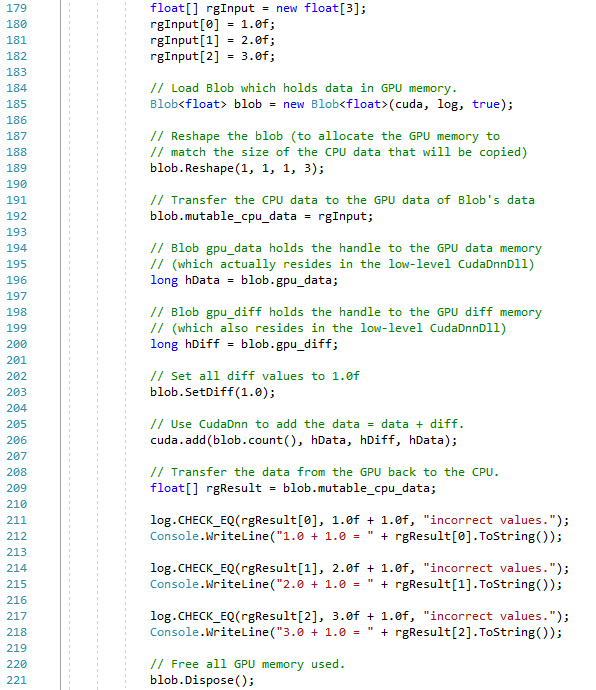

Loading a Blob without SimpleDatum

When working with Blobs, using a SimpleDatum to transfer data from the CPU to the GPU memory managed by the Blob is optional; Blobs also support transferring data directly between the CPU and GPU. The next sample shows how to perform the same simple addition without using a SimpleDatum.
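Skipping the SimpleDatum amounts to copying a plain array straight into the Blob, as in this hypothetical CPU-only sketch. It does not reflect MyCaffe's real method names: `FakeCudaDnn`, `set_cpu_data`, `get_cpu_data`, and `add` are invented for illustration, and two different input blobs are used here to make the element-wise addition easier to follow.

```python
from typing import Dict, List, Sequence


class FakeCudaDnn:
    """Hypothetical CudaDnn stand-in: 'GPU' buffers keyed by handle."""

    def __init__(self) -> None:
        self._mem: Dict[int, List[float]] = {}

    def alloc(self, count: int) -> int:
        handle = len(self._mem) + 1
        self._mem[handle] = [0.0] * count
        return handle

    def add(self, count: int, h_a: int, h_b: int, h_y: int) -> None:
        # Element-wise y = a + b, resolved through handles.
        a, b, y = self._mem[h_a], self._mem[h_b], self._mem[h_y]
        for i in range(count):
            y[i] = a[i] + b[i]


class Blob:
    """Hypothetical Blob that accepts CPU arrays directly."""

    def __init__(self, cuda: FakeCudaDnn, shape: Sequence[int]) -> None:
        self.cuda = cuda
        self.count = 1
        for dim in shape:
            self.count *= dim
        self.gpu_data = cuda.alloc(self.count)

    def set_cpu_data(self, values: Sequence[float]) -> None:
        # Direct host-to-device copy; no SimpleDatum involved.
        if len(values) != self.count:
            raise ValueError("size mismatch")
        self.cuda._mem[self.gpu_data] = [float(v) for v in values]

    def get_cpu_data(self) -> List[float]:
        # Direct device-to-host copy.
        return list(self.cuda._mem[self.gpu_data])


cuda = FakeCudaDnn()
a = Blob(cuda, [1, 1, 1, 3])
a.set_cpu_data([1.0, 2.0, 3.0])
b = Blob(cuda, [1, 1, 1, 3])
b.set_cpu_data([10.0, 20.0, 30.0])
y = Blob(cuda, [1, 1, 1, 3])
cuda.add(a.count, a.gpu_data, b.gpu_data, y.gpu_data)
print(y.get_cpu_data())  # [11.0, 22.0, 33.0]
```

The trade-off this sketch is meant to suggest: the direct path is shorter, while the SimpleDatum path lets the DataTransformer be applied before the data reaches the GPU.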

To download the code for these samples, please see the BlobUsage samples on GitHub.