
MyCaffe

1.12.2.41

Deep learning software for Windows C# programmers.


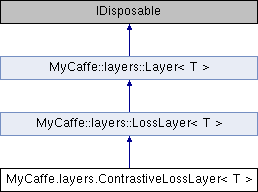

The ContrastiveLossLayer computes the contrastive loss $E = \frac{1}{2N} \sum_{n=1}^{N} \left[ y_n d_n^2 + (1 - y_n) \max(\mathrm{margin} - d_n, 0)^2 \right]$, where $d_n = \lVert a_n - b_n \rVert_2$. This layer is initialized with the MyCaffe.param.ContrastiveLossParameter.

Public Member Functions

| ContrastiveLossLayer (CudaDnn< T > cuda, Log log, LayerParameter p) | |

| The ContrastiveLossLayer constructor. |

| override bool | AllowForceBackward (int nBottomIdx) |

| Unlike most loss layers, the ContrastiveLossLayer can backpropagate to its first two inputs. |

| override void | LayerSetUp (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Set up the layer. |

| override void | Reshape (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Reshape the bottom (input) and top (output) blobs. |

Public Member Functions inherited from MyCaffe.layers.LossLayer< T >

| LossLayer (CudaDnn< T > cuda, Log log, LayerParameter p) | |

| The LossLayer constructor. |

| double | GetNormalizer (LossParameter.NormalizationMode normalization_mode, int nOuterNum, int nInnerNum, int nValidCount) |

| Returns the normalizer used to normalize the loss. |

| override bool | AllowForceBackward (int nBottomIdx) |

| We usually cannot backpropagate to the labels; ignore force_backward for these inputs. |

| override void | LayerSetUp (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Set up the layer. |

| override void | Reshape (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Reshape the bottom (input) and top (output) blobs. |

Public Member Functions inherited from MyCaffe.layers.Layer< T >

| Layer (CudaDnn< T > cuda, Log log, LayerParameter p) | |

| The Layer constructor. |

| void | Dispose () |

| Releases all GPU and host resources used by the Layer. |

| virtual void | ConnectLoss (LossLayer< T > layer) |

| Called to connect the loss OnLoss event to a specified layer (typically the data layer). |

| virtual BlobCollection< T > | PreProcessInput (PropertySet customInput, out int nSeqLen, BlobCollection< T > colBottom=null) |

| The PreProcessInput method allows derivative data layers to convert a property set of input data into the bottom blob collection used as input. |

| virtual bool | PreProcessInput (string strEncInput, int? nDecInput, BlobCollection< T > colBottom) |

| Preprocess the input data for the RUN phase. |

| virtual List< Tuple< string, int, double > > | PostProcessOutput (Blob< T > blobSofmtax, int nK=1) |

| The PostProcessOutput method allows derivative data layers to post-process the results, converting them back into text results (e.g., detokenizing). |

| virtual List< Tuple< string, int, double > > | PostProcessLogitsOutput (int nCurIdx, Blob< T > blobLogits, Layer< T > softmax, int nAxis, int nK=1) |

| The PostProcessLogitsOutput method allows derivative data layers to post-process the results, converting them back into text results (e.g., detokenizing). |

| virtual string | PostProcessFullOutput (Blob< T > blobSoftmax) |

| The PostProcessFullOutput method allows derivative data layers to post-process the results, usually by detokenizing the data in the blobSoftmax. |

| virtual string | PostProcessOutput (int nIdx) |

| Convert the index to the word. |

| virtual void | SetOnDebug (EventHandler< GetWorkBlobArgs< T > > fn) |

| Set the OnDebug event. |

| virtual void | ResetOnDebug (EventHandler< GetWorkBlobArgs< T > > fn) |

| Reset the OnDebug event, disabling it. |

| virtual bool | ReInitializeParameters (WEIGHT_TARGET target) |

| Re-initialize the parameters of the layer. |

| void | SetNetReshapeRequest () |

| Called by the Net when requesting a reshape. |

| void | SetPhase (Phase phase) |

| Changes the layer's Phase to the one specified. |

| void | Setup (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Implements common Layer setup functionality. |

| virtual void | SetNetParameterUsed (NetParameter np) |

| This function allows other layers to gather needed information from the NetParameters, if any, and is called when initializing the Net. |

| void | ConvertToBase (BlobCollection< T > col) |

| ConvertToBase converts any blobs in a collection that are in half size to the base size. |

| double | Forward (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Given the bottom (input) Blobs, this function computes the top (output) Blobs and the loss. |

| void | Backward (BlobCollection< T > colTop, List< bool > rgbPropagateDown, BlobCollection< T > colBottom) |

| Given the top Blob error gradients, compute the bottom Blob error gradients. |

| double | loss (int nTopIdx) |

| Returns the scalar loss associated with the top Blob at a given index. |

| void | set_loss (int nTopIdx, double dfLoss) |

| Sets the loss associated with a top Blob at a given index. |

| bool | param_propagate_down (int nParamIdx) |

| Returns whether or not the Layer should compute gradients w.r.t. the parameter at the given parameter index. |

| void | set_param_propagate_down (int nParamIdx, bool bPropagate) |

| Sets whether or not the Layer should compute gradients w.r.t. the parameter at the given parameter index. |

| void | SetEnablePassthrough (bool bEnable) |

| Enables/disables the pass-through mode. |

Protected Member Functions

| override void | dispose () |

| Releases all GPU and host resources used by the Layer. |

| override void | forward (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| The forward computation. |

| override void | backward (BlobCollection< T > colTop, List< bool > rgbPropagateDown, BlobCollection< T > colBottom) |

| Computes the contrastive loss error gradient w.r.t. the inputs. |

Protected Member Functions inherited from MyCaffe.layers.LossLayer< T >

| void | callLossEvent (Blob< T > blob) |

| This method is called by the loss layer to pass the blob data to the OnLoss event (if implemented). |

| virtual double | get_normalizer (LossParameter.NormalizationMode normalization_mode, int nValidCount) |

| Returns the normalizer used to normalize the loss. |

Protected Member Functions inherited from MyCaffe.layers.Layer< T >

| void | dispose (ref Layer< T > l) |

| Helper method used to dispose internal layers. |

| void | dispose (ref Blob< T > b) |

| Helper method used to dispose internal blobs. |

| void | dispose (ref BlobCollection< T > rg, bool bSetToNull=true) |

| Dispose the blob collection. |

| GetIterationArgs | getCurrentIteration () |

| Fires the OnGetIteration event to query the current iteration. |

| long | convert_to_full (int nCount, long hMem) |

| Convert half memory to full memory. |

| void | convert (BlobCollection< T > col) |

| Convert a collection of blobs from / to half size. |

| virtual bool | reshapeNeeded (BlobCollection< T > colBottom, BlobCollection< T > colTop, bool bReset=true) |

| Tests the shapes of both the bottom and top blobs; if they are the same as the previous sizing, returns false, indicating that no reshape is needed. |

| bool | compareShapes (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Compares the shapes of the top and bottom blobs; returns true if they are the same, otherwise false. |

| void | setShapes (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Set the internal shape sizes, used when determining if a Reshape is necessary. |

| virtual void | setup_internal_blobs (BlobCollection< T > col) |

| Derivative layers should add all internal blobs to the 'col' provided. |

| void | CheckBlobCounts (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Called by the Layer::Setup function to check that the number of bottom (input) and top (output) Blobs provided matches the number expected via the {ExactNum,Min,Max}{Bottom,Top}Blobs properties. |

| void | SetLossWeights (BlobCollection< T > colTop) |

| Called by Layer::Setup to initialize the weights associated with any top (output) Blobs in the loss function and store non-zero loss weights in the diff Blob. |

| LayerParameter | convertLayerParam (LayerParameter pChild, LayerParameter pParent) |

| Called to convert a parent LayerParameterEx, used in blob sharing, with a child layer parameter. |

| bool | shareParameter (Blob< T > b, List< int > rgMinShape, bool bAllowEndsWithComparison=false) |

| Attempts to share a parameter Blob if another parameter Blob with the same name and acceptable size is found. |

| bool | shareLayerBlob (Blob< T > b, List< int > rgMinShape) |

| Attempts to share a Layer Blob if another parameter Blob with the same name and acceptable size is found. |

| bool | shareLayerBlobs (Layer< T > layer) |

| Attempts to share the Layer blobs and internal_blobs with matching names and sizes with those in another matching layer. |

| virtual WorkspaceArgs | getWorkspace () |

| Returns the WorkspaceArgs used to share a workspace between Layers. |

| virtual bool | setWorkspace (ulong lSizeInBytes) |

| Sets the workspace size (in items) and returns true if set, false otherwise. |

| void | check_nan (Blob< T > b) |

| Checks a Blob for NaNs and throws an exception if found. |

| T | convert (double df) |

| Converts a double to a generic. |

| T | convert (float f) |

| Converts a float to a generic. |

| double | convertD (T df) |

| Converts a generic to a double value. |

| float | convertF (T df) |

| Converts a generic to a float value. |

| double[] | convertD (T[] rg) |

| Converts an array of generic values into an array of double values. |

| T[] | convert (double[] rg) |

| Converts an array of double values into an array of generic values. |

| float[] | convertF (T[] rg) |

| Converts an array of generic values into an array of float values. |

| T[] | convert (float[] rg) |

| Converts an array of float values into an array of generic values. |

| int | val_at (T[] rg, int nIdx) |

| Returns the integer value at a given index in a generic array. |

| Size | size_at (Blob< T > b) |

| Returns the Size of a given two-element Blob, such as one that stores Blob size information. |

Properties

| override int | ExactNumBottomBlobs [get] |

| Returns -1, specifying a variable number of bottoms. |

| override int | MinBottomBlobs [get] |

| Returns the minimum number of bottom blobs: featA, featB, label. |

| override int | MaxBottomBlobs [get] |

| Returns the maximum number of bottom blobs: featA, featB, label, centroids. |

| override int | ExactNumTopBlobs [get] |

| Returns -1, specifying a variable number of tops. |

| override int | MinTopBlobs [get] |

| Specifies the minimum number of required top (output) Blobs: loss. |

| override int | MaxTopBlobs [get] |

| Specifies the maximum number of top (output) Blobs: loss, matches. |

Properties inherited from MyCaffe.layers.LossLayer< T >

| override int | ExactNumBottomBlobs [get] |

| Returns the exact number of required bottom (input) Blobs: prediction, label. |

| override int | ExactNumTopBlobs [get] |

| Returns the exact number of required top (output) Blobs: loss. |

| override bool | AutoTopBlobs [get] |

| For convenience and backwards compatibility, instruct the Net to automatically allocate a single top Blob for LossLayers, into which they output their singleton loss (even if the user didn't specify one in the prototxt, etc.). |

Properties inherited from MyCaffe.layers.Layer< T >

| LayerParameter.LayerType? | parent_layer_type [get] |

| Optionally, specifies the parent layer type (e.g. LOSS, etc.). |

| virtual bool | SupportsPreProcessing [get] |

| Should return true when the PreProcessing methods are overridden. |

| virtual bool | SupportsPostProcessing [get] |

| Should return true when the PostProcessing methods are overridden. |

| virtual bool | SupportsPostProcessingLogits [get] |

| Should return true when the PostProcessingLogits methods are overridden. |

| virtual bool | SupportsPostProcessingFullOutput [get] |

| Should return true when PostProcessingFullOutput is supported. |

| BlobCollection< T > | blobs [get] |

| Returns the collection of learnable parameter Blobs for the Layer. |

| BlobCollection< T > | internal_blobs [get] |

| Returns the collection of internal Blobs used by the Layer. |

| LayerParameter | layer_param [get] |

| Returns the LayerParameter for this Layer. |

| LayerParameter.LayerType | type [get] |

| Returns the LayerType of this Layer. |

| virtual int | ExactNumBottomBlobs [get] |

| Returns the exact number of bottom (input) Blobs required by the Layer, or -1 if no exact number is required. |

| virtual int | MinBottomBlobs [get] |

| Returns the minimum number of bottom (input) Blobs required by the Layer, or -1 if no minimum number is required. |

| virtual int | MaxBottomBlobs [get] |

| Returns the maximum number of bottom (input) Blobs required by the Layer, or -1 if no maximum number is required. |

| virtual int | ExactNumTopBlobs [get] |

| Returns the exact number of top (output) Blobs required by the Layer, or -1 if no exact number is required. |

| virtual int | MinTopBlobs [get] |

| Returns the minimum number of top (output) Blobs required by the Layer, or -1 if no minimum number is required. |

| virtual int | MaxTopBlobs [get] |

| Returns the maximum number of top (output) Blobs required by the Layer, or -1 if no maximum number is required. |

| virtual bool | EqualNumBottomTopBlobs [get] |

| Returns true if the Layer requires an equal number of bottom (input) and top (output) Blobs. |

| virtual bool | AutoTopBlobs [get] |

| Return whether "anonymous" top (output) Blobs are created automatically by the Layer. |

| double | forward_timing [get] |

| Returns the timing of the last forward pass in milliseconds. |

| double | forward_timing_average [get] |

| Returns the average timing of the forward passes in milliseconds. |

| double | backward_timing [get] |

| Returns the timing of the last backward pass in milliseconds. |

| double | backward_timing_average [get] |

| Returns the average timing of the backward passes in milliseconds. |

Additional Inherited Members

Static Public Member Functions inherited from MyCaffe.layers.Layer< T >

| static Layer< T > | Create (CudaDnn< T > cuda, Log log, LayerParameter p, CancelEvent evtCancel, IXDatabaseBase db=null, TransferInput trxinput=null) |

| Create a new Layer based on the LayerParameter. |

Static Public Attributes inherited from MyCaffe.layers.LossLayer< T >

| const double | kLOG_THRESHOLD = 1e-20 |

| Specifies the minimum threshold for loss values. |

Protected Attributes inherited from MyCaffe.layers.LossLayer< T >

| bool | m_bIgnoreLabels = false |

| Set to true when labels are to be ignored. |

| LossParameter.NormalizationMode | m_normalization = LossParameter.NormalizationMode.NONE |

| Specifies the normalization mode used to normalize the loss. |

| int | m_nOuterNum = 0 |

| Specifies the outer num, such as the batch count (e.g. count(0, axis)). Each derivative class must set this value appropriately. |

| int | m_nInnerNum = 0 |

| Specifies the inner num, the product of the dimensions after the axis (e.g. count(axis + 1)). Each derivative class must set this value appropriately. |

Protected Attributes inherited from MyCaffe.layers.Layer< T >

| LayerParameter.LayerType | m_type = LayerParameter.LayerType._MAX |

| Specifies the Layer type. |

| CudaDnn< T > | m_cuda |

| Specifies the CudaDnn connection to Cuda. |

| Log | m_log |

| Specifies the Log for output. |

| LayerParameter | m_param |

| Specifies the LayerParameter describing the Layer. |

| Phase | m_phase |

| Specifies the Phase under which the Layer is run. |

| BlobCollection< T > | m_colBlobs |

| Specifies the learnable parameter Blobs of the Layer. |

| BlobCollection< T > | m_colInternalBlobs = new BlobCollection<T>() |

| Specifies internal blobs used by the layer. |

| DictionaryMap< bool > | m_rgbParamPropagateDown |

| Specifies whether or not to compute the learnable diff of each parameter Blob. |

| DictionaryMap< double > | m_rgLoss |

| Specifies the loss values that indicate whether each top (output) Blob has a non-zero weight in the objective function. |

| T | m_tOne |

| Specifies a generic type equal to 1.0. |

| T | m_tZero |

| Specifies a generic type equal to 0.0. |

| bool | m_bEnablePassthrough = false |

| Enables/disables the pass-through mode for the layer. Default = false. |

| bool | m_bUseHalfSize = false |

| Specifies that the half size of the top (if any) should be converted to the base size. |

| bool | m_bConvertTopOnFwd = false |

| Specifies whether or not the layer should convert the top on the forward pass when using half sized memory (typically only done with input data). |

| bool | m_bConvertTopOnBwd = true |

| Specifies whether or not to convert the top on the backward pass when using half sized memory (typically not done on loss layers). |

| bool | m_bConvertBottom = true |

| Specifies whether or not the layer should convert the bottom when using half sized memory. |

| bool | m_bReshapeOnForwardNeeded = true |

| Specifies whether or not the reshape on forward is needed. |

| bool | m_bNetReshapeRequest = false |

| Specifies whether or not the reshape is requested from a Net.Reshape call. |

| LayerParameter.LayerType? | m_parentLayerType = null |

| Specifies the layer type of the parent. |

Events inherited from MyCaffe.layers.LossLayer< T >

| EventHandler< LossArgs > | OnLoss |

| Specifies the loss event called on each learning cycle. |

Events inherited from MyCaffe.layers.Layer< T >

| EventHandler< WorkspaceArgs > | OnGetWorkspace |

| Specifies the OnGetWorkspace event that fires when the getWorkspace() function is called by a layer to get a shareable workspace to conserve GPU memory. |

| EventHandler< WorkspaceArgs > | OnSetWorkspace |

| Specifies the OnSetWorkspace event that fires when the setWorkspace() function is called by a layer to get a shareable workspace to conserve GPU memory. |

| EventHandler< GetIterationArgs > | OnGetIteration |

| Specifies the OnGetIteration event that fires when a layer needs to get the current iteration from the solver. |

| EventHandler< GetWorkBlobArgs< T > > | OnDebug |

| Specifies the OnGetWorkBlob event that is only supported when debugging to get a work blob from the primary Net holding this layer. |

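The ContrastiveLossLayer computes the contrastive loss $E = \frac{1}{2N} \sum_{n=1}^{N} \left[ y_n d_n^2 + (1 - y_n) \max(\mathrm{margin} - d_n, 0)^2 \right]$, where $d_n = \lVert a_n - b_n \rVert_2$. Here $a_n$ and $b_n$ are the $n$-th pair of feature vectors (featA and featB), $y_n \in \{0, 1\}$ is the similarity label (1 for a similar pair, 0 for a dissimilar pair), and $N$ is the number of pairs in the batch. This layer is initialized with the MyCaffe.param.ContrastiveLossParameter.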

This can be used to train siamese networks.

| T | Specifies the base type float or double. Using float is recommended to conserve GPU memory. |

Definition at line 33 of file ContrastiveLossLayer.cs.
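As a reference point for the loss described for this layer, the following is a minimal CPU sketch of $E = \frac{1}{2N} \sum_{n} \left[ y_n d_n^2 + (1 - y_n) \max(\mathrm{margin} - d_n, 0)^2 \right]$ with $d_n = \lVert a_n - b_n \rVert_2$, computed on plain double arrays. The class ContrastiveLossSketch and its Loss method are hypothetical names, not part of the MyCaffe API, and the sketch does not reproduce the layer's GPU implementation or any ContrastiveLossParameter options other than the margin.

```csharp
using System;

// Hypothetical helper (not part of the MyCaffe API): evaluates the contrastive
// loss on the CPU for N feature pairs stored as plain double arrays.
public static class ContrastiveLossSketch
{
    // a[n], b[n]: the n-th feature pair (featA, featB).
    // y[n]: similarity label, 1 = similar pair, 0 = dissimilar pair.
    public static double Loss(double[][] a, double[][] b, double[] y, double margin)
    {
        int N = a.Length;
        double sum = 0.0;

        for (int n = 0; n < N; n++)
        {
            // Squared Euclidean distance d_n^2 = ||a_n - b_n||_2^2.
            double d2 = 0.0;
            for (int i = 0; i < a[n].Length; i++)
            {
                double diff = a[n][i] - b[n][i];
                d2 += diff * diff;
            }
            double d = Math.Sqrt(d2);

            // Similar pairs are penalized by d^2; dissimilar pairs are penalized
            // only while they are closer together than the margin.
            double hinge = Math.Max(margin - d, 0.0);
            sum += y[n] * d2 + (1.0 - y[n]) * hinge * hinge;
        }

        return sum / (2.0 * N);
    }
}
```

For example, a similar pair at distance 5 contributes 25 to the sum, while a dissimilar pair that is already farther apart than the margin contributes nothing.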

| MyCaffe.layers.ContrastiveLossLayer< T >.ContrastiveLossLayer (CudaDnn< T > cuda, Log log, LayerParameter p) |

The ContrastiveLossLayer constructor.

| cuda | Specifies the CudaDnn connection to Cuda. |

| log | Specifies the Log for output. |

| p | provides LossParameter loss_param, with options: |

Definition at line 58 of file ContrastiveLossLayer.cs.

| override bool MyCaffe.layers.ContrastiveLossLayer< T >.AllowForceBackward (int nBottomIdx) | virtual |

Unlike most loss layers, in the ContrastiveLossLayer we can backpropagate to the first two inputs.

Reimplemented from MyCaffe.layers.Layer< T >.

Definition at line 183 of file ContrastiveLossLayer.cs.

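| override void MyCaffe.layers.ContrastiveLossLayer< T >.backward (BlobCollection< T > colTop, List< bool > rgbPropagateDown, BlobCollection< T > colBottom) | protected, virtual |

Computes the contrastive loss error gradient w.r.t. the inputs.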

Computes the gradients with respect to the two input vectors (bottom[0] and bottom[1]), but not the similarity label (bottom[2]).

| colTop | top output blob vector (length 1), providing the error gradient with respect to the outputs. |

| rgbPropagateDown | see Layer::Backward. Gradients are computed only for bottom[0] and bottom[1]; rgbPropagateDown[2] must be false because gradients cannot be computed with respect to the similarity labels. |

| colBottom | bottom input blob vector (featA, featB, label and, when centroid learning is enabled, centroids). |

Implements MyCaffe.layers.Layer< T >.

Definition at line 476 of file ContrastiveLossLayer.cs.
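To make the backward description concrete, here is a minimal CPU sketch of the gradients for bottom[0] and bottom[1], assuming the non-legacy loss $E = \frac{1}{2N} \sum_{n} \left[ y_n d_n^2 + (1 - y_n) \max(\mathrm{margin} - d_n, 0)^2 \right]$ with $d_n = \lVert a_n - b_n \rVert_2$. Differentiating gives $\partial E / \partial a_n = c_n (a_n - b_n)$ and $\partial E / \partial b_n = -c_n (a_n - b_n)$, where $c_n = \left( y_n - (1 - y_n)(\mathrm{margin} - d_n)/d_n \right)/N$ when $\mathrm{margin} - d_n > 0$ and $c_n = y_n / N$ otherwise, both scaled by the top gradient. The class name, the parameter layout and the small eps added to $d_n$ (a divide-by-zero guard similar to the one in the original Caffe layer) are assumptions; this is not MyCaffe's CUDA code.

```csharp
using System;

// Hypothetical CPU sketch (not MyCaffe's implementation) of the contrastive
// loss gradient w.r.t. featA (bottom[0]) and featB (bottom[1]).
// No gradient is produced for the labels (bottom[2]).
public static class ContrastiveGradSketch
{
    // gradA and gradB must be preallocated with the same shape as a and b.
    // topDiff is the incoming gradient of the scalar loss (usually the loss weight).
    public static void Backward(double[][] a, double[][] b, double[] y, double margin,
                                double topDiff, double[][] gradA, double[][] gradB,
                                double eps = 1e-4)
    {
        int N = a.Length;
        for (int n = 0; n < N; n++)
        {
            int D = a[n].Length;

            double d2 = 0.0;
            for (int i = 0; i < D; i++)
            {
                double diff = a[n][i] - b[n][i];
                d2 += diff * diff;
            }
            double d = Math.Sqrt(d2);

            // Similar-pair term: d(d^2)/da contributes y_n * (a_n - b_n).
            double c = y[n];

            // Dissimilar-pair term: only active while the pair is inside the margin.
            double mdist = margin - d;
            if (mdist > 0.0)
                c -= (1.0 - y[n]) * mdist / (d + eps);   // eps guards against d == 0

            c *= topDiff / N;

            for (int i = 0; i < D; i++)
            {
                double diff = a[n][i] - b[n][i];
                gradA[n][i] = c * diff;    // dE/da_n
                gradB[n][i] = -c * diff;   // dE/db_n has the opposite sign
            }
        }
    }
}
```

The opposite signs for the two inputs follow from $d_n$ depending only on $a_n - b_n$, which is also why the layer can backpropagate to the first two inputs but not to the label.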

| override void MyCaffe.layers.ContrastiveLossLayer< T >.dispose () | protected, virtual |

Releases all GPU and host resources used by the Layer.

Reimplemented from MyCaffe.layers.Layer< T >.

Definition at line 80 of file ContrastiveLossLayer.cs.

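| override void MyCaffe.layers.ContrastiveLossLayer< T >.forward (BlobCollection< T > colBottom, BlobCollection< T > colTop) | protected, virtual |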

The forward computation.

| colBottom | bottom input blob vector (length 3) |

| colTop | top output blob vector (length 1) |

This can be used to train siamese networks.

Implements MyCaffe.layers.Layer< T >.

Definition at line 265 of file ContrastiveLossLayer.cs.

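| override void MyCaffe.layers.ContrastiveLossLayer< T >.LayerSetUp (BlobCollection< T > colBottom, BlobCollection< T > colTop) | virtual |

Set up the layer.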

| colBottom | Specifies the collection of bottom (input) Blobs. |

| colTop | Specifies the collection of top (output) Blobs. |

Implements MyCaffe.layers.Layer< T >.

Definition at line 196 of file ContrastiveLossLayer.cs.

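| override void MyCaffe.layers.ContrastiveLossLayer< T >.Reshape (BlobCollection< T > colBottom, BlobCollection< T > colTop) | virtual |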

Reshape the bottom (input) and top (output) blobs.

| colBottom | Specifies the collection of bottom (input) Blobs. |

| colTop | Specifies the collection of top (output) Blobs. |

Implements MyCaffe.layers.Layer< T >.

Definition at line 219 of file ContrastiveLossLayer.cs.
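Because the contrastive loss pairs $a_n$, $b_n$ and $y_n$ for every $n$, the two feature bottoms presumably have to agree in shape and the label bottom has to hold one value per pair. The sketch below is a hypothetical illustration of such a check on plain dimension arrays (ContrastiveShapeSketch and CheckShapes are invented names); it is not the validation that MyCaffe's LayerSetUp or Reshape actually performs, which may be stricter or looser.

```csharp
using System;

// Hypothetical shape check (not MyCaffe code). Shapes are dimension arrays
// such as { N, C } or { N, C, 1, 1 }, with N = number of pairs.
public static class ContrastiveShapeSketch
{
    // Returns N, the number of pairs, or throws if the shapes are inconsistent.
    public static int CheckShapes(int[] featA, int[] featB, int[] label)
    {
        // featA and featB must match axis by axis so that a_n - b_n is defined.
        if (featA.Length != featB.Length)
            throw new ArgumentException("featA and featB must have the same number of axes.");
        for (int i = 0; i < featA.Length; i++)
        {
            if (featA[i] != featB[i])
                throw new ArgumentException($"featA and featB differ on axis {i}.");
        }

        // The label blob must provide exactly one similarity value per pair.
        int N = featA[0];
        int labelCount = 1;
        foreach (int dim in label)
            labelCount *= dim;
        if (labelCount != N)
            throw new ArgumentException($"Expected {N} labels, found {labelCount}.");

        return N;
    }
}
```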

| override int MyCaffe.layers.ContrastiveLossLayer< T >.ExactNumBottomBlobs | get |

Returns -1, specifying a variable number of bottoms.

Definition at line 130 of file ContrastiveLossLayer.cs.

| override int MyCaffe.layers.ContrastiveLossLayer< T >.ExactNumTopBlobs | get |

Returns -1 specifying a variable number of tops.

Definition at line 158 of file ContrastiveLossLayer.cs.

| override int MyCaffe.layers.ContrastiveLossLayer< T >.MaxBottomBlobs | get |

Returns the maximum number of bottom blobs: featA, featB, label, centroids.

The centroids are calculated for each class by the DecodeLayer and are only used when 'enable_centroid_learning' = True.

Definition at line 150 of file ContrastiveLossLayer.cs.

| override int MyCaffe.layers.ContrastiveLossLayer< T >.MaxTopBlobs | get |

Specifies the maximum number of top (output) Blobs: loss, matches.

Definition at line 174 of file ContrastiveLossLayer.cs.

| override int MyCaffe.layers.ContrastiveLossLayer< T >.MinBottomBlobs | get |

Returns the minimum number of bottom blobs: featA, featB, label.

Definition at line 138 of file ContrastiveLossLayer.cs.

| override int MyCaffe.layers.ContrastiveLossLayer< T >.MinTopBlobs | get |

Specifies the minimum number of required top (output) Blobs: loss

Definition at line 166 of file ContrastiveLossLayer.cs.
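Taken together, these properties mean the layer accepts three or four bottoms and one or two tops. The sketch below shows how such limits could be checked, assuming the Layer convention that -1 means no constraint and assuming the concrete values 3, 4, 1 and 2 inferred from the blob names listed in each property description. BlobCountSketch and CountsOk are hypothetical names; this is not MyCaffe's CheckBlobCounts implementation.

```csharp
using System;

// Hypothetical sketch (not MyCaffe code) of checking bottom/top blob counts
// against Exact/Min/Max limits, where -1 means "no constraint".
public static class BlobCountSketch
{
    public const int ExactNumBottomBlobs = -1;
    public const int MinBottomBlobs = 3;   // featA, featB, label
    public const int MaxBottomBlobs = 4;   // featA, featB, label, centroids
    public const int ExactNumTopBlobs = -1;
    public const int MinTopBlobs = 1;      // loss
    public const int MaxTopBlobs = 2;      // loss, matches

    public static bool CountsOk(int nBottom, int nTop)
    {
        return InRange(nBottom, ExactNumBottomBlobs, MinBottomBlobs, MaxBottomBlobs)
            && InRange(nTop, ExactNumTopBlobs, MinTopBlobs, MaxTopBlobs);
    }

    // Applies each limit only when it is not -1.
    private static bool InRange(int n, int exact, int min, int max)
    {
        if (exact >= 0 && n != exact) return false;
        if (min >= 0 && n < min) return false;
        if (max >= 0 && n > max) return false;
        return true;
    }
}
```

Because the exact counts are -1, only the minimum and maximum limits take effect, which is what lets the optional centroids bottom and matches top be present or absent.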