|

MyCaffe

1.12.2.41

Deep learning software for Windows C# programmers.

|

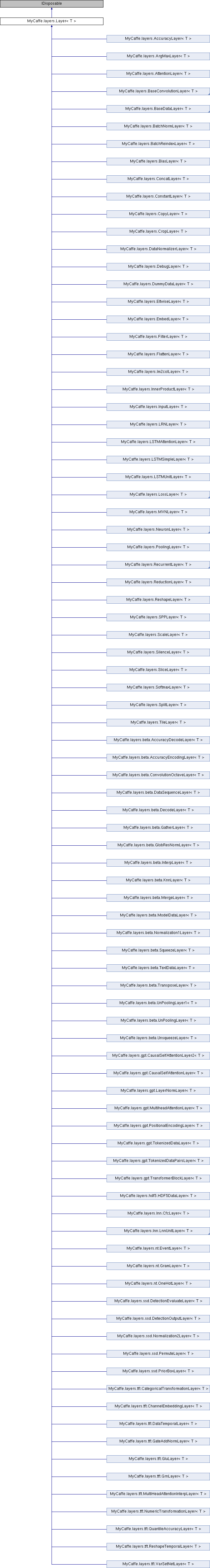

An interface for the units of computation which can be composed into a Net. More...

Public Member Functions | |

| Layer (CudaDnn< T > cuda, Log log, LayerParameter p) | |

| The Layer constructor. More... | |

| void | Dispose () |

| Releases all GPU and host resources used by the Layer. More... | |

| virtual void | ConnectLoss (LossLayer< T > layer) |

| Called to connect the loss OnLoss event to a specified layer (typically the data layer). More... | |

| virtual BlobCollection< T > | PreProcessInput (PropertySet customInput, out int nSeqLen, BlobCollection< T > colBottom=null) |

| PreProcessInput allows derivative data layers to convert a property set of input data into the bottom blob collection used as input. More... | |

| virtual bool | PreProcessInput (string strEncInput, int? nDecInput, BlobCollection< T > colBottom) |

| Preprocess the input data for the RUN phase. More... | |

| virtual List< Tuple< string, int, double > > | PostProcessOutput (Blob< T > blobSofmtax, int nK=1) |

| The PostProcessOutput allows derivative data layers to post-process the results, converting them back into text results (e.g., detokenizing). More... | |

| virtual List< Tuple< string, int, double > > | PostProcessLogitsOutput (int nCurIdx, Blob< T > blobLogits, Layer< T > softmax, int nAxis, int nK=1) |

| The PostProcessLogitsOutput allows derivative data layers to post-process the results, converting them back into text results (e.g., detokenizing). More... | |

| virtual string | PostProcessFullOutput (Blob< T > blobSoftmax) |

| The PostProcessFullOutput allows derivative data layers to post-process the results, usually by detokenizing the data in the blobSoftmax. More... | |

| virtual string | PostProcessOutput (int nIdx) |

| Convert the index to the word. More... | |

| virtual void | SetOnDebug (EventHandler< GetWorkBlobArgs< T > > fn) |

| Set the OnDebug event. More... | |

| virtual void | ResetOnDebug (EventHandler< GetWorkBlobArgs< T > > fn) |

| Reset the OnDebug event, disabling it. More... | |

| virtual bool | ReInitializeParameters (WEIGHT_TARGET target) |

| Re-initialize the parameters of the layer. More... | |

| void | SetNetReshapeRequest () |

| Called by the Net when requesting a reshape. More... | |

| void | SetPhase (Phase phase) |

| Changes the layer's Phase to the one specified. More... | |

| void | Setup (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Implements common Layer setup functionality. More... | |

| abstract void | LayerSetUp (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Performs Layer specific setup. Derived layers should override this function as well as the Reshape function. More... | |

| virtual void | SetNetParameterUsed (NetParameter np) |

| This function allows other layers to gather needed information from the NetParameters, if any, and is called when initializing the Net. More... | |

| abstract void | Reshape (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Adjust the shapes of top blobs and internal buffers to accommodate the shapes of the bottom blobs. More... | |

| void | ConvertToBase (BlobCollection< T > col) |

| ConvertToBase converts any blobs in a collection that are in half size to the base size. More... | |

| double | Forward (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Given the bottom (input) Blobs, this function computes the top (output) Blobs and the loss. More... | |

| void | Backward (BlobCollection< T > colTop, List< bool > rgbPropagateDown, BlobCollection< T > colBottom) |

| Given the top Blob error gradients, compute the bottom Blob error gradients. More... | |

| double | loss (int nTopIdx) |

| Returns the scalar loss associated with the top Blob at a given index. More... | |

| void | set_loss (int nTopIdx, double dfLoss) |

| Sets the loss associated with a top Blob at a given index. More... | |

| virtual bool | AllowForceBackward (int nBottomIdx) |

| Return whether to allow force_backward for a given bottom (input) Blob index. More... | |

| bool | param_propagate_down (int nParamIdx) |

| Returns whether or not the Layer should compute gradients w.r.t. a parameter at a particular index given by a parameter index. More... | |

| void | set_param_propagate_down (int nParamIdx, bool bPropagate) |

| Sets whether or not the Layer should compute gradients w.r.t. a parameter at a particular index given by a parameter index. More... | |

| void | SetEnablePassthrough (bool bEnable) |

| Enables/disables the pass-through mode. More... | |

Static Public Member Functions | |

| static Layer< T > | Create (CudaDnn< T > cuda, Log log, LayerParameter p, CancelEvent evtCancel, IXDatabaseBase db=null, TransferInput trxinput=null) |

| Create a new Layer based on the LayerParameter. More... | |

Protected Member Functions | |

| virtual void | dispose () |

| Releases all GPU and host resources used by the Layer. More... | |

| void | dispose (ref Layer< T > l) |

| Helper method used to dispose internal layers. More... | |

| void | dispose (ref Blob< T > b) |

| Helper method used to dispose internal blobs. More... | |

| void | dispose (ref BlobCollection< T > rg, bool bSetToNull=true) |

| Dispose the blob collection. More... | |

| GetIterationArgs | getCurrentIteration () |

| Fires the OnGetIteration event to query the current iteration. More... | |

| long | convert_to_full (int nCount, long hMem) |

| Convert half memory to full memory. More... | |

| void | convert (BlobCollection< T > col) |

| Convert a collection of blobs from / to half size. More... | |

| virtual bool | reshapeNeeded (BlobCollection< T > colBottom, BlobCollection< T > colTop, bool bReset=true) |

| Tests the shapes of both the bottom and top blobs and if they are the same as the previous sizing, returns false indicating that no reshape is needed. More... | |

| bool | compareShapes (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Compare the shapes of the top and bottom and if the same, return true, otherwise false. More... | |

| void | setShapes (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Set the internal shape sizes - used when determining if a Reshape is necessary. More... | |

| abstract void | forward (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| This forward abstract function must be overridden by each derived Layer class to compute the top (output) Blobs for this layer. More... | |

| abstract void | backward (BlobCollection< T > colTop, List< bool > rgbPropagateDown, BlobCollection< T > colBottom) |

| This backward abstract function must be overridden by each derived Layer class to compute the bottom (input) Blob diffs for this Layer. More... | |

| virtual void | setup_internal_blobs (BlobCollection< T > col) |

| Derivative layers should add all internal blobs to the 'col' provided. More... | |

| void | CheckBlobCounts (BlobCollection< T > colBottom, BlobCollection< T > colTop) |

| Called by the Layer::Setup function to check that the number of bottom (input) and top (output) Blobs provided matches the number expected via the {ExactNum,Min,Max}{Bottom,Top}Blobs functions. More... | |

| void | SetLossWeights (BlobCollection< T > colTop) |

| Called by Layer::Setup to initialize the weights associated with any top (output) Blobs in the loss function and store non-zero loss weights in the diff Blob. More... | |

| LayerParameter | convertLayerParam (LayerParameter pChild, LayerParameter pParent) |

| Called to convert a parent LayerParameterEx, used in blob sharing, with a child layer parameter. More... | |

| bool | shareParameter (Blob< T > b, List< int > rgMinShape, bool bAllowEndsWithComparison=false) |

| Attempts to share a parameter Blob if another parameter Blob with the same name and acceptable size is found. More... | |

| bool | shareLayerBlob (Blob< T > b, List< int > rgMinShape) |

| Attempts to share a Layer Blob if another parameter Blob with the same name and acceptable size is found. More... | |

| bool | shareLayerBlobs (Layer< T > layer) |

| Attempts to share the Layer blobs and internal_blobs with matching names and sizes with those in another matching layer. More... | |

| virtual WorkspaceArgs | getWorkspace () |

| Returns the WorkspaceArgs used to share a workspace between Layers. More... | |

| virtual bool | setWorkspace (ulong lSizeInBytes) |

| Sets the workspace size (in items) and returns true if set, false otherwise. More... | |

| void | check_nan (Blob< T > b) |

| Checks a Blob for NaNs and throws an exception if found. More... | |

| T | convert (double df) |

| Converts a double to a generic. More... | |

| T | convert (float f) |

| Converts a float to a generic. More... | |

| double | convertD (T df) |

| Converts a generic to a double value. More... | |

| float | convertF (T df) |

| Converts a generic to a float value. More... | |

| double[] | convertD (T[] rg) |

| Converts an array of generic values into an array of double values. More... | |

| T[] | convert (double[] rg) |

| Converts an array of double values into an array of generic values. More... | |

| float[] | convertF (T[] rg) |

| Converts an array of generic values into an array of float values. More... | |

| T[] | convert (float[] rg) |

| Converts an array of float values into an array of generic values. More... | |

| int | val_at (T[] rg, int nIdx) |

| Returns the integer value at a given index in a generic array. More... | |

| Size | size_at (Blob< T > b) |

| Returns the Size of a given two element Blob, such as one that stores Blob size information. More... | |

Protected Attributes | |

| LayerParameter.LayerType | m_type = LayerParameter.LayerType._MAX |

| Specifies the Layer type. More... | |

| CudaDnn< T > | m_cuda |

| Specifies the CudaDnn connection to Cuda. More... | |

| Log | m_log |

| Specifies the Log for output. More... | |

| LayerParameter | m_param |

| Specifies the LayerParameter describing the Layer. More... | |

| Phase | m_phase |

| Specifies the Phase under which the Layer is run. More... | |

| BlobCollection< T > | m_colBlobs |

| Specifies the learnable parameter Blobs of the Layer. More... | |

| BlobCollection< T > | m_colInternalBlobs = new BlobCollection<T>() |

| Specifies internal blobs used by the layer. More... | |

| DictionaryMap< bool > | m_rgbParamPropagateDown |

| Specifies whether or not to compute the learnable diff of each parameter Blob. More... | |

| DictionaryMap< double > | m_rgLoss |

| Specifies the loss values that indicate whether each top (output) Blob has a non-zero weight in the objective function. More... | |

| T | m_tOne |

| Specifies a generic type equal to 1.0. More... | |

| T | m_tZero |

| Specifies a generic type equal to 0.0. More... | |

| bool | m_bEnablePassthrough = false |

| Enables/disables the pass-through mode for the layer. Default = false. More... | |

| bool | m_bUseHalfSize = false |

| Specifies that the half size of the top (if any) should be converted to the base size. More... | |

| bool | m_bConvertTopOnFwd = false |

| Specifies whether or not the layer should convert the top on the forward pass when using half sized memory (typically only done with input data). More... | |

| bool | m_bConvertTopOnBwd = true |

| Specifies whether or not to convert the top on the backward pass when using half sized memory (typically not done on loss layers). More... | |

| bool | m_bConvertBottom = true |

| Specifies whether or not the layer should convert the bottom when using half sized memory. More... | |

| bool | m_bReshapeOnForwardNeeded = true |

| Specifies whether or not the reshape on forward is needed or not. More... | |

| bool | m_bNetReshapeRequest = false |

| Specifies whether the reshape is requested from a Net.Reshape call or not. More... | |

| LayerParameter.LayerType? | m_parentLayerType = null |

| Specifies the layer type of the parent. More... | |

Properties | |

| LayerParameter.LayerType? | parent_layer_type [get] |

| Optionally, specifies the parent layer type (e.g. LOSS, etc.) More... | |

| virtual bool | SupportsPreProcessing [get] |

| Should return true when PreProcessing methods are overridden. More... | |

| virtual bool | SupportsPostProcessing [get] |

| Should return true when PostProcessing methods are overridden. More... | |

| virtual bool | SupportsPostProcessingLogits [get] |

| Should return true when PostProcessingLogits methods are overridden. More... | |

| virtual bool | SupportsPostProcessingFullOutput [get] |

| Should return true when PostProcessingFullOutput is supported. More... | |

| BlobCollection< T > | blobs [get] |

| Returns the collection of learnable parameter Blobs for the Layer. More... | |

| BlobCollection< T > | internal_blobs [get] |

| Returns the collection of internal Blobs used by the Layer. More... | |

| LayerParameter | layer_param [get] |

| Returns the LayerParameter for this Layer. More... | |

| LayerParameter.LayerType | type [get] |

| Returns the LayerType of this Layer. More... | |

| virtual int | ExactNumBottomBlobs [get] |

| Returns the exact number of bottom (input) Blobs required by the Layer, or -1 if no exact number is required. More... | |

| virtual int | MinBottomBlobs [get] |

| Returns the minimum number of bottom (input) Blobs required by the Layer, or -1 if no minimum number is required. More... | |

| virtual int | MaxBottomBlobs [get] |

| Returns the maximum number of bottom (input) Blobs required by the Layer, or -1 if no maximum number is required. More... | |

| virtual int | ExactNumTopBlobs [get] |

| Returns the exact number of top (output) Blobs required by the Layer, or -1 if no exact number is required. More... | |

| virtual int | MinTopBlobs [get] |

| Returns the minimum number of top (output) Blobs required by the Layer, or -1 if no minimum number is required. More... | |

| virtual int | MaxTopBlobs [get] |

| Returns the maximum number of top (output) Blobs required by the Layer, or -1 if no maximum number is required. More... | |

| virtual bool | EqualNumBottomTopBlobs [get] |

| Returns true if the Layer requires an equal number of bottom (input) and top (output) Blobs. More... | |

| virtual bool | AutoTopBlobs [get] |

| Return whether "anonymous" top (output) Blobs are created automatically by the Layer. More... | |

| double | forward_timing [get] |

| Returns the timing of the last forward pass in milliseconds. More... | |

| double | forward_timing_average [get] |

| Returns the average timing of the forward passes in milliseconds. More... | |

| double | backward_timing [get] |

| Returns the timing of the last backward pass in milliseconds. More... | |

| double | backward_timing_average [get] |

| Returns the average timing of the backward passes in milliseconds. More... | |

Events | |

| EventHandler< WorkspaceArgs > | OnGetWorkspace |

| Specifies the OnGetWorkspace event that fires when the getWorkspace() function is called by a layer to get a shareable workspace to conserve GPU memory. More... | |

| EventHandler< WorkspaceArgs > | OnSetWorkspace |

| Specifies the OnSetWorkspace event that fires when the setWorkspace() function is called by a layer to get a shareable workspace to conserve GPU memory. More... | |

| EventHandler< GetIterationArgs > | OnGetIteration |

| Specifies the OnGetIteration event that fires when a layer needs to get the current iteration from the solver. More... | |

| EventHandler< GetWorkBlobArgs< T > > | OnDebug |

| Specifies the OnGetWorkBlob event that is only supported when debugging to get a work blob from the primary Net holding this layer. More... | |

An interface for the units of computation which can be composed into a Net.

Layers must implement an override of the forward function, in which they take their input (bottom) Blobs (if any) and compute their output Blobs (if any). They may also implement an override of the backward function, in which they compute the error gradients with respect to their input Blobs, given the error gradients with respect to their output Blobs.

| T | Specifies the base type float or double. Using float is recommended to conserve GPU memory. |
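The forward/backward contract described above can be illustrated with a minimal sketch. It deliberately does not use the MyCaffe types: `SketchLayer` and `SketchReLULayer` are hypothetical stand-ins, and a plain `float[]` takes the place of a Blob. The only point is to show a public wrapper delegating to overridden protected methods.

```csharp
using System;

// Hypothetical, simplified stand-in for the Layer base class (not the MyCaffe type).
// A "blob" here is just a float array holding either data or diff values.
public abstract class SketchLayer
{
    // Public wrapper: delegates to the derived layer's forward computation.
    public void Forward(float[] bottom, float[] top)
    {
        forward(bottom, top);
    }

    // Public wrapper: only runs the derived backward computation when requested.
    public void Backward(float[] topDiff, bool propagateDown, float[] bottomData, float[] bottomDiff)
    {
        if (propagateDown)
            backward(topDiff, bottomData, bottomDiff);
    }

    // Each derived layer must supply both computations.
    protected abstract void forward(float[] bottom, float[] top);
    protected abstract void backward(float[] topDiff, float[] bottomData, float[] bottomDiff);
}

// Example derived layer: rectified linear unit, y = max(0, x).
public sealed class SketchReLULayer : SketchLayer
{
    protected override void forward(float[] bottom, float[] top)
    {
        for (int i = 0; i < bottom.Length; i++)
            top[i] = Math.Max(0f, bottom[i]);
    }

    // The gradient passes through only where the input was positive.
    protected override void backward(float[] topDiff, float[] bottomData, float[] bottomDiff)
    {
        for (int i = 0; i < bottomData.Length; i++)
            bottomDiff[i] = bottomData[i] > 0f ? topDiff[i] : 0f;
    }
}
```

A real derived Layer would additionally override LayerSetUp and Reshape and operate on BlobCollection< T > instances rather than arrays.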

| MyCaffe.layers.Layer< T >.Layer | ( | CudaDnn< T > | cuda, |

| Log | log, | ||

| LayerParameter | p | ||

| ) |

The Layer constructor.

Setup code for derivative classes should go into an override of the LayerSetUp function, where the dimensions of the Blobs are provided to the Layer.

| cuda | Specifies the CudaDnn connection to Cuda. |

| log | Specifies the Log for output. |

| p | Specifies the LayerParameter that contains the settings of the Layer. |

| virtual bool MyCaffe.layers.Layer< T >.AllowForceBackward | ( | int | nBottomIdx | ) |

Return whether to allow force_backward for a given bottom (input) Blob index.

If AllowForceBackward(i) == false, the force_backward setting will be ignored and backpropagation to Blob i occurs only if it needs gradient information (as is done when force_backward == false).

| nBottomIdx | Specifies the index of the bottom (input) item to force. |

Reimplemented in MyCaffe.layers.beta.TripletLossLayer< T >, MyCaffe.layers.ssd.SmoothL1LossLayer< T >, MyCaffe.layers.QuantileLossLayer< T >, MyCaffe.layers.ContrastiveLossLayer< T >, MyCaffe.layers.EuclideanLossLayer< T >, MyCaffe.layers.LossLayer< T >, MyCaffe.layers.LSTMUnitLayer< T >, and MyCaffe.layers.RecurrentLayer< T >.
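The rule above reduces to a small boolean decision. The following is a sketch of that rule only; `ForceBackwardSketch.ShouldPropagate` is a hypothetical helper and not part of the MyCaffe API, and how the Net actually gathers its three inputs is not shown here.

```csharp
// Hypothetical helper (not a MyCaffe API) expressing the rule described above.
public static class ForceBackwardSketch
{
    // needsGradient:      the bottom Blob needs gradient information on its own.
    // forceBackward:      the net-level force_backward setting.
    // allowForceBackward: the layer's AllowForceBackward(i) result for this bottom index.
    public static bool ShouldPropagate(bool needsGradient, bool forceBackward, bool allowForceBackward)
    {
        // force_backward only takes effect when the layer allows it for this bottom.
        return needsGradient || (forceBackward && allowForceBackward);
    }
}
```

Loss layers are a typical case for returning false on a label input, since gradients with respect to labels are usually not meaningful.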

| void MyCaffe.layers.Layer< T >.Backward | ( | BlobCollection< T > | colTop, |

| List< bool > | rgbPropagateDown, | ||

| BlobCollection< T > | colBottom | ||

| ) |

Given the top Blob error gradients, compute the bottom Blob error gradients.

The Backward function calls the overridden backward function implemented by each specific Layer derivative to compute the bottom (input) Blob diffs given the top (output) Blob diffs.

| colTop | Specifies a collection of top (output) Blobs, whose diff fields store the gradient of the error with respect to themselves. |

| rgbPropagateDown | Specifies a List with equal length to the bottom, with each element indicating whether or not to propagate the error gradients down to the bottom Blob at the corresponding index. |

| colBottom | Specifies a collection of bottom (input) Blobs, whose diff fields are filled with the gradient of the error with respect to themselves after the Backward function is run. |

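| protected abstract void MyCaffe.layers.Layer< T >.backward | ( | BlobCollection< T > | colTop, |

| List< bool > | rgbPropagateDown, | ||

| BlobCollection< T > | colBottom | ||

| ) |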

This backward abstract function must be overridden by each derived Layer class to compute the bottom (input) Blob diffs for this Layer.

| colTop | Specifies a collection of top (output) Blobs, whose diff fields store the gradient of the error with respect to themselves. |

| rgbPropagateDown | Specifies a List with equal length to the bottom, with each element indicating whether or not to propagate the error gradients down to the bottom Blob at the corresponding index. |

| colBottom | Specifies a collection of bottom (input) Blobs, whose diff fields are filled with the gradient of the error with respect to themselves after the Backward function is run. |

Implemented in MyCaffe.layers.beta.AccuracyDecodeLayer< T >, MyCaffe.layers.beta.AccuracyEncodingLayer< T >, MyCaffe.layers.AttentionLayer< T >, MyCaffe.layers.beta.ConvolutionOctaveLayer< T >, MyCaffe.layers.CopyLayer< T >, MyCaffe.layers.beta.DataSequenceLayer< T >, MyCaffe.layers.beta.DecodeLayer< T >, MyCaffe.layers.beta.GatherLayer< T >, MyCaffe.layers.beta.GlobResNormLayer< T >, MyCaffe.layers.beta.InterpLayer< T >, MyCaffe.layers.beta.KnnLayer< T >, MyCaffe.layers.LSTMAttentionLayer< T >, MyCaffe.layers.beta.MeanErrorLossLayer< T >, MyCaffe.layers.beta.MergeLayer< T >, MyCaffe.layers.beta.MishLayer< T >, MyCaffe.layers.beta.ModelDataLayer< T >, MyCaffe.layers.beta.Normalization1Layer< T >, MyCaffe.layers.beta.SerfLayer< T >, MyCaffe.layers.beta.SqueezeLayer< T >, MyCaffe.layers.beta.TextDataLayer< T >, MyCaffe.layers.beta.TransposeLayer< T >, MyCaffe.layers.beta.TripletLossLayer< T >, MyCaffe.layers.beta.UnPoolingLayer< T >, MyCaffe.layers.beta.UnPoolingLayer1< T >, MyCaffe.layers.beta.UnsqueezeLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer2< T >, MyCaffe.layers.gpt.GeluLayer< T >, MyCaffe.layers.gpt.LayerNormLayer< T >, MyCaffe.layers.gpt.MultiheadAttentionLayer< T >, MyCaffe.layers.gpt.NLLLossLayer< T >, MyCaffe.layers.gpt.PositionalEncodingLayer< T >, MyCaffe.layers.gpt.TokenizedDataLayer< T >, MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >, MyCaffe.layers.gpt.TransformerBlockLayer< T >, MyCaffe.layers.hdf5.HDF5DataLayer< T >, MyCaffe.layers.lnn.CfcLayer< T >, MyCaffe.layers.lnn.CfcUnitLayer< T >, MyCaffe.layers.lnn.LeCunLayer< T >, MyCaffe.layers.lnn.LtcUnitLayer< T >, MyCaffe.layers.lnn.SiLULayer< T >, MyCaffe.layers.lnn.SoftPlusLayer< T >, MyCaffe.layers.nt.EventLayer< T >, MyCaffe.layers.nt.GramLayer< T >, MyCaffe.layers.nt.OneHotLayer< T >, MyCaffe.layers.nt.ScalarLayer< T >, MyCaffe.layers.nt.TVLossLayer< T >, MyCaffe.layers.ssd.DetectionEvaluateLayer< T >, 
MyCaffe.layers.ssd.DetectionOutputLayer< T >, MyCaffe.layers.ssd.MultiBoxLossLayer< T >, MyCaffe.layers.ssd.Normalization2Layer< T >, MyCaffe.layers.ssd.PermuteLayer< T >, MyCaffe.layers.ssd.PriorBoxLayer< T >, MyCaffe.layers.ssd.SmoothL1LossLayer< T >, MyCaffe.layers.tft.CategoricalTransformationLayer< T >, MyCaffe.layers.tft.ChannelEmbeddingLayer< T >, MyCaffe.layers.tft.DataTemporalLayer< T >, MyCaffe.layers.tft.GateAddNormLayer< T >, MyCaffe.layers.tft.GluLayer< T >, MyCaffe.layers.tft.GrnLayer< T >, MyCaffe.layers.tft.MultiHeadAttentionInterpLayer< T >, MyCaffe.layers.tft.NumericTransformationLayer< T >, MyCaffe.layers.tft.QuantileAccuracyLayer< T >, MyCaffe.layers.QuantileLossLayer< T >, MyCaffe.layers.tft.ReshapeTemporalLayer< T >, MyCaffe.layers.tft.VarSetNetLayer< T >, MyCaffe.layers.AbsValLayer< T >, MyCaffe.layers.AccuracyLayer< T >, MyCaffe.layers.ArgMaxLayer< T >, MyCaffe.layers.BaseDataLayer< T >, MyCaffe.layers.BatchNormLayer< T >, MyCaffe.layers.BatchReindexLayer< T >, MyCaffe.layers.BiasLayer< T >, MyCaffe.layers.BNLLLayer< T >, MyCaffe.layers.ClipLayer< T >, MyCaffe.layers.ConcatLayer< T >, MyCaffe.layers.ConstantLayer< T >, MyCaffe.layers.ContrastiveLossLayer< T >, MyCaffe.layers.ConvolutionLayer< T >, MyCaffe.layers.CropLayer< T >, MyCaffe.layers.DataNormalizerLayer< T >, MyCaffe.layers.DebugLayer< T >, MyCaffe.layers.DeconvolutionLayer< T >, MyCaffe.layers.DropoutLayer< T >, MyCaffe.layers.DummyDataLayer< T >, MyCaffe.layers.EltwiseLayer< T >, MyCaffe.layers.ELULayer< T >, MyCaffe.layers.EmbedLayer< T >, MyCaffe.layers.EuclideanLossLayer< T >, MyCaffe.layers.ExpLayer< T >, MyCaffe.layers.FilterLayer< T >, MyCaffe.layers.FlattenLayer< T >, MyCaffe.layers.GradientScaleLayer< T >, MyCaffe.layers.HingeLossLayer< T >, MyCaffe.layers.Im2colLayer< T >, MyCaffe.layers.InfogainLossLayer< T >, MyCaffe.layers.InnerProductLayer< T >, MyCaffe.layers.InputLayer< T >, MyCaffe.layers.LabelMappingLayer< T >, MyCaffe.layers.LogLayer< T >, 
MyCaffe.layers.LRNLayer< T >, MyCaffe.layers.LSTMSimpleLayer< T >, MyCaffe.layers.LSTMUnitLayer< T >, MyCaffe.layers.MathLayer< T >, MyCaffe.layers.MemoryLossLayer< T >, MyCaffe.layers.MultinomialLogisticLossLayer< T >, MyCaffe.layers.MVNLayer< T >, MyCaffe.layers.ParameterLayer< T >, MyCaffe.layers.PoolingLayer< T >, MyCaffe.layers.PowerLayer< T >, MyCaffe.layers.PReLULayer< T >, MyCaffe.layers.RecurrentLayer< T >, MyCaffe.layers.ReductionLayer< T >, MyCaffe.layers.ReLULayer< T >, MyCaffe.layers.ReshapeLayer< T >, MyCaffe.layers.ScaleLayer< T >, MyCaffe.layers.SigmoidCrossEntropyLossLayer< T >, MyCaffe.layers.SigmoidLayer< T >, MyCaffe.layers.SilenceLayer< T >, MyCaffe.layers.SliceLayer< T >, MyCaffe.layers.SoftmaxCrossEntropy2LossLayer< T >, MyCaffe.layers.SoftmaxCrossEntropyLossLayer< T >, MyCaffe.layers.SoftmaxLayer< T >, MyCaffe.layers.SoftmaxLossLayer< T >, MyCaffe.layers.SplitLayer< T >, MyCaffe.layers.SPPLayer< T >, MyCaffe.layers.SwishLayer< T >, MyCaffe.layers.TanhLayer< T >, MyCaffe.layers.ThresholdLayer< T >, and MyCaffe.layers.TileLayer< T >.

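| protected void MyCaffe.layers.Layer< T >.CheckBlobCounts | ( | BlobCollection< T > | colBottom, |

| BlobCollection< T > | colTop | ||

| ) |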

Called by the Layer::Setup function to check that the number of bottom (input) and top (output) Blobs provided matches the number expected via the {ExactNum,Min,Max}{Bottom,Top}Blobs functions.

| colBottom | Specifies the collection of bottom (input) Blobs. |

| colTop | Specifies the collection of top (output) Blobs. |
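As a rough illustration of this kind of check, the sketch below validates a blob count against exact, minimum, and maximum limits, using -1 to mean "no requirement" as the ExactNum/Min/Max properties do. `BlobCountSketch.Check` is a hypothetical helper; the actual MyCaffe implementation reports failures through its Log and may differ in detail.

```csharp
using System;

// Hypothetical helper (not a MyCaffe API) mirroring the count check described above.
public static class BlobCountSketch
{
    // kind is "bottom" or "top"; a limit of -1 means that limit is not enforced.
    public static void Check(string kind, int count, int exact, int min, int max)
    {
        if (exact >= 0 && count != exact)
            throw new ArgumentException($"Layer takes exactly {exact} {kind} blob(s), but {count} were given.");

        if (min >= 0 && count < min)
            throw new ArgumentException($"Layer takes at least {min} {kind} blob(s), but {count} were given.");

        if (max >= 0 && count > max)
            throw new ArgumentException($"Layer takes at most {max} {kind} blob(s), but {count} were given.");
    }
}
```

For example, `BlobCountSketch.Check("bottom", 2, 2, -1, -1)` passes, while `BlobCountSketch.Check("top", 3, -1, 1, 2)` throws because three tops exceed the maximum of two.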

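| protected bool MyCaffe.layers.Layer< T >.compareShapes | ( | BlobCollection< T > | colBottom, |

| BlobCollection< T > | colTop | ||

| ) |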

Compare the shapes of the top and bottom and if the same, return true, otherwise false.

| colBottom | Specifies the bottom blobs. |

| colTop | Specifies the top blobs. |

| virtual void MyCaffe.layers.Layer< T >.ConnectLoss | ( | LossLayer< T > | layer | ) |

Called to connect the loss OnLoss event to a specified layer (typically the data layer).

When connected, the OnLoss event is called on each forward pass during the loss function.

| layer | Specifies the layer to connect the OnLoss event to. |

Reimplemented in MyCaffe.layers.tft.DataTemporalLayer< T >.

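| protected LayerParameter MyCaffe.layers.Layer< T >.convertLayerParam | ( | LayerParameter | pChild, |

| LayerParameter | pParent | ||

| ) |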

Called to convert a parent LayerParameterEx, used in blob sharing, with a child layer parameter.

| pChild | Specifies the child layer parameter. |

| pParent | Specifies the parent layer parameter. |

| void MyCaffe.layers.Layer< T >.ConvertToBase | ( | BlobCollection< T > | col | ) |

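| static Layer< T > MyCaffe.layers.Layer< T >.Create | ( | CudaDnn< T > | cuda, |

| Log | log, | ||

| LayerParameter | p, | ||

| CancelEvent | evtCancel, | ||

| IXDatabaseBase | db = null, | ||

| TransferInput | trxinput = null | ||

| ) |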

Create a new Layer based on the LayerParameter.

| cuda | Specifies the CudaDnn connection to Cuda. |

| log | Specifies the Log for output. |

| p | Specifies the LayerParameter that contains the LayerType to create. |

| evtCancel | Specifies the CancelEvent used by some Layers when created. |

| db | Optionally, specifies the in-memory MyCaffeDatabase used by data Layers. |

| trxinput | Optionally, specifies the transfer input object used by some of the data Layers. |

DEPRECATED - soon to be replaced by SOFTMAXCROSSENTROPY2_LOSS

| void MyCaffe.layers.Layer< T >.Dispose | ( | ) |

| protected virtual void MyCaffe.layers.Layer< T >.dispose | ( | ) |

Releases all GPU and host resources used by the Layer.

Reimplemented in MyCaffe.layers.beta.AccuracyDecodeLayer< T >, MyCaffe.layers.beta.AccuracyEncodingLayer< T >, MyCaffe.layers.AttentionLayer< T >, MyCaffe.layers.beta.ConvolutionOctaveLayer< T >, MyCaffe.layers.beta.DataSequenceLayer< T >, MyCaffe.layers.beta.DecodeLayer< T >, MyCaffe.layers.beta.GlobResNormLayer< T >, MyCaffe.layers.beta.KnnLayer< T >, MyCaffe.layers.LSTMAttentionLayer< T >, MyCaffe.layers.beta.MeanErrorLossLayer< T >, MyCaffe.layers.beta.MergeLayer< T >, MyCaffe.layers.beta.ModelDataLayer< T >, MyCaffe.layers.beta.Normalization1Layer< T >, MyCaffe.layers.beta.TextDataLayer< T >, MyCaffe.layers.beta.TransposeLayer< T >, MyCaffe.layers.beta.TripletLossLayer< T >, MyCaffe.layers.beta.UnPoolingLayer< T >, MyCaffe.layers.beta.UnPoolingLayer1< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer2< T >, MyCaffe.layers.gpt.LayerNormLayer< T >, MyCaffe.layers.gpt.MultiheadAttentionLayer< T >, MyCaffe.layers.gpt.NLLLossLayer< T >, MyCaffe.layers.gpt.PositionalEncodingLayer< T >, MyCaffe.layers.gpt.TokenizedDataLayer< T >, MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >, MyCaffe.layers.gpt.TransformerBlockLayer< T >, MyCaffe.layers.hdf5.HDF5DataLayer< T >, MyCaffe.layers.lnn.CfcLayer< T >, MyCaffe.layers.lnn.CfcUnitLayer< T >, MyCaffe.layers.lnn.LtcUnitLayer< T >, MyCaffe.layers.nt.OneHotLayer< T >, MyCaffe.layers.ssd.AnnotatedDataLayer< T >, MyCaffe.layers.ssd.DetectionEvaluateLayer< T >, MyCaffe.layers.ssd.DetectionOutputLayer< T >, MyCaffe.layers.ssd.MultiBoxLossLayer< T >, MyCaffe.layers.ssd.Normalization2Layer< T >, MyCaffe.layers.ssd.PermuteLayer< T >, MyCaffe.layers.ssd.PriorBoxLayer< T >, MyCaffe.layers.ssd.SmoothL1LossLayer< T >, MyCaffe.layers.ssd.VideoDataLayer< T >, MyCaffe.layers.tft.CategoricalTransformationLayer< T >, MyCaffe.layers.tft.ChannelEmbeddingLayer< T >, MyCaffe.layers.tft.DataTemporalLayer< T >, MyCaffe.layers.tft.GateAddNormLayer< T >, MyCaffe.layers.tft.GluLayer< T >, 
MyCaffe.layers.tft.GrnLayer< T >, MyCaffe.layers.tft.MultiHeadAttentionInterpLayer< T >, MyCaffe.layers.tft.NumericTransformationLayer< T >, MyCaffe.layers.tft.QuantileAccuracyLayer< T >, MyCaffe.layers.QuantileLossLayer< T >, MyCaffe.layers.tft.ReshapeTemporalLayer< T >, MyCaffe.layers.tft.VarSetNetLayer< T >, MyCaffe.layers.AccuracyLayer< T >, MyCaffe.layers.BaseConvolutionLayer< T >, MyCaffe.layers.BaseDataLayer< T >, MyCaffe.layers.BasePrefetchingDataLayer< T >, MyCaffe.layers.BatchNormLayer< T >, MyCaffe.layers.BatchReindexLayer< T >, MyCaffe.layers.BiasLayer< T >, MyCaffe.layers.ContrastiveLossLayer< T >, MyCaffe.layers.ConvolutionLayer< T >, MyCaffe.layers.CropLayer< T >, MyCaffe.layers.DataLayer< T >, MyCaffe.layers.DataNormalizerLayer< T >, MyCaffe.layers.DebugLayer< T >, MyCaffe.layers.DeconvolutionLayer< T >, MyCaffe.layers.DropoutLayer< T >, MyCaffe.layers.EltwiseLayer< T >, MyCaffe.layers.ELULayer< T >, MyCaffe.layers.EmbedLayer< T >, MyCaffe.layers.EuclideanLossLayer< T >, MyCaffe.layers.HingeLossLayer< T >, MyCaffe.layers.Im2colLayer< T >, MyCaffe.layers.ImageDataLayer< T >, MyCaffe.layers.InfogainLossLayer< T >, MyCaffe.layers.InnerProductLayer< T >, MyCaffe.layers.LRNLayer< T >, MyCaffe.layers.LSTMSimpleLayer< T >, MyCaffe.layers.LSTMUnitLayer< T >, MyCaffe.layers.MemoryDataLayer< T >, MyCaffe.layers.MemoryLossLayer< T >, MyCaffe.layers.MVNLayer< T >, MyCaffe.layers.PoolingLayer< T >, MyCaffe.layers.PReLULayer< T >, MyCaffe.layers.RecurrentLayer< T >, MyCaffe.layers.ReductionLayer< T >, MyCaffe.layers.ReLULayer< T >, MyCaffe.layers.ScaleLayer< T >, MyCaffe.layers.SigmoidCrossEntropyLossLayer< T >, MyCaffe.layers.SigmoidLayer< T >, MyCaffe.layers.SoftmaxCrossEntropy2LossLayer< T >, MyCaffe.layers.SoftmaxCrossEntropyLossLayer< T >, MyCaffe.layers.SoftmaxLayer< T >, MyCaffe.layers.SoftmaxLossLayer< T >, MyCaffe.layers.SPPLayer< T >, MyCaffe.layers.SwishLayer< T >, and MyCaffe.layers.TanhLayer< T >.

| double MyCaffe.layers.Layer< T >.Forward | ( | BlobCollection< T > | colBottom, |

| BlobCollection< T > | colTop | ||

| ) |

Given the bottom (input) Blobs, this function computes the top (output) Blobs and the loss.

The Forward function calls the overridden forward function implemented by each specific Layer derivative to compute the top (output) Blob values given the bottom (input) Blobs. If the layer has any non-zero loss_weights, this function then computes and returns the loss.

| colBottom | Specifies the collection of bottom (input) Blobs, whose data fields store the input data for this layer's outputs. |

| colTop | Specifies the collection of preshaped top (output) Blobs, whose data fields will store this layer's outputs. |
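One way to picture the loss returned by Forward is as a weighted sum over the top Blobs. The sketch below assumes that each top Blob's loss weights sit alongside its data (the SetLossWeights summary states that non-zero loss weights are stored in the diff Blob) and that the loss is the dot product of the two. `LossSketch.WeightedLoss` is a hypothetical helper operating on plain arrays; the actual MyCaffe computation runs on the GPU through CudaDnn and may differ.

```csharp
// Hypothetical helper (not a MyCaffe API): sums data[i] * weight[i] over every
// top blob that carries loss weights.
public static class LossSketch
{
    // topData[t]        : output values of top blob t.
    // topLossWeights[t] : loss weights for top blob t, or null when the blob
    //                     does not contribute to the objective.
    public static double WeightedLoss(float[][] topData, float[][] topLossWeights)
    {
        double dfLoss = 0;

        for (int t = 0; t < topData.Length; t++)
        {
            float[] rgWeights = topLossWeights[t];
            if (rgWeights == null)
                continue; // this top has no weight in the objective

            for (int i = 0; i < topData[t].Length; i++)
                dfLoss += (double)topData[t][i] * rgWeights[i];
        }

        return dfLoss;
    }
}
```

A top Blob without loss weights contributes nothing, which matches the description that the loss is computed only when the layer has a non-zero loss weight.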

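| protected abstract void MyCaffe.layers.Layer< T >.forward | ( | BlobCollection< T > | colBottom, |

| BlobCollection< T > | colTop | ||

| ) |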

This forward abstract function must be overridden by each derived Layer class to compute the top (output) Blobs for this layer.

| colBottom | Specifies the collection of bottom (input) Blobs, whose data fields store the input data for this layer's outputs. |

| colTop | Specifies the collection of preshaped top (output) Blobs, whose data fields will store this layer's outputs. |

Implemented in MyCaffe.layers.beta.AccuracyDecodeLayer< T >, MyCaffe.layers.beta.AccuracyEncodingLayer< T >, MyCaffe.layers.AttentionLayer< T >, MyCaffe.layers.beta.ConvolutionOctaveLayer< T >, MyCaffe.layers.CopyLayer< T >, MyCaffe.layers.beta.DataSequenceLayer< T >, MyCaffe.layers.beta.DecodeLayer< T >, MyCaffe.layers.beta.GatherLayer< T >, MyCaffe.layers.beta.GlobResNormLayer< T >, MyCaffe.layers.beta.InterpLayer< T >, MyCaffe.layers.beta.KnnLayer< T >, MyCaffe.layers.LSTMAttentionLayer< T >, MyCaffe.layers.beta.MeanErrorLossLayer< T >, MyCaffe.layers.beta.MergeLayer< T >, MyCaffe.layers.beta.MishLayer< T >, MyCaffe.layers.beta.ModelDataLayer< T >, MyCaffe.layers.beta.Normalization1Layer< T >, MyCaffe.layers.beta.SerfLayer< T >, MyCaffe.layers.beta.SqueezeLayer< T >, MyCaffe.layers.beta.TextDataLayer< T >, MyCaffe.layers.beta.TransposeLayer< T >, MyCaffe.layers.beta.TripletLossLayer< T >, MyCaffe.layers.beta.UnPoolingLayer< T >, MyCaffe.layers.beta.UnPoolingLayer1< T >, MyCaffe.layers.beta.UnsqueezeLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer2< T >, MyCaffe.layers.gpt.GeluLayer< T >, MyCaffe.layers.gpt.LayerNormLayer< T >, MyCaffe.layers.gpt.MultiheadAttentionLayer< T >, MyCaffe.layers.gpt.NLLLossLayer< T >, MyCaffe.layers.gpt.PositionalEncodingLayer< T >, MyCaffe.layers.gpt.TokenizedDataLayer< T >, MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >, MyCaffe.layers.gpt.TransformerBlockLayer< T >, MyCaffe.layers.hdf5.HDF5DataLayer< T >, MyCaffe.layers.lnn.CfcLayer< T >, MyCaffe.layers.lnn.CfcUnitLayer< T >, MyCaffe.layers.lnn.LeCunLayer< T >, MyCaffe.layers.lnn.LtcUnitLayer< T >, MyCaffe.layers.lnn.SiLULayer< T >, MyCaffe.layers.lnn.SoftPlusLayer< T >, MyCaffe.layers.nt.EventLayer< T >, MyCaffe.layers.nt.GramLayer< T >, MyCaffe.layers.nt.OneHotLayer< T >, MyCaffe.layers.nt.ScalarLayer< T >, MyCaffe.layers.nt.TVLossLayer< T >, MyCaffe.layers.ssd.DetectionEvaluateLayer< T >, 
MyCaffe.layers.ssd.DetectionOutputLayer< T >, MyCaffe.layers.ssd.MultiBoxLossLayer< T >, MyCaffe.layers.ssd.Normalization2Layer< T >, MyCaffe.layers.ssd.PermuteLayer< T >, MyCaffe.layers.ssd.PriorBoxLayer< T >, MyCaffe.layers.ssd.SmoothL1LossLayer< T >, MyCaffe.layers.tft.CategoricalTransformationLayer< T >, MyCaffe.layers.tft.ChannelEmbeddingLayer< T >, MyCaffe.layers.tft.DataTemporalLayer< T >, MyCaffe.layers.tft.GateAddNormLayer< T >, MyCaffe.layers.tft.GluLayer< T >, MyCaffe.layers.tft.GrnLayer< T >, MyCaffe.layers.tft.MultiHeadAttentionInterpLayer< T >, MyCaffe.layers.tft.NumericTransformationLayer< T >, MyCaffe.layers.tft.QuantileAccuracyLayer< T >, MyCaffe.layers.QuantileLossLayer< T >, MyCaffe.layers.tft.ReshapeTemporalLayer< T >, MyCaffe.layers.tft.VarSetNetLayer< T >, MyCaffe.layers.AbsValLayer< T >, MyCaffe.layers.AccuracyLayer< T >, MyCaffe.layers.ArgMaxLayer< T >, MyCaffe.layers.BasePrefetchingDataLayer< T >, MyCaffe.layers.BatchNormLayer< T >, MyCaffe.layers.BatchReindexLayer< T >, MyCaffe.layers.BiasLayer< T >, MyCaffe.layers.BNLLLayer< T >, MyCaffe.layers.ClipLayer< T >, MyCaffe.layers.ConcatLayer< T >, MyCaffe.layers.ConstantLayer< T >, MyCaffe.layers.ContrastiveLossLayer< T >, MyCaffe.layers.ConvolutionLayer< T >, MyCaffe.layers.CropLayer< T >, MyCaffe.layers.DataNormalizerLayer< T >, MyCaffe.layers.DebugLayer< T >, MyCaffe.layers.DeconvolutionLayer< T >, MyCaffe.layers.DropoutLayer< T >, MyCaffe.layers.DummyDataLayer< T >, MyCaffe.layers.EltwiseLayer< T >, MyCaffe.layers.ELULayer< T >, MyCaffe.layers.EmbedLayer< T >, MyCaffe.layers.EuclideanLossLayer< T >, MyCaffe.layers.ExpLayer< T >, MyCaffe.layers.FilterLayer< T >, MyCaffe.layers.FlattenLayer< T >, MyCaffe.layers.GradientScaleLayer< T >, MyCaffe.layers.HingeLossLayer< T >, MyCaffe.layers.Im2colLayer< T >, MyCaffe.layers.InfogainLossLayer< T >, MyCaffe.layers.InnerProductLayer< T >, MyCaffe.layers.InputLayer< T >, MyCaffe.layers.LabelMappingLayer< T >, MyCaffe.layers.LogLayer< T >, 
MyCaffe.layers.LRNLayer< T >, MyCaffe.layers.LSTMSimpleLayer< T >, MyCaffe.layers.LSTMUnitLayer< T >, MyCaffe.layers.MathLayer< T >, MyCaffe.layers.MemoryDataLayer< T >, MyCaffe.layers.MemoryLossLayer< T >, MyCaffe.layers.MultinomialLogisticLossLayer< T >, MyCaffe.layers.MVNLayer< T >, MyCaffe.layers.ParameterLayer< T >, MyCaffe.layers.PoolingLayer< T >, MyCaffe.layers.PowerLayer< T >, MyCaffe.layers.PReLULayer< T >, MyCaffe.layers.RecurrentLayer< T >, MyCaffe.layers.ReductionLayer< T >, MyCaffe.layers.ReLULayer< T >, MyCaffe.layers.ReshapeLayer< T >, MyCaffe.layers.ScaleLayer< T >, MyCaffe.layers.SigmoidCrossEntropyLossLayer< T >, MyCaffe.layers.SigmoidLayer< T >, MyCaffe.layers.SilenceLayer< T >, MyCaffe.layers.SliceLayer< T >, MyCaffe.layers.SoftmaxCrossEntropy2LossLayer< T >, MyCaffe.layers.SoftmaxCrossEntropyLossLayer< T >, MyCaffe.layers.SoftmaxLayer< T >, MyCaffe.layers.SoftmaxLossLayer< T >, MyCaffe.layers.SplitLayer< T >, MyCaffe.layers.SPPLayer< T >, MyCaffe.layers.SwishLayer< T >, MyCaffe.layers.TanhLayer< T >, MyCaffe.layers.ThresholdLayer< T >, and MyCaffe.layers.TileLayer< T >.

|

protected |

|

protected virtual |

Returns the WorkspaceArgs used to share a workspace between Layers.

Reimplemented in MyCaffe.layers.BaseConvolutionLayer< T >.

|

pure virtual |

Performs Layer specific setup. Derived layers should override this function as well as the Reshape function.

This method should perform one-time Layer specific setup. This may include reading and processing relevant parameters from the LayerParameter. Setting up the shapes of top (output) blobs and internal buffers should be done in the Reshape function, which will be called before the Forward pass to adjust the top (output) Blob sizes.

| colBottom | Specifies the collection of bottom (input) Blobs to this Layer. |

| colTop | Specifies the collection of allocated but unshaped top (output) Blobs. |

Implemented in MyCaffe.layers.beta.AccuracyDecodeLayer< T >, MyCaffe.layers.beta.AccuracyEncodingLayer< T >, MyCaffe.layers.AttentionLayer< T >, MyCaffe.layers.beta.ConvolutionOctaveLayer< T >, MyCaffe.layers.CopyLayer< T >, MyCaffe.layers.beta.DataSequenceLayer< T >, MyCaffe.layers.beta.DecodeLayer< T >, MyCaffe.layers.beta.GatherLayer< T >, MyCaffe.layers.beta.GlobResNormLayer< T >, MyCaffe.layers.beta.InterpLayer< T >, MyCaffe.layers.beta.KnnLayer< T >, MyCaffe.layers.LSTMAttentionLayer< T >, MyCaffe.layers.beta.MeanErrorLossLayer< T >, MyCaffe.layers.beta.MergeLayer< T >, MyCaffe.layers.beta.ModelDataLayer< T >, MyCaffe.layers.beta.Normalization1Layer< T >, MyCaffe.layers.beta.SqueezeLayer< T >, MyCaffe.layers.beta.TextDataLayer< T >, MyCaffe.layers.beta.TransposeLayer< T >, MyCaffe.layers.beta.TripletLossLayer< T >, MyCaffe.layers.beta.UnPoolingLayer< T >, MyCaffe.layers.beta.UnPoolingLayer1< T >, MyCaffe.layers.beta.UnsqueezeLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer2< T >, MyCaffe.layers.gpt.LayerNormLayer< T >, MyCaffe.layers.gpt.MultiheadAttentionLayer< T >, MyCaffe.layers.gpt.NLLLossLayer< T >, MyCaffe.layers.gpt.PositionalEncodingLayer< T >, MyCaffe.layers.gpt.TokenizedDataLayer< T >, MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >, MyCaffe.layers.gpt.TransformerBlockLayer< T >, MyCaffe.layers.hdf5.HDF5DataLayer< T >, MyCaffe.layers.lnn.CfcLayer< T >, MyCaffe.layers.lnn.CfcUnitLayer< T >, MyCaffe.layers.lnn.LtcUnitLayer< T >, MyCaffe.layers.nt.EventLayer< T >, MyCaffe.layers.nt.GramLayer< T >, MyCaffe.layers.nt.OneHotLayer< T >, MyCaffe.layers.nt.ScalarLayer< T >, MyCaffe.layers.nt.TVLossLayer< T >, MyCaffe.layers.ssd.DetectionEvaluateLayer< T >, MyCaffe.layers.ssd.DetectionOutputLayer< T >, MyCaffe.layers.ssd.MultiBoxLossLayer< T >, MyCaffe.layers.ssd.Normalization2Layer< T >, MyCaffe.layers.ssd.PermuteLayer< T >, MyCaffe.layers.ssd.PriorBoxLayer< T >, MyCaffe.layers.ssd.SmoothL1LossLayer< T 
>, MyCaffe.layers.tft.CategoricalTransformationLayer< T >, MyCaffe.layers.tft.ChannelEmbeddingLayer< T >, MyCaffe.layers.tft.DataTemporalLayer< T >, MyCaffe.layers.tft.GateAddNormLayer< T >, MyCaffe.layers.tft.GluLayer< T >, MyCaffe.layers.tft.GrnLayer< T >, MyCaffe.layers.tft.MultiHeadAttentionInterpLayer< T >, MyCaffe.layers.tft.NumericTransformationLayer< T >, MyCaffe.layers.tft.QuantileAccuracyLayer< T >, MyCaffe.layers.QuantileLossLayer< T >, MyCaffe.layers.tft.ReshapeTemporalLayer< T >, MyCaffe.layers.tft.VarSetNetLayer< T >, MyCaffe.layers.AccuracyLayer< T >, MyCaffe.layers.ArgMaxLayer< T >, MyCaffe.layers.BaseConvolutionLayer< T >, MyCaffe.layers.BaseDataLayer< T >, MyCaffe.layers.BasePrefetchingDataLayer< T >, MyCaffe.layers.BatchNormLayer< T >, MyCaffe.layers.BatchReindexLayer< T >, MyCaffe.layers.BiasLayer< T >, MyCaffe.layers.ConcatLayer< T >, MyCaffe.layers.ConstantLayer< T >, MyCaffe.layers.ContrastiveLossLayer< T >, MyCaffe.layers.ConvolutionLayer< T >, MyCaffe.layers.CropLayer< T >, MyCaffe.layers.DataNormalizerLayer< T >, MyCaffe.layers.DebugLayer< T >, MyCaffe.layers.DeconvolutionLayer< T >, MyCaffe.layers.DropoutLayer< T >, MyCaffe.layers.DummyDataLayer< T >, MyCaffe.layers.EltwiseLayer< T >, MyCaffe.layers.ELULayer< T >, MyCaffe.layers.EmbedLayer< T >, MyCaffe.layers.EuclideanLossLayer< T >, MyCaffe.layers.ExpLayer< T >, MyCaffe.layers.FilterLayer< T >, MyCaffe.layers.FlattenLayer< T >, MyCaffe.layers.GradientScaleLayer< T >, MyCaffe.layers.Im2colLayer< T >, MyCaffe.layers.InfogainLossLayer< T >, MyCaffe.layers.InnerProductLayer< T >, MyCaffe.layers.InputLayer< T >, MyCaffe.layers.LabelMappingLayer< T >, MyCaffe.layers.LogLayer< T >, MyCaffe.layers.LossLayer< T >, MyCaffe.layers.LRNLayer< T >, MyCaffe.layers.LSTMSimpleLayer< T >, MyCaffe.layers.LSTMUnitLayer< T >, MyCaffe.layers.MathLayer< T >, MyCaffe.layers.MemoryLossLayer< T >, MyCaffe.layers.MVNLayer< T >, MyCaffe.layers.NeuronLayer< T >, MyCaffe.layers.ParameterLayer< T >, 
MyCaffe.layers.PoolingLayer< T >, MyCaffe.layers.PowerLayer< T >, MyCaffe.layers.PReLULayer< T >, MyCaffe.layers.RecurrentLayer< T >, MyCaffe.layers.ReductionLayer< T >, MyCaffe.layers.ReLULayer< T >, MyCaffe.layers.ReshapeLayer< T >, MyCaffe.layers.ScaleLayer< T >, MyCaffe.layers.SigmoidCrossEntropyLossLayer< T >, MyCaffe.layers.SigmoidLayer< T >, MyCaffe.layers.SilenceLayer< T >, MyCaffe.layers.SliceLayer< T >, MyCaffe.layers.SoftmaxCrossEntropy2LossLayer< T >, MyCaffe.layers.SoftmaxCrossEntropyLossLayer< T >, MyCaffe.layers.SoftmaxLayer< T >, MyCaffe.layers.SoftmaxLossLayer< T >, MyCaffe.layers.SplitLayer< T >, MyCaffe.layers.SPPLayer< T >, MyCaffe.layers.SwishLayer< T >, MyCaffe.layers.TanhLayer< T >, MyCaffe.layers.ThresholdLayer< T >, and MyCaffe.layers.TileLayer< T >.
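
The split between one-time setup and per-pass shaping described above can be sketched as follows. This is a minimal illustration, not MyCaffe code: `SketchBlob`, `SketchScaleLayer`, and the dictionary standing in for a LayerParameter are hypothetical names, and only blob shapes are modeled.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a Blob: only the shape is modeled.
public class SketchBlob
{
    public List<int> Shape = new List<int>();
}

// Hypothetical layer that would scale its input by a fixed factor.
public class SketchScaleLayer
{
    // Stand-in for a LayerParameter (assumption: a simple name/value map).
    private readonly Dictionary<string, double> m_param;
    private double m_dfScale;

    public SketchScaleLayer(Dictionary<string, double> param)
    {
        m_param = param;
    }

    public double Scale
    {
        get { return m_dfScale; }
    }

    // One-time setup: read the parameters, but do not shape any blobs here.
    public void LayerSetup(List<SketchBlob> colBottom, List<SketchBlob> colTop)
    {
        if (!m_param.TryGetValue("scale", out m_dfScale))
            m_dfScale = 1.0; // default when the parameter is absent
    }

    // Called before a forward pass: size each top blob from its bottom blob.
    public void Reshape(List<SketchBlob> colBottom, List<SketchBlob> colTop)
    {
        for (int i = 0; i < colBottom.Count; i++)
            colTop[i].Shape = new List<int>(colBottom[i].Shape);
    }
}
```

In this sketch the top blobs stay unshaped after `LayerSetup` and only take on the bottom shapes once `Reshape` runs, so a change in input size needs no repeat of the parameter handling.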

| double MyCaffe.layers.Layer< T >.loss | ( | int | nTopIdx | ) |

| bool MyCaffe.layers.Layer< T >.param_propagate_down | ( | int | nParamIdx | ) |

|

virtual |

The PostProcessFullOutput allows derivative data layers to post-process the results, usually by detokenizing the data in the blobSoftmax.

| blobSoftmax | Specifies the data to be post processed. |

Reimplemented in MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >.

|

virtual |

The PostProcessLogitsOutput allows derivative data layers to post-process the results, converting them back into text results (e.g., detokenizing).

| nCurIdx | Specifies the current index being processed, or -1 for the last index. |

| blobLogits | Specifies the logits blob output by the last inner product layer of the network. |

| softmax | Specifies the softmax layer used to post process the logits. |

| nAxis | Specifies the axis of the softmax layer. |

| nK | Optionally, specifies the K top items to return (default = 1). |

Reimplemented in MyCaffe.layers.gpt.TokenizedDataLayer< T >, and MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >.

|

virtual |

The PostProcessOutput allows derivative data layers to post-process the results, converting them back into text results (e.g., detokenizing).

| blobSofmtax | Specifies the softmax blob output by the network. |

| nK | Optionally, specifies the K top items to return (default = 1). |

Reimplemented in MyCaffe.layers.beta.TextDataLayer< T >.
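
As a rough illustration of top-K post-processing, the sketch below picks the `nK` most probable entries from a plain array of softmax probabilities. It assumes, hypothetically, that each returned tuple holds (word, index, probability); `SketchPostProcess`, `rgProb`, and `rgVocab` are illustrative names and not part of MyCaffe.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SketchPostProcess
{
    // Returns the nK most probable entries as (word, index, probability) tuples.
    // Assumption: rgVocab[i] is the word for softmax index i.
    public static List<Tuple<string, int, double>> TopK(double[] rgProb, string[] rgVocab, int nK = 1)
    {
        return rgProb
            .Select((p, i) => Tuple.Create(rgVocab[i], i, p)) // pair each probability with its index and word
            .OrderByDescending(t => t.Item3)                  // highest probability first
            .Take(nK)
            .ToList();
    }
}
```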

|

virtual |

Convert the index to the word.

| nIdx | Specifies the index to convert. |

Reimplemented in MyCaffe.layers.beta.TextDataLayer< T >.

|

virtual |

The PreProcessInput allows derivative data layers to convert a property set of input data into the bottom blob collection used as input.

| customInput | Specifies the custom input data. |

| nSeqLen | Returns the sequence length. |

| colBottom | Optionally, specifies the bottom data to fill. |

The blobs returned should match the blob descriptions returned in the LayerParameter's overrides for 'PrepareRunModelInputs' and 'PrepareRunModel'.

Reimplemented in MyCaffe.layers.beta.TextDataLayer< T >, MyCaffe.layers.gpt.TokenizedDataLayer< T >, and MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >.

|

virtual |

Preprocess the input data for the RUN phase.

| strEncInput | Specifies the encoder input. |

| nDecInput | Specifies the decoder input. |

| colBottom | Specifies the bottom blobs where the preprocessed data is placed: colBottom[0] contains the preprocessed decoder input, colBottom[1] contains the preprocessed encoder input (depending on param settings), and colBottom[2] contains the preprocessed encoder input reversed (depending on param settings). |

NOTE: LayerSetup must be called before preprocessing input, because the vocabulary is loaded during LayerSetup.

Reimplemented in MyCaffe.layers.gpt.TokenizedDataLayer< T >, MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >, and MyCaffe.layers.beta.TextDataLayer< T >.

|

virtual |

Re-initialize the parameters of the layer.

| target | Specifies the weights to target (e.g. weights, bias or both). |

Reimplemented in MyCaffe.layers.AttentionLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer2< T >, MyCaffe.layers.gpt.MultiheadAttentionLayer< T >, MyCaffe.layers.gpt.TransformerBlockLayer< T >, MyCaffe.layers.lnn.CfcLayer< T >, MyCaffe.layers.lnn.CfcUnitLayer< T >, MyCaffe.layers.lnn.LtcUnitLayer< T >, MyCaffe.layers.BaseConvolutionLayer< T >, MyCaffe.layers.BatchNormLayer< T >, MyCaffe.layers.BiasLayer< T >, MyCaffe.layers.EmbedLayer< T >, MyCaffe.layers.InnerProductLayer< T >, MyCaffe.layers.LSTMSimpleLayer< T >, MyCaffe.layers.PReLULayer< T >, and MyCaffe.layers.ScaleLayer< T >.

|

virtual |

Reset the OnDebug event, disabling it.

| fn | Specifies the event function to call when the OnDebug event fires. |

Reimplemented in MyCaffe.layers.RecurrentLayer< T >.

|

pure virtual |

Adjust the shapes of top blobs and internal buffers to accommodate the shapes of the bottom blobs.

This method should reshape top blobs as needed according to the shapes of the bottom (input) Blobs, as well as reshaping any internal buffers and making any other necessary adjustments so that the layer can accommodate the bottom (input) Blobs.

| colBottom | Specifies the collection of bottom (input) Blobs, with requested input shapes. |

| colTop | Specifies the collection of top (output) Blobs, which should be reshaped as needed by the Layer. |

Implemented in MyCaffe.layers.beta.AccuracyDecodeLayer< T >, MyCaffe.layers.beta.AccuracyEncodingLayer< T >, MyCaffe.layers.AttentionLayer< T >, MyCaffe.layers.beta.ConvolutionOctaveLayer< T >, MyCaffe.layers.CopyLayer< T >, MyCaffe.layers.beta.DataSequenceLayer< T >, MyCaffe.layers.beta.DecodeLayer< T >, MyCaffe.layers.beta.GatherLayer< T >, MyCaffe.layers.beta.GlobResNormLayer< T >, MyCaffe.layers.beta.InterpLayer< T >, MyCaffe.layers.beta.KnnLayer< T >, MyCaffe.layers.LSTMAttentionLayer< T >, MyCaffe.layers.beta.MeanErrorLossLayer< T >, MyCaffe.layers.beta.MergeLayer< T >, MyCaffe.layers.beta.ModelDataLayer< T >, MyCaffe.layers.beta.Normalization1Layer< T >, MyCaffe.layers.beta.SqueezeLayer< T >, MyCaffe.layers.beta.TextDataLayer< T >, MyCaffe.layers.beta.TransposeLayer< T >, MyCaffe.layers.beta.TripletLossLayer< T >, MyCaffe.layers.beta.UnPoolingLayer< T >, MyCaffe.layers.beta.UnPoolingLayer1< T >, MyCaffe.layers.beta.UnsqueezeLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer2< T >, MyCaffe.layers.gpt.LayerNormLayer< T >, MyCaffe.layers.gpt.MultiheadAttentionLayer< T >, MyCaffe.layers.gpt.NLLLossLayer< T >, MyCaffe.layers.gpt.PositionalEncodingLayer< T >, MyCaffe.layers.gpt.TokenizedDataLayer< T >, MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >, MyCaffe.layers.gpt.TransformerBlockLayer< T >, MyCaffe.layers.hdf5.HDF5DataLayer< T >, MyCaffe.layers.lnn.CfcLayer< T >, MyCaffe.layers.lnn.CfcUnitLayer< T >, MyCaffe.layers.lnn.LtcUnitLayer< T >, MyCaffe.layers.nt.EventLayer< T >, MyCaffe.layers.nt.GramLayer< T >, MyCaffe.layers.nt.OneHotLayer< T >, MyCaffe.layers.nt.TVLossLayer< T >, MyCaffe.layers.ssd.DetectionEvaluateLayer< T >, MyCaffe.layers.ssd.DetectionOutputLayer< T >, MyCaffe.layers.ssd.MultiBoxLossLayer< T >, MyCaffe.layers.ssd.Normalization2Layer< T >, MyCaffe.layers.ssd.PermuteLayer< T >, MyCaffe.layers.ssd.PriorBoxLayer< T >, MyCaffe.layers.ssd.SmoothL1LossLayer< T >, 
MyCaffe.layers.tft.CategoricalTransformationLayer< T >, MyCaffe.layers.tft.ChannelEmbeddingLayer< T >, MyCaffe.layers.tft.DataTemporalLayer< T >, MyCaffe.layers.tft.GateAddNormLayer< T >, MyCaffe.layers.tft.GluLayer< T >, MyCaffe.layers.tft.GrnLayer< T >, MyCaffe.layers.tft.MultiHeadAttentionInterpLayer< T >, MyCaffe.layers.tft.NumericTransformationLayer< T >, MyCaffe.layers.tft.QuantileAccuracyLayer< T >, MyCaffe.layers.QuantileLossLayer< T >, MyCaffe.layers.tft.ReshapeTemporalLayer< T >, MyCaffe.layers.tft.VarSetNetLayer< T >, MyCaffe.layers.AccuracyLayer< T >, MyCaffe.layers.ArgMaxLayer< T >, MyCaffe.layers.BaseConvolutionLayer< T >, MyCaffe.layers.BaseDataLayer< T >, MyCaffe.layers.BatchNormLayer< T >, MyCaffe.layers.BatchReindexLayer< T >, MyCaffe.layers.BiasLayer< T >, MyCaffe.layers.ConcatLayer< T >, MyCaffe.layers.ConstantLayer< T >, MyCaffe.layers.ContrastiveLossLayer< T >, MyCaffe.layers.ConvolutionLayer< T >, MyCaffe.layers.CropLayer< T >, MyCaffe.layers.DataNormalizerLayer< T >, MyCaffe.layers.DebugLayer< T >, MyCaffe.layers.DeconvolutionLayer< T >, MyCaffe.layers.DropoutLayer< T >, MyCaffe.layers.DummyDataLayer< T >, MyCaffe.layers.EltwiseLayer< T >, MyCaffe.layers.ELULayer< T >, MyCaffe.layers.EmbedLayer< T >, MyCaffe.layers.EuclideanLossLayer< T >, MyCaffe.layers.FilterLayer< T >, MyCaffe.layers.FlattenLayer< T >, MyCaffe.layers.Im2colLayer< T >, MyCaffe.layers.InfogainLossLayer< T >, MyCaffe.layers.InnerProductLayer< T >, MyCaffe.layers.InputLayer< T >, MyCaffe.layers.LossLayer< T >, MyCaffe.layers.LRNLayer< T >, MyCaffe.layers.LSTMSimpleLayer< T >, MyCaffe.layers.LSTMUnitLayer< T >, MyCaffe.layers.MemoryDataLayer< T >, MyCaffe.layers.MemoryLossLayer< T >, MyCaffe.layers.MultinomialLogisticLossLayer< T >, MyCaffe.layers.MVNLayer< T >, MyCaffe.layers.NeuronLayer< T >, MyCaffe.layers.ParameterLayer< T >, MyCaffe.layers.PoolingLayer< T >, MyCaffe.layers.PReLULayer< T >, MyCaffe.layers.RecurrentLayer< T >, MyCaffe.layers.ReductionLayer< T >, 
MyCaffe.layers.ReLULayer< T >, MyCaffe.layers.ReshapeLayer< T >, MyCaffe.layers.ScaleLayer< T >, MyCaffe.layers.SigmoidCrossEntropyLossLayer< T >, MyCaffe.layers.SigmoidLayer< T >, MyCaffe.layers.SilenceLayer< T >, MyCaffe.layers.SliceLayer< T >, MyCaffe.layers.SoftmaxCrossEntropy2LossLayer< T >, MyCaffe.layers.SoftmaxCrossEntropyLossLayer< T >, MyCaffe.layers.SoftmaxLayer< T >, MyCaffe.layers.SoftmaxLossLayer< T >, MyCaffe.layers.SplitLayer< T >, MyCaffe.layers.SPPLayer< T >, MyCaffe.layers.SwishLayer< T >, MyCaffe.layers.TanhLayer< T >, and MyCaffe.layers.TileLayer< T >.

|

protected virtual |

Tests the shapes of both the bottom and top blobs and if they are the same as the previous sizing, returns false indicating that no reshape is needed.

| colBottom | Specifies the bottom blobs. |

| colTop | Specifies the top blobs. |

| bReset | Specifies to reset the test (set to false when using in second derivative classes, e.g. set to true in BaseConvolutionLayer, and false in ConvolutionLayer). |

Reimplemented in MyCaffe.layers.tft.MultiHeadAttentionInterpLayer< T >, and MyCaffe.layers.ConvolutionLayer< T >.
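
The shape test described above can be sketched by caching the last seen shapes and comparing against them. This is an illustration under assumptions: shapes are modeled as `int[]`, `SketchReshapeTracker` is a hypothetical name, and the `bReset` behavior is left out.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class SketchReshapeTracker
{
    private List<int[]> m_rgLastBottom; // shapes seen on the previous call
    private List<int[]> m_rgLastTop;

    // Returns false when both bottom and top shapes match the previous call.
    public bool ReshapeNeeded(List<int[]> colBottom, List<int[]> colTop)
    {
        if (Same(m_rgLastBottom, colBottom) && Same(m_rgLastTop, colTop))
            return false;

        // Remember copies of the shapes for the next comparison.
        m_rgLastBottom = Copy(colBottom);
        m_rgLastTop = Copy(colTop);
        return true;
    }

    private static List<int[]> Copy(List<int[]> col)
    {
        return col.Select(s => (int[])s.Clone()).ToList();
    }

    private static bool Same(List<int[]> a, List<int[]> b)
    {
        if (a == null || a.Count != b.Count)
            return false;

        for (int i = 0; i < a.Count; i++)
        {
            if (!a[i].SequenceEqual(b[i]))
                return false;
        }

        return true;
    }
}
```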

| void MyCaffe.layers.Layer< T >.set_loss | ( | int | nTopIdx, |

| double | dfLoss | ||

| ) |

| void MyCaffe.layers.Layer< T >.set_param_propagate_down | ( | int | nParamIdx, |

| bool | bPropagate | ||

| ) |

| void MyCaffe.layers.Layer< T >.SetEnablePassthrough | ( | bool | bEnable | ) |

|

protected |

Called by Layer::Setup to initialize the weights associated with any top (output) Blobs in the loss function and store non-zero loss weights in the diff Blob.

| colTop | Specifies the collection of top (output) Blobs. |

|

virtual |

This function allows other layers to gather needed information from the NetParameter, if any, and is called when initializing the Net.

| np | Specifies the NetParameter. |

Reimplemented in MyCaffe.layers.LabelMappingLayer< T >.

| void MyCaffe.layers.Layer< T >.SetNetReshapeRequest | ( | ) |

|

virtual |

Set the OnDebug event.

| fn | Specifies the event function to call when the OnDebug event fires. |

Reimplemented in MyCaffe.layers.RecurrentLayer< T >.

| void MyCaffe.layers.Layer< T >.SetPhase | ( | Phase | phase | ) |

|

protected |

| void MyCaffe.layers.Layer< T >.Setup | ( | BlobCollection< T > | colBottom, |

| BlobCollection< T > | colTop | ||

| ) |

Implements common Layer setup functionality.

Checks that the number of bottom and top blobs is correct. Calls LayerSetup to do Layer specific setup for each layer type, followed by Reshape to set up the sizes of the top Blobs and internal buffers. Sets up the loss weight multiplier blobs for any non-zero loss weights.

| colBottom | Specifies the collection of preshaped bottom (input) Blobs. |

| colTop | Specifies the collection of allocated but unshaped top (output) Blobs. |
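
The order of steps listed for Setup can be sketched as a template method. The classes below are hypothetical stand-ins (`SketchLayer`, `SketchIdentityLayer`), blobs are reduced to `int[]` shapes, and the loss weights are kept in a plain dictionary instead of a diff Blob.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical base class showing only the order of the Setup steps.
public abstract class SketchLayer
{
    public readonly List<string> Calls = new List<string>();
    public readonly Dictionary<int, double> Loss = new Dictionary<int, double>();
    private readonly double[] m_rgLossWeights;

    protected SketchLayer(double[] rgLossWeights)
    {
        m_rgLossWeights = rgLossWeights;
    }

    protected abstract int ExactNumBottomBlobs { get; }
    protected abstract int ExactNumTopBlobs { get; }
    protected abstract void LayerSetup(List<int[]> colBottom, List<int[]> colTop);
    protected abstract void Reshape(List<int[]> colBottom, List<int[]> colTop);

    public void Setup(List<int[]> colBottom, List<int[]> colTop)
    {
        // 1. Check the number of bottom and top blobs.
        if (colBottom.Count != ExactNumBottomBlobs || colTop.Count != ExactNumTopBlobs)
            throw new ArgumentException("Unexpected number of bottom or top blobs.");
        Calls.Add("CheckBlobCounts");

        // 2. Layer specific one-time setup.
        LayerSetup(colBottom, colTop);
        Calls.Add("LayerSetup");

        // 3. Size the top blobs and internal buffers.
        Reshape(colBottom, colTop);
        Calls.Add("Reshape");

        // 4. Record only the non-zero loss weights.
        for (int i = 0; i < colTop.Count && i < m_rgLossWeights.Length; i++)
        {
            if (m_rgLossWeights[i] != 0)
                Loss[i] = m_rgLossWeights[i];
        }
        Calls.Add("SetLossWeights");
    }
}

// Hypothetical derived layer with one bottom and one top of the same shape.
public class SketchIdentityLayer : SketchLayer
{
    public SketchIdentityLayer(double[] rgLossWeights) : base(rgLossWeights) { }

    protected override int ExactNumBottomBlobs { get { return 1; } }
    protected override int ExactNumTopBlobs { get { return 1; } }

    protected override void LayerSetup(List<int[]> colBottom, List<int[]> colTop)
    {
        // Nothing to read in this sketch.
    }

    protected override void Reshape(List<int[]> colBottom, List<int[]> colTop)
    {
        colTop[0] = (int[])colBottom[0].Clone();
    }
}
```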

|

protected virtual |

Derivative layers should add all internal blobs to the 'col' provided.

| col | Specifies the blob collection where internal blobs are added. |

Reimplemented in MyCaffe.layers.beta.AccuracyEncodingLayer< T >, MyCaffe.layers.AttentionLayer< T >, MyCaffe.layers.beta.DecodeLayer< T >, MyCaffe.layers.beta.GlobResNormLayer< T >, MyCaffe.layers.beta.KnnLayer< T >, MyCaffe.layers.LSTMAttentionLayer< T >, MyCaffe.layers.beta.Normalization1Layer< T >, MyCaffe.layers.beta.TransposeLayer< T >, MyCaffe.layers.beta.TripletLossLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer< T >, MyCaffe.layers.gpt.CausalSelfAttentionLayer2< T >, MyCaffe.layers.gpt.LayerNormLayer< T >, MyCaffe.layers.gpt.MultiheadAttentionLayer< T >, MyCaffe.layers.gpt.NLLLossLayer< T >, MyCaffe.layers.gpt.PositionalEncodingLayer< T >, MyCaffe.layers.gpt.TokenizedDataPairsLayer< T >, MyCaffe.layers.gpt.TransformerBlockLayer< T >, MyCaffe.layers.lnn.CfcLayer< T >, MyCaffe.layers.lnn.CfcUnitLayer< T >, MyCaffe.layers.lnn.LtcUnitLayer< T >, MyCaffe.layers.ssd.DetectionOutputLayer< T >, MyCaffe.layers.ssd.MultiBoxLossLayer< T >, MyCaffe.layers.ssd.Normalization2Layer< T >, MyCaffe.layers.ssd.PermuteLayer< T >, MyCaffe.layers.ssd.SmoothL1LossLayer< T >, MyCaffe.layers.tft.CategoricalTransformationLayer< T >, MyCaffe.layers.tft.ChannelEmbeddingLayer< T >, MyCaffe.layers.tft.DataTemporalLayer< T >, MyCaffe.layers.tft.GateAddNormLayer< T >, MyCaffe.layers.tft.GluLayer< T >, MyCaffe.layers.tft.GrnLayer< T >, MyCaffe.layers.tft.MultiHeadAttentionInterpLayer< T >, MyCaffe.layers.tft.NumericTransformationLayer< T >, MyCaffe.layers.tft.QuantileAccuracyLayer< T >, MyCaffe.layers.tft.ReshapeTemporalLayer< T >, MyCaffe.layers.tft.VarSetNetLayer< T >, MyCaffe.layers.BaseConvolutionLayer< T >, MyCaffe.layers.BatchNormLayer< T >, MyCaffe.layers.BatchReindexLayer< T >, MyCaffe.layers.BiasLayer< T >, MyCaffe.layers.CropLayer< T >, MyCaffe.layers.DropoutLayer< T >, MyCaffe.layers.EltwiseLayer< T >, MyCaffe.layers.EmbedLayer< T >, MyCaffe.layers.InfogainLossLayer< T >, MyCaffe.layers.InnerProductLayer< T >, MyCaffe.layers.LRNLayer< T >, MyCaffe.layers.LSTMSimpleLayer< 
T >, MyCaffe.layers.LSTMUnitLayer< T >, MyCaffe.layers.MVNLayer< T >, MyCaffe.layers.PoolingLayer< T >, MyCaffe.layers.PReLULayer< T >, MyCaffe.layers.RecurrentLayer< T >, MyCaffe.layers.ReductionLayer< T >, MyCaffe.layers.ScaleLayer< T >, MyCaffe.layers.SoftmaxCrossEntropy2LossLayer< T >, MyCaffe.layers.SoftmaxLayer< T >, and MyCaffe.layers.SoftmaxLossLayer< T >.

|

protected virtual |

Sets the workspace size (in items) and returns true if set, false otherwise.

| lSizeInBytes | Specifies the size of the workspace data in bytes. |

Reimplemented in MyCaffe.layers.BaseConvolutionLayer< T >.

|

protected |

Attempts to share a Layer Blob if another parameter Blob with the same name and acceptable size is found.

| b | Specifies the Blob to share. |

| rgMinShape | Specifies the minimum shape required to share. |

|

protected |

Attempts to share the Layer blobs and internal_blobs with matching names and sizes with those in another matching layer.

| layer | Specifies the layer that will use the shared blobs and internal blobs from the shared layer. |

|

protected |

Attempts to share a parameter Blob if another parameter Blob with the same name and acceptable size is found.

| b | Specifies the Blob to share. |

| rgMinShape | Specifies the minimum shape required to share. |

| bAllowEndsWithComparison | Optionally, allow name comparison where end of blob 'b' name is compared with the share blob names (default = false). |
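
One way to read the matching rule above is sketched below. Two interpretations are assumptions made for illustration only: an "acceptable size" means the same number of axes with every dimension at least the minimum, and the ends-with comparison means the name of blob 'b' ends with the candidate's name. `SketchNamedBlob` and `SketchSharing` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a named parameter Blob.
public class SketchNamedBlob
{
    public string Name;
    public int[] Shape;
}

public static class SketchSharing
{
    // Returns the first candidate that matches by name and minimum shape, or null.
    public static SketchNamedBlob FindShare(SketchNamedBlob b, int[] rgMinShape,
        List<SketchNamedBlob> rgCandidates, bool bAllowEndsWithComparison = false)
    {
        foreach (SketchNamedBlob c in rgCandidates)
        {
            bool bNameMatch = c.Name == b.Name ||
                (bAllowEndsWithComparison && b.Name.EndsWith(c.Name, StringComparison.Ordinal));

            if (bNameMatch && MeetsMinShape(c.Shape, rgMinShape))
                return c;
        }

        return null;
    }

    // Assumption: same axis count and each dimension at least the minimum.
    private static bool MeetsMinShape(int[] rgShape, int[] rgMinShape)
    {
        if (rgShape.Length != rgMinShape.Length)
            return false;

        for (int i = 0; i < rgShape.Length; i++)
        {
            if (rgShape[i] < rgMinShape[i])
                return false;
        }

        return true;
    }
}
```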

|

get |

Return whether "anonymous" top (output) Blobs are created automatically by the Layer.

If this method returns true, Net::Init will create enough "anonymous" top Blobs to fulfill the requirement specified by ExactNumTopBlobs() or MinTopBlobs().
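
The behavior described above can be sketched as a small helper that pads a layer's top list. This is an illustration, not the Net implementation: `SketchNetInit` is hypothetical, the placeholder name "(automatic)" is an assumption, and a value of -1 is assumed to mean "no requirement".

```csharp
using System;
using System.Collections.Generic;

public static class SketchNetInit
{
    // Pads the list of top blob names with anonymous entries when the layer allows it.
    // Assumption: -1 for nExactNumTopBlobs or nMinTopBlobs means no requirement.
    public static List<string> FillTops(List<string> rgTop, bool bAutoTopBlobs,
        int nExactNumTopBlobs, int nMinTopBlobs)
    {
        var rgResult = new List<string>(rgTop);

        if (!bAutoTopBlobs)
            return rgResult;

        // The larger of the two requirements decides how many tops are needed.
        int nNeeded = Math.Max(nExactNumTopBlobs, nMinTopBlobs);

        while (rgResult.Count < nNeeded)
            rgResult.Add("(automatic)"); // placeholder name for an anonymous top

        return rgResult;
    }
}
```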

| EventHandler<GetWorkBlobArgs<T> > MyCaffe.layers.Layer< T >.OnDebug |

Specifies the OnGetWorkBlob event that is only supported when debugging to get a work blob from the primary Net holding this layer.

When implemented, this event causes a nan/inf check at the end of each forward and backward pass and is only recommended for use during debugging.

| EventHandler<GetIterationArgs> MyCaffe.layers.Layer< T >.OnGetIteration |

| EventHandler<WorkspaceArgs> MyCaffe.layers.Layer< T >.OnGetWorkspace |

Specifies the OnGetWorkspace event that fires when the getWorkspace() function is called by a layer to get a shareable workspace to conserve GPU memory.

| EventHandler<WorkspaceArgs> MyCaffe.layers.Layer< T >.OnSetWorkspace |

Specifies the OnSetWorkspace event that fires when the setWorkspace() function is called by a layer to get a shareable workspace to conserve GPU memory.