In our latest release, version 0.10.0.190, we have added Neural Style Transfer, as described by Gatys et al. [1], using the VGG model [2].

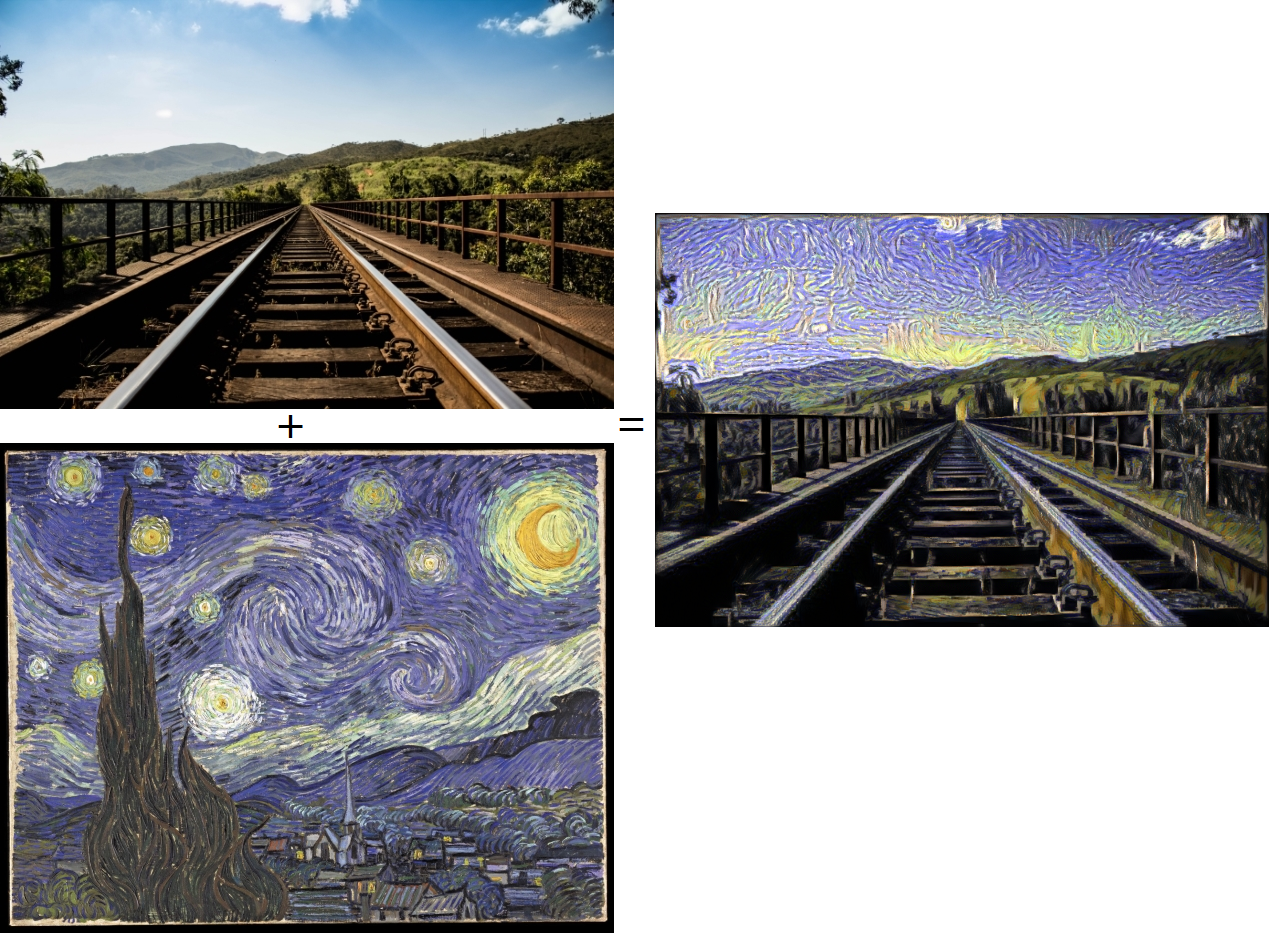

With Neural Style Transfer, the style of one photo (such as Vincent Van Gogh’s Starry Night) is learned by the AI model and applied to a content photo (such as the photo of train tracks) to produce a new art piece!

For example, selecting Edvard Munch’s The Scream as a style tells the application to learn the style of his painting and apply it to the content photo to produce a new and different art piece from the one created with Van Gogh’s style.

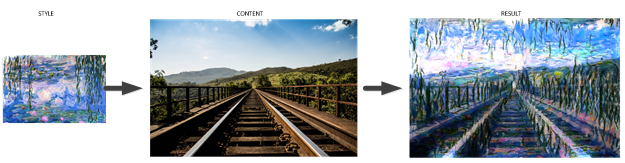

In another example, using Claude Monet’s Water Lilies 1916 as the style creates another new art piece.

How Does This Work?

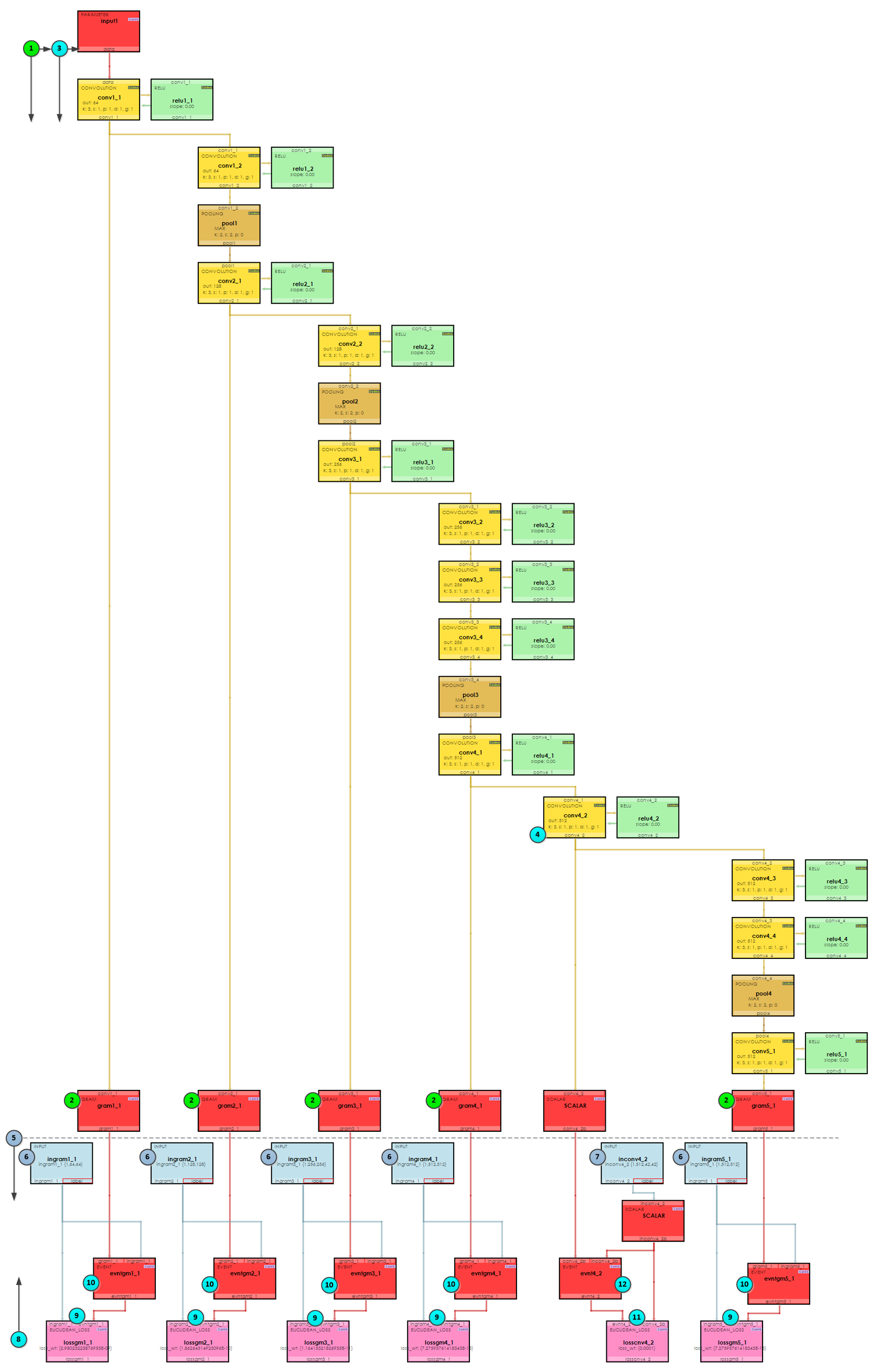

As shown above, the neural style algorithm learns the style and applies it to the content, but how does this work? When running a neural style transfer, the model used (e.g. VGG19) is augmented with a few new layers that allow the style to be learned, and both the style and content losses are then back-propagated to create the final image. As described by Li et al. [3], the Gram matrix is the main workhorse used to learn the style: matching Gram matrices, in theory, “is equivalent to minimizing the Maximum Mean Discrepancy”, which shows that neural style transfer is “a process of distribution alignment of the neural activations between images.” The Gram matrix is implemented by the GRAM layer. Two additional layers play important roles in the process. The EVENT layer is used to apply only the gradients that matter (i.e. zeroing out the others), and the PARAMETER layer holds the learned values that produce the end result: a neural ‘stylized’ image.
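To make the Gram matrix a little more concrete, here is a minimal sketch in plain Python. It is not the GRAM layer's actual implementation: the function names and the toy numbers are made up for illustration, and the real layer may scale its output differently. It assumes a feature map with C channels, each flattened to N activations, and compares the Gram distance with the Maximum Mean Discrepancy under the second-order polynomial kernel discussed by Li et al. [3].

```python
def gram_matrix(features):
    """Gram matrix (C x C) of a feature map given as C channels of N activations each."""
    return [
        [sum(a * b for a, b in zip(ch_i, ch_j)) for ch_j in features]
        for ch_i in features
    ]


def squared_distance(m1, m2):
    """Squared Frobenius distance between two equally sized matrices."""
    return sum((a - b) ** 2 for r1, r2 in zip(m1, m2) for a, b in zip(r1, r2))


def poly_kernel(x, y):
    """Second-order polynomial kernel k(x, y) = (x . y)^2."""
    return sum(a * b for a, b in zip(x, y)) ** 2


def mmd_squared(xs, ys):
    """Biased estimate of the squared MMD between two equally sized sample sets."""
    n = len(xs)
    total = sum(
        poly_kernel(xs[i], xs[j]) + poly_kernel(ys[i], ys[j]) - 2 * poly_kernel(xs[i], ys[j])
        for i in range(n)
        for j in range(n)
    )
    return total / (n * n)


# Toy feature maps: 2 channels, 3 spatial positions each (made-up numbers).
style_features = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
other_features = [[1.0, 0.0, 1.0], [2.0, 1.0, 0.0]]

style_gram = gram_matrix(style_features)
print(style_gram)  # [[14.0, 2.0], [2.0, 1.0]]

# Moving activations to different positions (the same move in every channel)
# leaves the Gram matrix unchanged: it records which channels fire together,
# not where they fire.
shuffled = [[3.0, 1.0, 2.0], [0.0, 0.0, 1.0]]
print(gram_matrix(shuffled) == style_gram)  # True

# Treat each spatial position as one sample (a vector with one entry per channel).
style_samples = [list(col) for col in zip(*style_features)]
other_samples = [list(col) for col in zip(*other_features)]

n = len(style_samples)
gram_distance = squared_distance(style_gram, gram_matrix(other_features))
mmd_distance = n * n * mmd_squared(style_samples, other_samples)
print(gram_distance, mmd_distance)  # the two values agree up to rounding
```

Because the Gram matrix ignores where activations occur, matching it transfers texture and color statistics (the style) without copying the layout of the style image.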

To show how this works in more detail, the visualization below shows the actual augmented model (augmented from VGG19 and originally inspired by [4]) used for the neural style transfer. In this example, the layers used to learn the style are conv1_1, conv2_1, conv3_1, conv4_1 and conv5_1, and the layer used to learn the content is conv4_2. You can use whatever layers you want to create different output effects, but these are the ones used in this discussion.

During the neural style transfer process, the following steps take place.

1. First, the style image is loaded into the data blob and a forward pass is performed on the augmented VGG19 model with the GRAM layers already added.

2. Next, the GRAM layer output blobs gram1_1, gram2_1, gram3_1, gram4_1 and gram5_1 are saved.

3. The content image is then loaded into the data blob and another forward pass is performed on the augmented model.

4. This time, the output blob of the conv4_2 CONVOLUTION layer is saved.

5. The model is further augmented by adding INPUT, EVENT and EUCLIDEAN_LOSS layers to match each GRAM layer. A SCALAR layer is added to the content layer to help with data scaling, and weight learning is disabled on the CONVOLUTION layers.

6. Next, the style output blobs saved in step 2 are restored into the solver model that was augmented in step 5.

7. The content output blob saved in step 4 is restored into the augmented solver model as well.

8. The solver is then directed to solve the model, which begins the forward/backward iterations that perform the neural style transfer.

9. On each forward pass, the Euclidean loss is calculated between each style INPUT layer and its GRAM layer (the EVENT layer acts as a passthrough on the forward pass).

10. During the backward pass, the gradient of the loss calculated in step 9 is passed back through the EVENT layer, which then applies only the gradients that have actually changed and zeros out the rest.

11. The same process occurs with the content layer, but in this case the Euclidean loss is calculated between the content INPUT and the conv4_2 CONVOLUTION layer output.

12. Again, the gradient of the loss calculated in step 11 is passed back through an EVENT layer, which then applies only the gradients that have actually changed and zeros out the rest.

Back-propagation continues up the network and deposits the final changes into the PARAMETER layer input1. After numerous iterations (sometimes only around 200), the style of the style image paints the content provided by the content image to produce a new piece of art!
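The overall loop can be sketched in a few lines of plain Python. This is a toy illustration under strong assumptions, not the MyCaffe solver: the "network" is the identity (the image itself stands in for the frozen CONVOLUTION features), there is one style term and one content term instead of five style layers, the Gram matrix is divided by N to keep the numbers small, and every name, weight and learning rate below is hypothetical. What it does show is the key idea of the process: the network weights never change, and only the image is updated from the gradients of the two Euclidean losses.

```python
def normalized_gram(features):
    """Gram matrix of a C x N feature map, divided by N (an assumed scaling)."""
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(ch_i, ch_j)) / n for ch_j in features]
        for ch_i in features
    ]


def loss_and_gradient(image, content_target, style_gram,
                      style_weight=1.0, content_weight=1.0):
    """Return the combined Euclidean loss and its gradient w.r.t. the image."""
    c, n = len(image), len(image[0])
    gram = normalized_gram(image)
    # Differences fed to the two Euclidean losses.
    d_style = [[gram[i][j] - style_gram[i][j] for j in range(c)] for i in range(c)]
    d_content = [[image[i][k] - content_target[i][k] for k in range(n)] for i in range(c)]
    style_loss = 0.5 * sum(v * v for row in d_style for v in row)
    content_loss = 0.5 * sum(v * v for row in d_content for v in row)
    loss = style_weight * style_loss + content_weight * content_loss
    # d(style_loss)/d(image) = (2 / N) * d_style @ image, since d_style is symmetric.
    grad = [
        [
            style_weight * (2.0 / n) * sum(d_style[i][j] * image[j][k] for j in range(c))
            + content_weight * d_content[i][k]
            for k in range(n)
        ]
        for i in range(c)
    ]
    return loss, grad


# Made-up 2-channel, 4-pixel "images".
content = [[0.9, 0.1, 0.8, 0.2], [0.2, 0.7, 0.1, 0.6]]
style = [[0.5, 0.5, 0.4, 0.6], [0.9, 0.8, 0.9, 0.7]]

style_gram = normalized_gram(style)           # saved style target
content_target = [row[:] for row in content]  # saved content target
image = [row[:] for row in content]           # the only values that are learned

learning_rate = 0.1  # hypothetical value chosen for this toy problem
losses = []
for _ in range(200):
    loss, grad = loss_and_gradient(image, content_target, style_gram)
    losses.append(loss)
    # Gradient descent step applied to the image only.
    image = [
        [image[i][k] - learning_rate * grad[i][k] for k in range(len(image[0]))]
        for i in range(len(image))
    ]

print(losses[0], losses[-1])  # the loss drops as the image takes on the style statistics
```

In this sketch the two weights trade off how strongly the result follows the style statistics versus the original content.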

New Features

The following new features have been added to this release.

- Added new Neural Style Transfer Evaluator.

- Added Database Optimizer.

Bug Fixes

The following bugs have been fixed in this release.

- Fixed bugs in the Import Project dialog, including slow processing.

To try out this model and train it yourself, just check out our Tutorials for easy step-by-step instructions that will get you started quickly! For cool example videos, including an ATARI Pong video and Cart-Pole balancing video, check out our Examples page.

[1] Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge, A Neural Algorithm of Artistic Style, 2015, arXiv:1508.06576.

[2] Karen Simonyan and Andrew Zisserman, Very Deep Convolutional Networks for Large-Scale Image Recognition, 2014, arXiv:1409.1556.

[3] Yanghao Li, Naiyan Wang, Jiaying Liu and Xiaodi Hou, Demystifying Neural Style Transfer, 2017, arXiv:1701.01036.

[4] ftokarev, GitHub:ftokarev/caffe-neural-style, 2017, GitHub.

The ‘Train Bridge’ photo is provided by www.pexels.com under the CC0 License.

The paintings Starry Night by Vincent Van Gogh, The Scream by Edvard Munch and Water Lilies 1916 by Claude Monet are all provided as public domain by commons.wikimedia.org.