minGPT, created by Andrej Karpathy, is a small, simplified PyTorch re-implementation of OpenAI's GPT model, the architecture behind the open-source GPT-2 project.

GPT has proven very useful for solving many Natural Language Processing (NLP) problems. As Karpathy and others have shown, it can also be applied to tasks outside the NLP domain, such as image generation and classification.

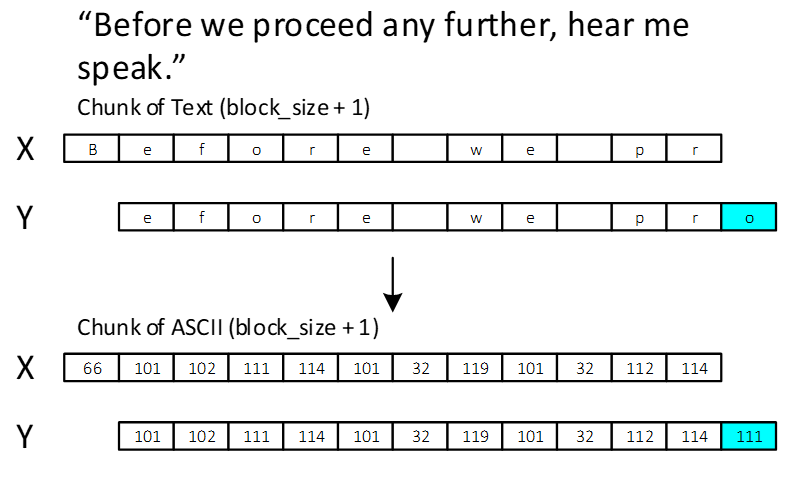

One of Karpathy's samples uses minGPT to learn to model and generate Shakespeare-style text, in the spirit of char-rnn. Random chunks of text are extracted from the input, each character is converted into an integer index, and the resulting sequences are fed into the model.
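To make the chunking step concrete, here is a minimal sketch in plain Python. The helper names `build_vocab` and `sample_chunk` are hypothetical, not minGPT's API. It assumes the vocabulary is simply the set of distinct characters in the text, and that the training target for each position is the next character, so the targets are the inputs shifted left by one.

```python
import random

def build_vocab(text):
    # Map each distinct character to an integer index, and back again.
    chars = sorted(set(text))
    stoi = {ch: i for i, ch in enumerate(chars)}
    itos = {i: ch for ch, i in stoi.items()}
    return stoi, itos

def sample_chunk(text, stoi, block_size, rng=random):
    # Pick a random window of block_size + 1 characters (requires len(text) > block_size).
    start = rng.randrange(len(text) - block_size)
    chunk = text[start:start + block_size + 1]
    ids = [stoi[ch] for ch in chunk]
    # Inputs are the first block_size ids; targets are the same ids shifted left by one.
    return ids[:-1], ids[1:]

text = "To be, or not to be, that is the question."
stoi, itos = build_vocab(text)
x, y = sample_chunk(text, stoi, block_size=8, rng=random.Random(0))
print(x, y)
```

Each call yields one training example; a batch is just several such pairs stacked together.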

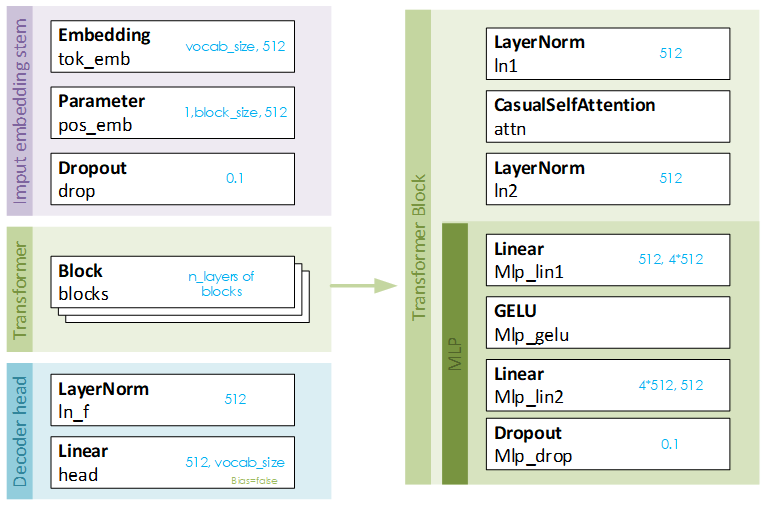

Batches of randomly selected input chunks are fed into the minGPT model. Each input is first converted into an embedding (a token embedding plus a learned position embedding), the embeddings then pass through a stack of 8 Transformer Blocks, and the result is decoded by the decoder head.
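The two ends of this pipeline can be sketched in PyTorch as follows. This is an illustrative skeleton, not minGPT's own class: `GPTSkeleton` is a hypothetical name, the Transformer Blocks are passed in from outside (identity layers stand in for them here so the shapes can be checked), the final LayerNorm before the head is included as in GPT-style models, and dropout is omitted. The vocabulary size of 65 is just an example value.

```python
import torch
import torch.nn as nn

class GPTSkeleton(nn.Module):
    """Embedding -> transformer blocks -> final LayerNorm -> decoder head."""

    def __init__(self, vocab_size, block_size, n_embd, blocks):
        super().__init__()
        self.tok_emb = nn.Embedding(vocab_size, n_embd)                  # one vector per token id
        self.pos_emb = nn.Parameter(torch.zeros(1, block_size, n_embd))  # learned position vectors
        self.blocks = blocks                                             # e.g. 8 Transformer Blocks
        self.ln_f = nn.LayerNorm(n_embd)
        self.head = nn.Linear(n_embd, vocab_size, bias=False)            # decoder head: features -> logits

    def forward(self, idx):
        b, t = idx.shape
        x = self.tok_emb(idx) + self.pos_emb[:, :t, :]  # (b, t, n_embd)
        x = self.blocks(x)
        return self.head(self.ln_f(x))                  # (b, t, vocab_size)

# Identity layers stand in for the 8 Transformer Blocks.
model = GPTSkeleton(vocab_size=65, block_size=128, n_embd=32,
                    blocks=nn.Sequential(*[nn.Identity() for _ in range(8)]))
idx = torch.randint(0, 65, (4, 16))
logits = model(idx)
print(logits.shape)  # torch.Size([4, 16, 65])
```

The decoder head produces one score per vocabulary entry at every position, which is what the loss and the sampling code consume.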

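Each Transformer Block normalizes its input before passing it to the CausalSelfAttention layer, and normalizes again before the MLP layers. The output of each of these two stages is added back to its input through a residual connection.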
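A minimal PyTorch sketch of such a block is shown below. It follows the pre-norm layout described above (LayerNorm, then attention or MLP, then a residual add). The class and attribute names are illustrative assumptions rather than minGPT's exact code, and the dropout layers a real implementation would include are left out. The causal mask is what makes the attention "causal": position *i* can only attend to positions up to and including *i*.

```python
import math
import torch
import torch.nn as nn
import torch.nn.functional as F

class CausalSelfAttention(nn.Module):
    def __init__(self, n_embd, n_head, block_size):
        super().__init__()
        assert n_embd % n_head == 0
        self.n_head = n_head
        self.qkv = nn.Linear(n_embd, 3 * n_embd)  # query, key and value projections in one layer
        self.proj = nn.Linear(n_embd, n_embd)
        # Lower-triangular mask: position i may only attend to positions <= i.
        mask = torch.tril(torch.ones(block_size, block_size))
        self.register_buffer("mask", mask.view(1, 1, block_size, block_size))

    def forward(self, x):
        b, t, c = x.shape
        hs = c // self.n_head
        q, k, v = self.qkv(x).split(c, dim=2)
        # Reshape each to (b, n_head, t, head_size).
        q = q.view(b, t, self.n_head, hs).transpose(1, 2)
        k = k.view(b, t, self.n_head, hs).transpose(1, 2)
        v = v.view(b, t, self.n_head, hs).transpose(1, 2)
        att = (q @ k.transpose(-2, -1)) / math.sqrt(hs)
        att = att.masked_fill(self.mask[:, :, :t, :t] == 0, float("-inf"))
        att = F.softmax(att, dim=-1)
        y = (att @ v).transpose(1, 2).contiguous().view(b, t, c)  # re-join the heads
        return self.proj(y)

class Block(nn.Module):
    def __init__(self, n_embd, n_head, block_size):
        super().__init__()
        self.ln1 = nn.LayerNorm(n_embd)
        self.attn = CausalSelfAttention(n_embd, n_head, block_size)
        self.ln2 = nn.LayerNorm(n_embd)
        self.mlp = nn.Sequential(
            nn.Linear(n_embd, 4 * n_embd),
            nn.GELU(),
            nn.Linear(4 * n_embd, n_embd),
        )

    def forward(self, x):
        x = x + self.attn(self.ln1(x))  # normalize, attend, add residual
        x = x + self.mlp(self.ln2(x))   # normalize, MLP, add residual
        return x

torch.manual_seed(0)
block = Block(n_embd=32, n_head=4, block_size=16)
x = torch.randn(2, 10, 32)
y = block(x)
print(y.shape)  # torch.Size([2, 10, 32])
```

A quick way to check the causal property is to change the last position of the input: the outputs for all earlier positions should stay the same.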

During training, the logits produced by the decoder head are passed, along with the targets, to a CrossEntropyLoss layer, which produces the overall loss.
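The loss step amounts to treating every position in every sequence as one classification problem over the vocabulary. The sketch below (with a hypothetical `lm_loss` helper) flattens the batch and time dimensions before calling PyTorch's `F.cross_entropy`. As a sanity check, uniform logits should give a loss equal to the natural log of the vocabulary size.

```python
import math
import torch
import torch.nn.functional as F

def lm_loss(logits, targets):
    # logits: (batch, time, vocab); targets: (batch, time) integer ids.
    b, t, v = logits.shape
    # Flatten so that every position becomes one classification example.
    return F.cross_entropy(logits.reshape(b * t, v), targets.reshape(b * t))

vocab = 65
logits = torch.zeros(4, 16, vocab)  # uniform prediction over the vocabulary
targets = torch.randint(0, vocab, (4, 16))
loss = lm_loss(logits, targets)
print(loss.item(), math.log(vocab))  # both about 4.174
```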

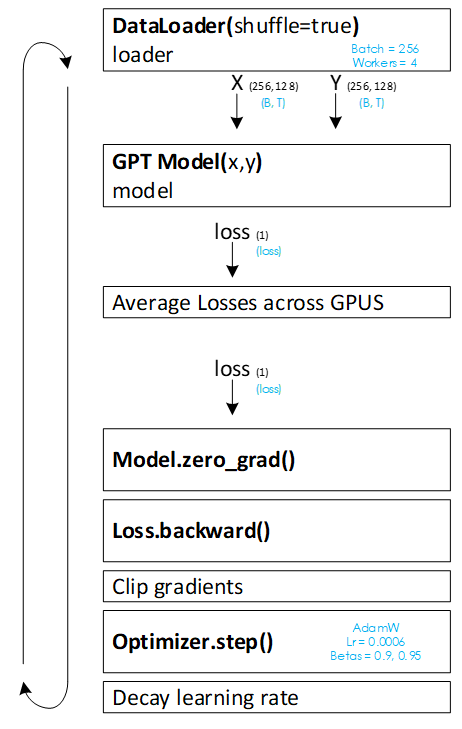

Each training cycle uses a custom DataLoader to load random chunks from the input text file. These chunks are fed into the GPT model, which produces the loss. Next, the model gradients are zeroed and the loss is back-propagated through the model. The gradients are then clipped, and the optimizer step applies them to the weights. Finally, the learning rate is decayed based on training progress.
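One training cycle can be sketched as below. This is a hedged illustration, not minGPT's trainer: `train_step` and `lr_multiplier` are hypothetical names, and the schedule (linear warmup followed by cosine decay to 10% of the base rate, measured in tokens processed) is an assumption modelled on the kind of token-based decay minGPT uses as we understand it. A tiny embedding-plus-linear model stands in for GPT.

```python
import math
import torch
import torch.nn as nn

def lr_multiplier(tokens_seen, warmup_tokens, final_tokens, floor=0.1):
    # Linear warmup, then cosine decay down to `floor` times the base rate.
    if tokens_seen < warmup_tokens:
        return tokens_seen / max(1, warmup_tokens)
    progress = (tokens_seen - warmup_tokens) / max(1, final_tokens - warmup_tokens)
    return max(floor, 0.5 * (1.0 + math.cos(math.pi * progress)))

def train_step(model, optimizer, x, y, base_lr, tokens_seen,
               warmup_tokens, final_tokens, grad_norm_clip=1.0):
    logits = model(x)
    loss = nn.functional.cross_entropy(logits.reshape(-1, logits.size(-1)), y.reshape(-1))
    optimizer.zero_grad()                                                # 1. zero the gradients
    loss.backward()                                                      # 2. back-propagate the loss
    torch.nn.utils.clip_grad_norm_(model.parameters(), grad_norm_clip)   # 3. clip the gradients
    optimizer.step()                                                     # 4. apply them to the weights
    tokens_seen += y.numel()
    lr = base_lr * lr_multiplier(tokens_seen, warmup_tokens, final_tokens)
    for group in optimizer.param_groups:                                 # 5. decay the learning rate
        group["lr"] = lr
    return loss.item(), tokens_seen, lr

torch.manual_seed(0)
model = nn.Sequential(nn.Embedding(10, 16), nn.Linear(16, 10))  # stand-in for GPT
opt = torch.optim.AdamW(model.parameters(), lr=3e-4)
x = torch.randint(0, 10, (4, 8))
y = torch.randint(0, 10, (4, 8))
tokens = 0
for _ in range(3):
    loss, tokens, lr = train_step(model, opt, x, y, 3e-4, tokens,
                                  warmup_tokens=64, final_tokens=1000)
print(loss, tokens, lr)
```

Scheduling by tokens rather than by steps keeps the schedule independent of the batch size.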

Other examples provided by Karpathy show how to use the same GPT model architecture to generate images from the CIFAR-10 dataset. This is done by converting a subset of pixels from each image into a stream of numbers, which is then fed into the GPT model in much the same way as in the character-based solution described above.
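One way to turn an image into such a stream is to quantize each pixel's colour to the nearest entry of a colour codebook and read the resulting indices in raster order. The sketch below assumes a tiny, hypothetical three-colour codebook; in practice the codebook would be much larger, for example one learned by k-means clustering of pixel colours. `image_to_tokens` and `tokens_to_image` are illustrative names. The token sequence can then be fed to the model exactly like the character indices above.

```python
import torch

def image_to_tokens(image, codebook):
    # image: (H, W, 3) float tensor; codebook: (K, 3) tensor of colour centroids.
    pixels = image.reshape(-1, 3)  # raster order, one row per pixel
    dists = ((pixels[:, None, :] - codebook[None, :, :]) ** 2).sum(dim=-1)  # (H*W, K)
    return dists.argmin(dim=1)     # index of the nearest centroid for each pixel

def tokens_to_image(tokens, codebook, height, width):
    # Inverse mapping: replace each token with its centroid colour.
    return codebook[tokens].reshape(height, width, 3)

codebook = torch.tensor([[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [1.0, 0.0, 0.0]])  # black, white, red
image = torch.tensor([[[0.1, 0.0, 0.0], [0.9, 0.1, 0.1]],
                      [[0.95, 0.9, 1.0], [0.0, 0.05, 0.0]]])
tokens = image_to_tokens(image, codebook)
print(tokens.tolist())  # [0, 2, 1, 0]
```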

For a detailed walk-through of the data flow and the CausalSelfAttention layer, see the minGPT – How It Works presentation.

Happy Deep Learning!