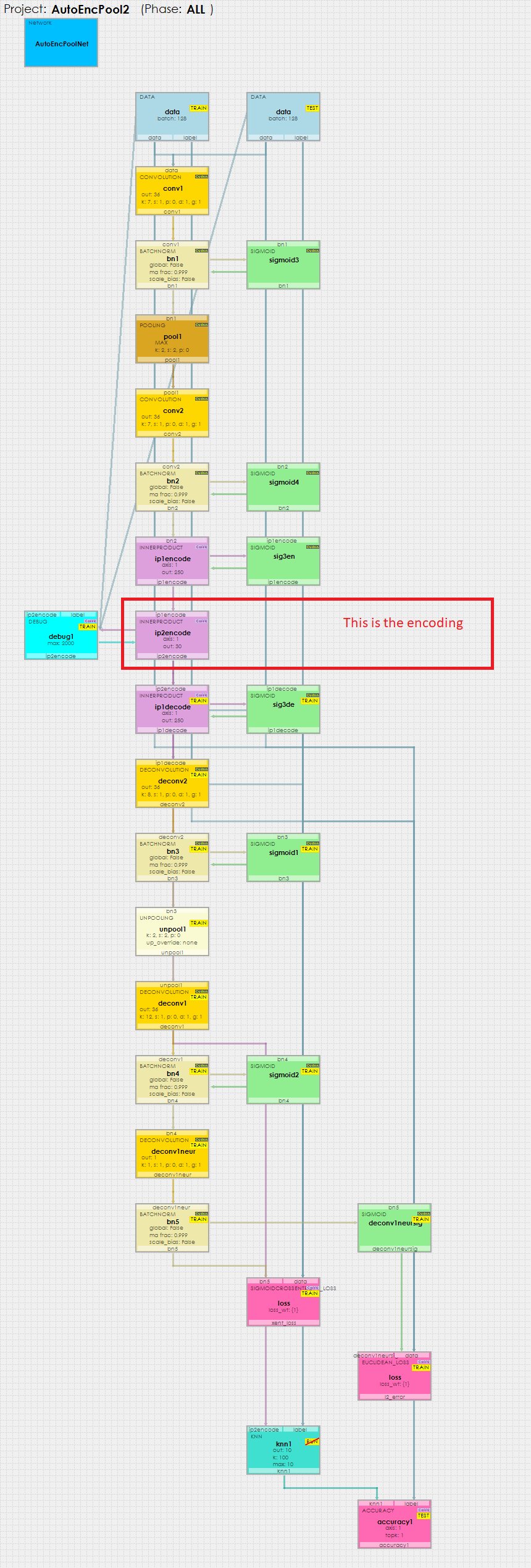

In our latest release, version 0.9.2.122, we now support deep convolutional auto-encoders with pooling, as described by [1], and do so with the newly released CUDA 9.2.148/cuDNN 7.1.4.
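The pooling/unpooling idea behind [1] can be illustrated with a small sketch. It assumes the common "switches" formulation: max pooling records which position in each window held the maximum, and unpooling writes each pooled value back to that position and fills the rest with zeros. The function names `max_pool_2x2` and `unpool_2x2` are hypothetical, and this is plain Python for illustration, not the MyCaffe or Caffe layer implementation.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Switches = List[List[Tuple[int, int]]]


def max_pool_2x2(x: Matrix) -> Tuple[Matrix, Switches]:
    """2x2 max pooling with stride 2 that also records each window's argmax ("switch")."""
    rows, cols = len(x), len(x[0])
    pooled: Matrix = []
    switches: Switches = []
    for r in range(0, rows - 1, 2):
        p_row, s_row = [], []
        for c in range(0, cols - 1, 2):
            # Collect (value, position) pairs for the 2x2 window.
            window = [(x[i][j], (i, j)) for i in (r, r + 1) for j in (c, c + 1)]
            value, pos = max(window, key=lambda t: t[0])
            p_row.append(value)
            s_row.append(pos)
        pooled.append(p_row)
        switches.append(s_row)
    return pooled, switches


def unpool_2x2(pooled: Matrix, switches: Switches, rows: int, cols: int) -> Matrix:
    """Place each pooled value back at its recorded position; all other cells are zero."""
    out: Matrix = [[0.0] * cols for _ in range(rows)]
    for p_row, s_row in zip(pooled, switches):
        for value, (i, j) in zip(p_row, s_row):
            out[i][j] = value
    return out


x = [[1, 3, 2, 0],
     [4, 2, 1, 5],
     [0, 1, 7, 2],
     [6, 2, 3, 1]]
pooled, switches = max_pool_2x2(x)
restored = unpool_2x2(pooled, switches, rows=4, cols=4)
print(pooled)
print(restored)
```

Keeping the switches is what lets the decoder put the strongest activations back where they came from, instead of spreading them evenly over each window.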

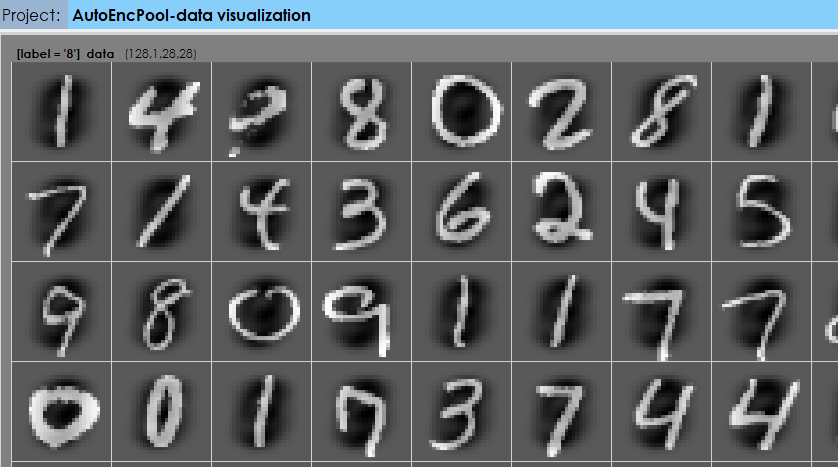

Auto-encoders are models that learn to re-create the input fed into them. In the example shown here, the MNIST dataset is fed into a Deep Convolutional Auto-Encoder model named AutoEncPool2.
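To make the idea concrete, here is a minimal sketch of an auto-encoder trained to re-create its input. It is deliberately simplified and is not AutoEncPool2: it assumes a single fully connected tanh encoder layer and a linear decoder (no convolution, pooling, or batch normalization), uses synthetic low-rank data in place of MNIST, and all names (`make_data`, `train_autoencoder`, `encode`) are hypothetical.

```python
import numpy as np


def make_data(n=200, d=16, latent=2, seed=0):
    """Hypothetical stand-in for MNIST: d-dimensional data that lives on a 2-D subspace."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=(n, latent))
    mix = rng.normal(size=(latent, d)) / np.sqrt(latent)
    return z @ mix


def train_autoencoder(x, code_size=4, lr=0.02, epochs=5000, seed=1):
    """Fit a tanh encoder + linear decoder with full-batch gradient descent on MSE."""
    rng = np.random.default_rng(seed)
    d = x.shape[1]
    params = {
        "w_enc": rng.normal(scale=0.1, size=(d, code_size)),
        "b_enc": np.zeros(code_size),
        "w_dec": rng.normal(scale=0.1, size=(code_size, d)),
        "b_dec": np.zeros(d),
    }
    losses = []
    for _ in range(epochs):
        code = np.tanh(x @ params["w_enc"] + params["b_enc"])  # encoder: d -> code_size
        recon = code @ params["w_dec"] + params["b_dec"]        # decoder: code_size -> d
        err = recon - x
        losses.append(float(np.mean(err ** 2)))
        # Backpropagate the mean squared reconstruction error.
        g_recon = 2.0 * err / err.size
        g_code = g_recon @ params["w_dec"].T
        g_pre = g_code * (1.0 - code ** 2)  # derivative of tanh
        grads = {
            "w_dec": code.T @ g_recon,
            "b_dec": g_recon.sum(axis=0),
            "w_enc": x.T @ g_pre,
            "b_enc": g_pre.sum(axis=0),
        }
        # Apply all updates only after every gradient has been computed.
        for name, g in grads.items():
            params[name] -= lr * g
    return params, losses


def encode(params, x):
    """Return the bottleneck code (the learned encoding) for each input row."""
    return np.tanh(x @ params["w_enc"] + params["b_enc"])


x = make_data()
params, losses = train_autoencoder(x)
codes = encode(params, x)
print(f"MSE {losses[0]:.3f} -> {losses[-1]:.3f}, code shape {codes.shape}")
```

The bottleneck (`code_size=4` here) plays the same role as the 30-element encoding in the model described in this post: the decoder can only reconstruct the input from what the encoder squeezes through it.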

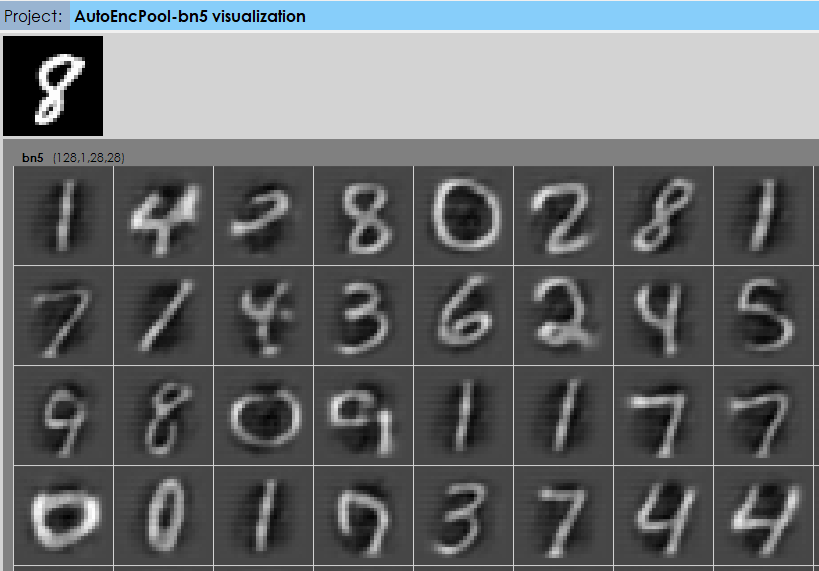

The AutoEncPool2 model learns to re-create the MNIST inputs, as shown below in a visualization of the output of bn5, the last BATCHNORM layer.

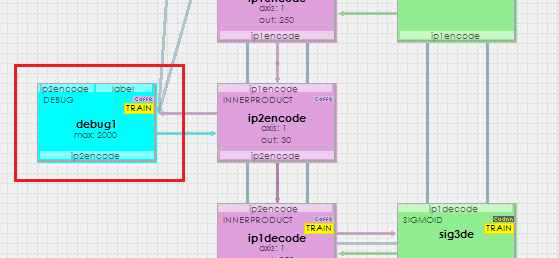

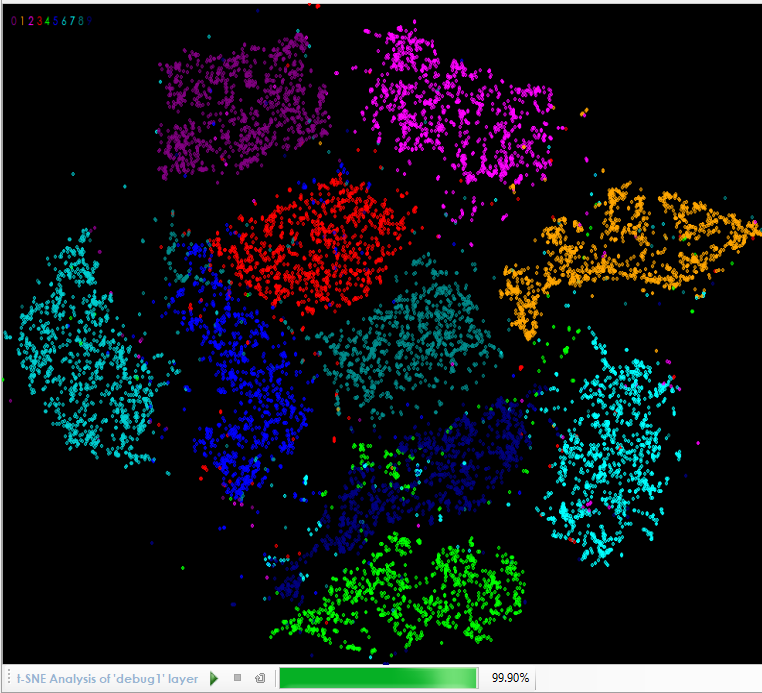

The key layer is ip2encode, which holds the 30-element encoding learned from the MNIST dataset. To view the encoding, inspect the DEBUG layer attached to it.

Inspecting the DEBUG layer produces a t-SNE analysis of the encoding that clearly shows a separate cluster for each class.

Auto-encoders such as this one can be helpful for pre-training models and for data (dimensionality) reduction tasks.

If you would like to try the Auto-Encoder model yourself, just follow the easy steps in the Auto-Encoder tutorial.

References

[1] Volodymyr Turchenko, Eric Chalmers, Artur Luczak, A Deep Convolutional Auto-Encoder with Pooling – Unpooling Layers in Caffe. arXiv, 2017.