In our latest release, version 0.10.2.38, we have added support for Siamese Nets as described by [1] and [2], all running on the newly released CUDA 10.2 and cuDNN 7.6.5!

A Siamese Net makes ‘One-Shot’ learning possible: an image that the network has never seen before is quickly matched with an already learned class, if such a match exists. Examples of Siamese Nets used for one-shot learning include image retrieval [3], content-based retrieval [4], and railway asset detection [5].

How Does This Work?

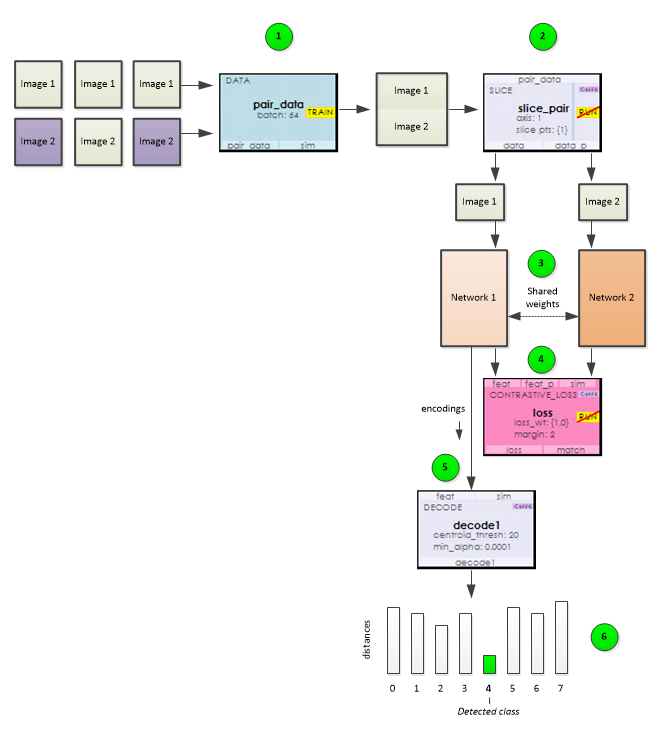

Siamese Nets use two parallel networks that share the same weights, which are learned from pairs of images. The following steps occur when training and then running a Siamese Net.

1.) The Data Layer feeds data into the net as image pairs, with the two images of each pair stacked one after the other along the data input channels. To keep training balanced, the pairs alternate between two images of the same class and two images of different classes.

2.) During training, the Slice Layer splits each pair loaded by the Data Layer and feeds each image into one of two parallel networks that share a single set of learnable weights.

3.) Each network produces an encoding for each image fed to it.

4.) These encodings are then sent to the Contrastive Loss layer, which calculates the loss from the distance between the two encodings. When the images are from the same class, the loss is the squared distance between their encodings; when they are from different classes, the loss is the squared difference between the margin and the distance (zero once the distance exceeds the margin). This pulls the encodings of same-class images toward one another and pushes the encodings of different-class images further apart.

5.) During training, a Decode Layer calculates and stores the centroid encoding for each class.

6.) When running the network, the Decode Layer uses its stored centroids to measure the distance between the input image’s encoding and each class’s centroid encoding. The input image matches the class whose centroid is closest.
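To make steps 1 and 2 concrete, here is a minimal NumPy sketch of how a balanced batch of stacked image pairs might be built and then sliced back apart. It is not the Data Layer or Slice Layer implementation: the helper names `make_pair_batch` and `slice_pair`, the (N, C, H, W) array layout, and the 1/0 similarity flag are assumptions made for illustration.

```python
import numpy as np

def make_pair_batch(images, labels, batch_size, rng):
    """Hypothetical helper: build a batch of image pairs stacked along channels.

    images: (N, C, H, W) array; labels: (N,) array of class ids.
    Even-indexed pairs are same-class, odd-indexed pairs are different-class,
    so the batch alternates between the two cases.
    Returns data of shape (batch_size, 2*C, H, W) and sim of shape (batch_size,)
    where 1 = same class and 0 = different classes.
    """
    classes = np.unique(labels)
    by_class = {c: np.flatnonzero(labels == c) for c in classes}
    data, sim = [], []
    for i in range(batch_size):
        c1 = rng.choice(classes)
        if i % 2 == 0:
            c2 = c1                                    # same-class pair
        else:
            c2 = rng.choice(classes[classes != c1])    # different-class pair
        a = images[rng.choice(by_class[c1])]
        b = images[rng.choice(by_class[c2])]
        data.append(np.concatenate([a, b], axis=0))    # stack along channels
        sim.append(1 if c1 == c2 else 0)
    return np.stack(data), np.array(sim)

def slice_pair(data):
    """Hypothetical Slice step: split the stacked channels back into two images."""
    c = data.shape[1] // 2
    return data[:, :c], data[:, c:]

# Usage with random stand-in "MNIST-like" data: 10 classes, 2 images each.
rng = np.random.default_rng(0)
images = rng.random((20, 1, 28, 28)).astype(np.float32)
labels = np.repeat(np.arange(10), 2)
data, sim = make_pair_batch(images, labels, batch_size=8, rng=rng)
left, right = slice_pair(data)
print(data.shape, left.shape, sim)
```

Each slice then goes to one of the two weight-sharing branches, and the `sim` flag is what the loss later uses to decide whether a pair should be pulled together or pushed apart.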

In summary, training a Siamese Net pulls the encodings of images from the same class together and pushes the encodings of different classes away from one another. When running the Siamese Net, the input image is converted into its encoding, which is then matched with the class whose centroid is closest. If the encoding is not ‘close’ to any class centroid, the image is treated as part of an unknown, or new, class.
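Steps 5 and 6, together with the unknown-class case, amount to a nearest-centroid classifier. The NumPy sketch below assumes Euclidean distance and a hypothetical `threshold` parameter for deciding when an encoding is not ‘close’ to any centroid; the Decode Layer’s actual distance measure and settings may differ, and `class_centroids` and `decode` are illustrative names only.

```python
import numpy as np

def class_centroids(encodings, labels):
    """Mean encoding per class (the role the Decode Layer plays during training).

    encodings: (N, D) array; labels: (N,) array of class ids.
    Returns (classes, centroids) with centroids of shape (num_classes, D).
    """
    classes = np.unique(labels)
    centroids = np.stack([encodings[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def decode(encoding, classes, centroids, threshold):
    """Return the class of the nearest centroid, or None if no centroid lies
    within `threshold` (treated as an unknown/new class).

    `threshold` is a hypothetical tuning parameter, not a documented setting.
    """
    dists = np.linalg.norm(centroids - encoding, axis=1)  # Euclidean (assumed)
    best = int(np.argmin(dists))
    if dists[best] > threshold:
        return None
    return classes[best]

# Usage: two well-separated classes in a 2-D encoding space.
enc = np.array([[0.0, 0.0], [0.2, 0.0], [5.0, 5.0], [5.2, 5.0]])
lab = np.array([3, 3, 7, 7])
classes, centroids = class_centroids(enc, lab)
print(decode(np.array([0.1, 0.1]), classes, centroids, threshold=1.0))    # 3
print(decode(np.array([20.0, -20.0]), classes, centroids, threshold=1.0)) # None
```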

Siamese Net to Learn MNIST

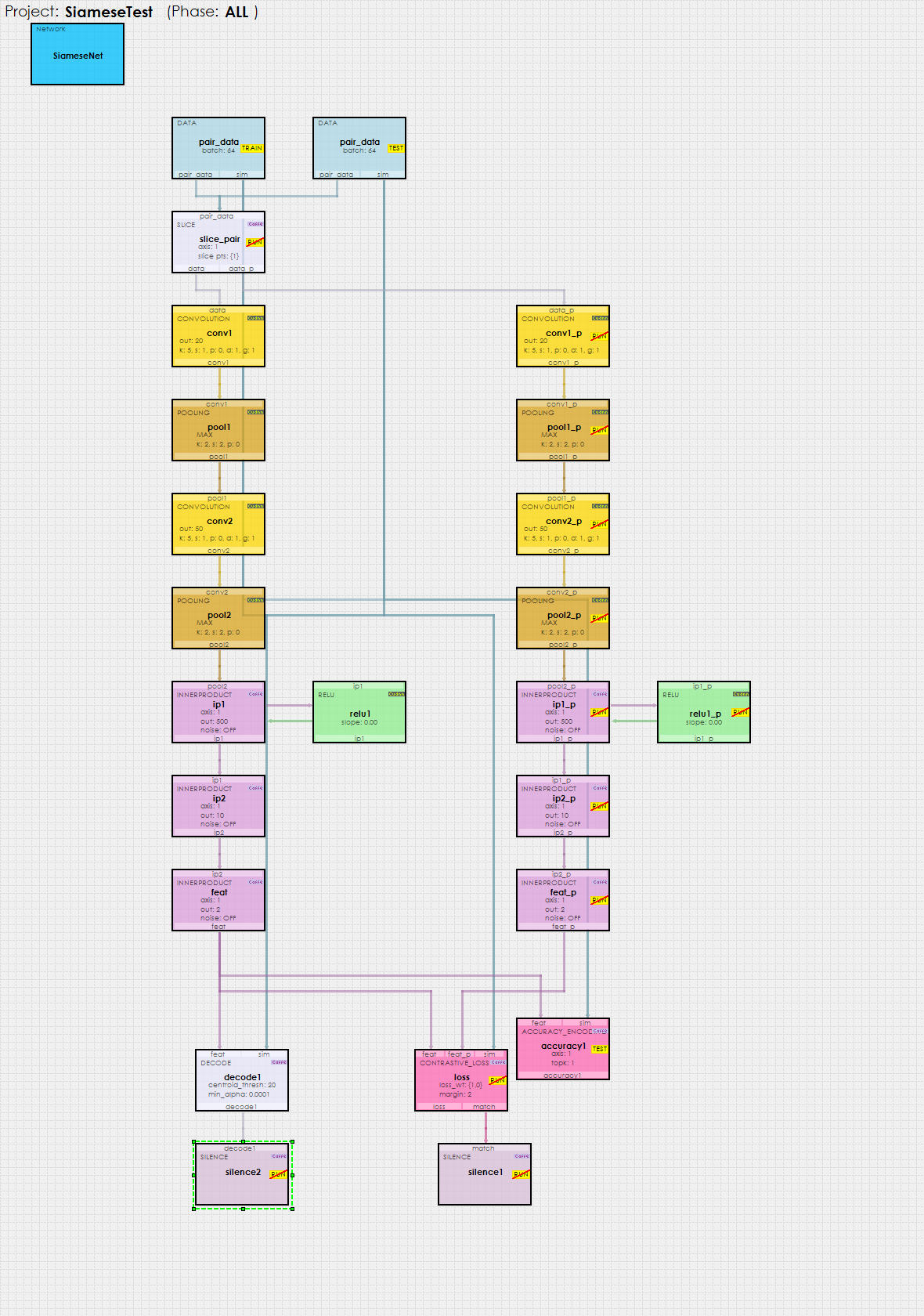

The Siamese Net shown above is used to learn the MNIST dataset.

As shown above, the model uses two parallel networks joined at the bottom by a Contrastive Loss layer. Pairs of images are fed into the top of the two networks, which produce the ‘feat’ and ‘feat_p’ encodings, one per image. These encoding pairs are then sent to the Contrastive Loss layer, where the learning begins.
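The data flow just described can be sketched in a few lines of NumPy: one encoder function with a single set of weights is applied to both images of each pair to produce `feat` and `feat_p`, and a contrastive loss compares them. The toy linear `encode` function is a hypothetical stand-in for the real convolutional branches, the 2-D encoding size is chosen only for illustration, and the loss follows the non-legacy formulation of BAIR Caffe’s ContrastiveLoss layer (default margin 1.0); treat it as an assumption rather than a description of this release’s layer.

```python
import numpy as np

def encode(x, W, b):
    """Toy encoder standing in for one branch of the net: a single linear layer.
    Both branches call it with the SAME W and b, i.e. the weights are shared."""
    return x.reshape(x.shape[0], -1) @ W + b

def contrastive_loss(feat, feat_p, sim, margin=1.0):
    """Contrastive loss (assumed form, as in Caffe's non-legacy ContrastiveLoss):
    similar pairs (sim=1) pay d^2, dissimilar pairs (sim=0) pay
    max(margin - d, 0)^2; the sum is divided by 2 * batch size."""
    d = np.linalg.norm(feat - feat_p, axis=1)            # Euclidean distance per pair
    per_pair = sim * d**2 + (1 - sim) * np.maximum(margin - d, 0.0) ** 2
    return per_pair.sum() / (2 * feat.shape[0])

# Usage: random 28x28 "images" and a shared 784 -> 2 linear encoder.
rng = np.random.default_rng(0)
W = rng.normal(scale=0.01, size=(28 * 28, 2))  # one set of weights...
b = np.zeros(2)
left = rng.random((4, 1, 28, 28))
right = rng.random((4, 1, 28, 28))
sim = np.array([1, 0, 1, 0])
feat, feat_p = encode(left, W, b), encode(right, W, b)  # ...used by both branches
print(contrastive_loss(feat, feat_p, sim))
```

Because both branches call `encode` with the same `W` and `b`, any update to those weights changes both branches at once, which is what sharing weights means here.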

New Features

The following new features have been added to this release.

- Added CUDA 10.2.89/cuDNN 7.6.5.32 support.

- Added label query hit percent and label query epoch information to projects.

- Added new AccuracyEncoding layer for Siamese Nets.

- Added new Decode layer for Siamese Nets.

- Added multiple-image support to the Data Layer for Siamese Nets.

- Added new layer weight map visualization.

Bug Fixes

The following bugs have been fixed in this release.

- Fixed bug related to VOC0712 Dataset Creator.

- Fixed bug related to data overlaps occurring within the Data Layer.

- Optimized cuDNN handle usage.

- Optimized pinned memory usage.

To try out this model and train it yourself, just check out our Tutorials for easy step-by-step instructions that will get you started quickly! For cool example videos, including an ATARI Pong video and a Cart-Pole balancing video, check out our Examples page.

[1] Berkeley Artificial Intelligence Research (BAIR), Siamese Network Training with Caffe.

[2] G. Koch, R. Zemel and R. Salakhutdinov, Siamese Neural Networks for One-shot Image Recognition, ICML 2015 Deep Learning Workshop, 2015.

[3] K. L. Wiggers, A. S. Britto, L. Heutte, A. L. Koerich and L. S. Oliveira, Image Retrieval and Pattern Spotting using Siamese Neural Network, arXiv:1906.09513, 2019.

[4] Y.-A. Chung and W.-H. Weng, Learning Deep Representations of Medical Images using Siamese CNNs with Application to Content-Based Image Retrieval, arXiv:1711.08490, 2017.

[5] D. J. Rao, S. Mittal and S. Ritika, Siamese Neural Networks for One-shot detection of Railway Track Switches, arXiv:1712.08036, 2017.