In our latest release, version 0.10.2.124, we have added support for K-Nearest Neighbor (KNN) to the Siamese Net as described by [1] and [2].

KNN provides the Siamese Net with another way to perform ‘One-Shot’ learning on difficult datasets that include images the network has never seen before.

How Does This Work?

Most of the steps are very similar to what was discussed in the last blog entry on the Siamese Net. However, when using KNN, the Decode Layer builds a cache in GPU memory of encodings received for each label.

During training, the encoding cache is filled. During testing and running, the cache is then used to find the label whose cached encodings are the ‘nearest neighbors’ (i.e. have the shortest distances) to the encoding of the data we seek to label.

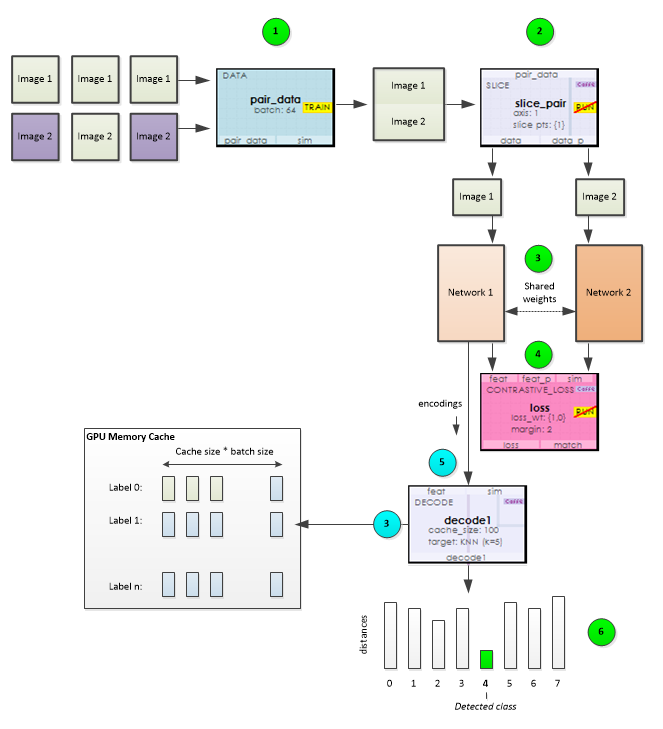

The following steps take place within the KNN based Siamese Net:

1.) During training, the Data Layer is fed a pair of images, with the two images stacked one after the other along the channel dimension.

2.) Internally, the model splits the data into two separate data streams using the Slice Layer. Each data stream is then fed into one of the two parallel nets making up the Siamese Net.

3.) Within the first network, the Decode Layer caches the encodings that it receives and stores the encodings by label. The encoding cache is stored within GPU memory and persisted along with the model weights so that the cache can be used later when the net is run.

4.) As with the CENTROID based Siamese Net, the encodings are sent to the Contrastive Loss layer which calculates the loss from the distance between the two encodings received from each of the two parallel nets.

5.) When running the net, the Decode Layer compares its input encoding (the one we seek to find a label for) with each of the encodings within the label cache. The distance to each cached encoding is calculated, and the distances for each label are sorted with the smallest distance first. The k smallest distances for each label are then averaged.

6.) The per-label distance averages are returned, and the label with the smallest average distance is taken as the detected label for the data.
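The steps above can be sketched in miniature with plain Python. This is only an illustration of the idea, not the MyCaffe implementation: the names `split_pair`, `contrastive_loss`, and `EncodingCache` are hypothetical, the cache is an ordinary dictionary rather than GPU memory, the distance is assumed to be Euclidean, and the loss uses the commonly cited contrastive loss form with an assumed `margin` parameter.

```python
import math
from collections import defaultdict


def split_pair(stacked, channels_per_image):
    """Steps 1-2: slice a channel-stacked pair into its two images."""
    return stacked[:channels_per_image], stacked[channels_per_image:]


def euclidean(a, b):
    """Distance between two encodings (assumed Euclidean for this sketch)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(enc_a, enc_b, same_label, margin=1.0):
    """Step 4: loss for one pair, computed from the distance between encodings.

    Assumed form: matching pairs are pulled together, non-matching pairs
    are pushed apart until they are at least `margin` away from each other.
    """
    d = euclidean(enc_a, enc_b)
    if same_label:
        return 0.5 * d * d
    return 0.5 * max(margin - d, 0.0) ** 2


class EncodingCache:
    """Step 3: encodings cached by label (a dict here, for illustration)."""

    def __init__(self):
        self._by_label = defaultdict(list)

    def add(self, label, encoding):
        self._by_label[label].append(list(encoding))

    def label_averages(self, encoding, k):
        """Step 5: average the k smallest distances for each label."""
        averages = {}
        for label, cached in self._by_label.items():
            nearest = sorted(euclidean(encoding, c) for c in cached)[:k]
            averages[label] = sum(nearest) / len(nearest)
        return averages

    def decode(self, encoding, k=3):
        """Step 6: return the detected label and the per-label averages."""
        averages = self.label_averages(encoding, k)
        return min(averages, key=averages.get), averages


# Fill the cache as training would, then decode an unseen encoding.
cache = EncodingCache()
cache.add(0, [0.0, 0.0])
cache.add(0, [0.0, 1.0])
cache.add(1, [5.0, 5.0])
cache.add(1, [6.0, 5.0])
label, averages = cache.decode([0.0, 0.5], k=2)
```

Averaging the k smallest distances per label, rather than taking only the single nearest encoding, makes the decision less sensitive to one outlying cached encoding.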

Programming MyCaffe in Python

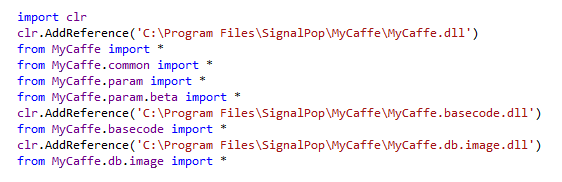

Using pythonnet makes programming MyCaffe in Python extremely easy and gives you access to virtually all aspects of MyCaffe. To get started, just install pythonnet by running the following command from within your 64-bit Python environment.

    pip install pythonnet

Once installed, each MyCaffe namespace is accessed by adding a reference to it through the clr module. For example, the following references are used by the One-Shot Python Sample on GitHub.

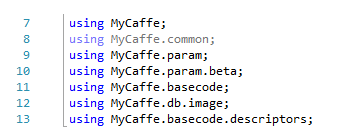

These Python references are equivalent to the following references used in the C# sample.

After referencing the MyCaffe DLLs, each object within the referenced DLLs is just about as easy to use in Python as it is in C#! For more discussion on using Python to program MyCaffe, see the section on Training and Testing with Python in the MyCaffe Programming Guide.
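As a rough sketch of the referencing step, the helper below builds full DLL paths and hands them to pythonnet's `clr.AddReference`. The install folder and the assembly names are assumptions made for illustration, as are the helper names `assembly_paths` and `add_references`; check your own MyCaffe installation and the sample on GitHub for the actual list.

```python
import os

# Assumed values for illustration only; adjust to your installation.
ASSUMED_INSTALL_DIR = r"C:\Program Files\SignalPop\MyCaffe"
ASSUMED_ASSEMBLIES = ["MyCaffe", "MyCaffe.basecode"]


def assembly_paths(install_dir, names):
    """Build the full path of each assembly DLL inside the install folder."""
    return [os.path.join(install_dir, name + ".dll") for name in names]


def add_references(paths):
    """Reference every DLL that exists on disk; return the paths referenced."""
    existing = [p for p in paths if os.path.exists(p)]
    if not existing:
        return []
    import clr  # installed by `pip install pythonnet`
    for path in existing:
        clr.AddReference(path)
    return existing


referenced = add_references(assembly_paths(ASSUMED_INSTALL_DIR, ASSUMED_ASSEMBLIES))
```

Once an assembly has been referenced this way, pythonnet lets its namespaces be imported with ordinary Python `import` statements.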

For an example on programming the MyCaffeControl with C# to learn the MNIST dataset using a Siamese Net with KNN, see the C# Siamese Net Sample on GitHub.

For an example on programming the MyCaffeControl with Python to learn the MNIST dataset using a Siamese Net with KNN, see the Python Siamese Net Sample on GitHub.

New Features

The following new features have been added to this release.

- Added MyCaffe Image Database version 2 support with faster background loading and new query state support.

- Added VerifyCompute to verify that the current device matches the compute requirement of the currently used CudaDnnDll.

- Added multi-label support to Debug Layer inspection.

- Added boost query hit percentage support to project properties.

- Added new KNN support to the Decode layer for use with Siamese Nets.

- Added new AccuracyDecode layer for use with Siamese Nets.

- Added boost persistence support for saving and loading image boost settings.

Bug Fixes

The following bugs have been fixed in this release.

- Project results are now properly displayed after importing into a new project.

- Project results are now deletable after closing a project.

- Data loading optimized for faster image loading.

- Fixed bugs in MyCaffe Image Database related to superboost probability and label balancing.

To try out this model and train it yourself, just check out our Tutorials for easy step-by-step instructions that will get you started quickly! For cool example videos, including an ATARI Pong video and Cart-Pole balancing video, check out our Examples page.

[1] Berkeley Artificial Intelligence (BAIR), Siamese Network Training with Caffe.

[2] G. Koch, R. Zemel and R. Salakhutdinov, Siamese Neural Networks for One-shot Image Recognition, ICML 2015 Deep Learning Workshop, 2015.