In our latest release, version 0.11.4.60, we have improved and expanded our support for Sequence-to-Sequence[1] (Seq2Seq) models with Attention[2][3], all built on the newly released CUDA 11.4.2/cuDNN 8.2.4 from NVIDIA.

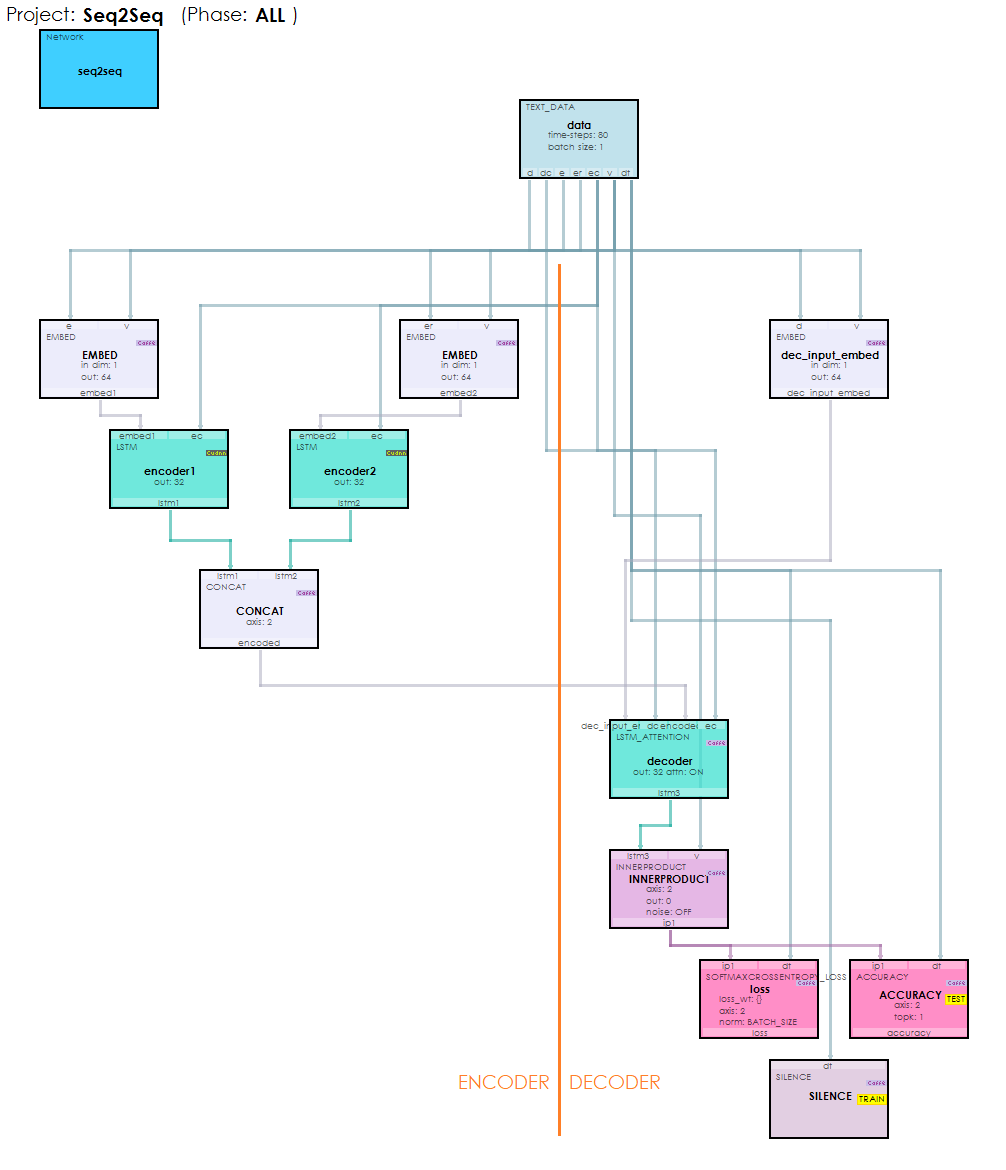

With its drag-and-drop visual editor, the SignalPop AI Designer now directly supports building Seq2Seq models like the one shown above. A new TEXT_DATA input layer manages text input and feeds the data sequences to the model during training and testing, creating a powerful visual environment for Seq2Seq!
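To make the idea of feeding text sequences to a Seq2Seq model more concrete, here is a minimal Python sketch of how question/response pairs might be turned into fixed-length integer index sequences for the encoder and decoder. It assumes a simple whitespace tokenizer, hypothetical special tokens (`<pad>`, `<bos>`, `<eos>`, `<unk>`) and a fixed maximum length; it illustrates the general technique and is not the TEXT_DATA layer's actual implementation.

```python
# Hypothetical special tokens; the TEXT_DATA layer's own conventions may differ.
PAD, BOS, EOS, UNK = "<pad>", "<bos>", "<eos>", "<unk>"

def build_vocab(sentences):
    """Map each distinct lowercase word to an integer index, after the special tokens."""
    vocab = {PAD: 0, BOS: 1, EOS: 2, UNK: 3}
    for sentence in sentences:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def encode(sentence, vocab, max_len, add_bos=False, add_eos=True):
    """Convert a sentence to a list of exactly max_len indices, padded with <pad>."""
    ids = [vocab.get(w, vocab[UNK]) for w in sentence.lower().split()]
    if add_bos:
        ids = [vocab[BOS]] + ids
    if add_eos:
        # Truncate to leave room for the <eos> marker.
        ids = ids[: max_len - 1] + [vocab[EOS]]
    else:
        ids = ids[:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))

# Hypothetical question/response pairs for a chat-bot.
pairs = [("hello there", "hi how are you"), ("how are you", "i am fine")]
vocab = build_vocab([q for q, _ in pairs] + [r for _, r in pairs])

question, response = pairs[0]
encoder_input = encode(question, vocab, max_len=6)
decoder_input = encode(response, vocab, max_len=6, add_bos=True, add_eos=False)
decoder_target = encode(response, vocab, max_len=6)
```

Here the decoder target is the decoder input shifted one step ahead, the usual teacher-forcing setup for training; whether the sample uses exactly this convention is an assumption.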

With this release, we have also published a new Seq2Seq sample that builds on and improves the original Chat-bot sample posted with our last release.

Seq2SeqChatBot2 – in this sample, a Seq2Seq encoder/decoder model with attention learns the question/response patterns of a chat-bot so that the user can hold a conversation with it. The model learns embeddings for the input data, encodes them with two LSTM layers, and then feeds the encoded result into the LSTMAttention layer along with the encoded decoder input to produce the output sequence.
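The attention step can be illustrated with a minimal pure-Python sketch of dot-product attention: each encoder output is scored against the current decoder state, the scores are normalized with a softmax, and the weighted sum of the encoder outputs forms a context vector. This sketch assumes dot-product scoring for simplicity; the LSTMAttention layer may use a different scoring function (for example, an additive score with learned weights), and the function names here are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(encoder_states, decoder_state):
    """Dot-product attention: returns (attention weights, context vector)."""
    # Score each encoder time step by its dot product with the decoder state.
    scores = [sum(e * d for e, d in zip(state, decoder_state)) for state in encoder_states]
    weights = softmax(scores)
    # Context vector = attention-weighted sum of the encoder states.
    dim = len(decoder_state)
    context = [sum(w * state[i] for w, state in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context

# Toy example: 3 encoder time steps with hidden size 2.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, context = attend(encoder_states, decoder_state)
```

The first and third encoder steps align best with the decoder state, so they receive the largest weights. In a full decoder, the context vector would typically be combined with the decoder state before the output projection at each time step.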

To try out the Seq2Seq model yourself, check out the new Seq2Seq Chat-bot Tutorial.

New Features

The following new features have been added to this release.

- CUDA 11.4.2.471/cuDNN 8.2.4.15/nvapi 470/driver 471.96

- Windows 21H1, OS Build 19043.1202, SDK 10.0.19041.0

- Added new TextData Layer support.

- Added new Seq2Seq model support.

- Added Seq2Seq model templates.

- Added support for MODEL dataset type.

- Enhanced weight visualization to show all weights.

- Added layer inspection for EMBED layers.

- Added layer inspection for LSTM layers.

- Added layer inspection for LSTM_ATTENTION layers.

- Added layer inspection for INNERPRODUCT layers.

- Added dataset coverage analysis visualization.

- Added optional load method for project exports.

Bug Fixes

- Fixed bug in debug layer where data items were less than seed.

- Fixed bug in error handling on Results window.

- Fixed bug in network visualization blob title alignments.

- Fixed bug in IMPORT.VID where image creation would stall.

- Fixed bug that occurred when loading the Getting Started document.

- Fixed bug in TestMany where one class was always triggered.

- Fixed bug in CreateResults where classes were not correct.

- Fixed bug in TestMany when used with MULTIBOX types.

Known Issues

The following are known issues in this release.

- Exporting a project to ONNX and then re-importing it has a known issue. As a workaround, the weights of an ONNX model can be imported directly into a similar model.

- Loading and saving LSTMAttention models using the MyCaffe weights has known issues. As a workaround, the learnable_blobs of the model can be loaded and saved directly.

Happy deep learning with attention!

[1] Ilya Sutskever, Oriol Vinyals, and Quoc V. Le, Sequence to Sequence Learning with Neural Networks, 2014, arXiv:1409.3215.

[2] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin, Attention Is All You Need, 2017, arXiv:1706.03762.

[3] Jay Alammar, The Illustrated Transformer, 2017-2020, Jay Alammar Blog.