In our latest release, version 0.11.0.188, we now support distributed AI via the SignalPop Universal Miner, and do so with the recent CUDA 11.0.3 and cuDNN 8.0.3 release!

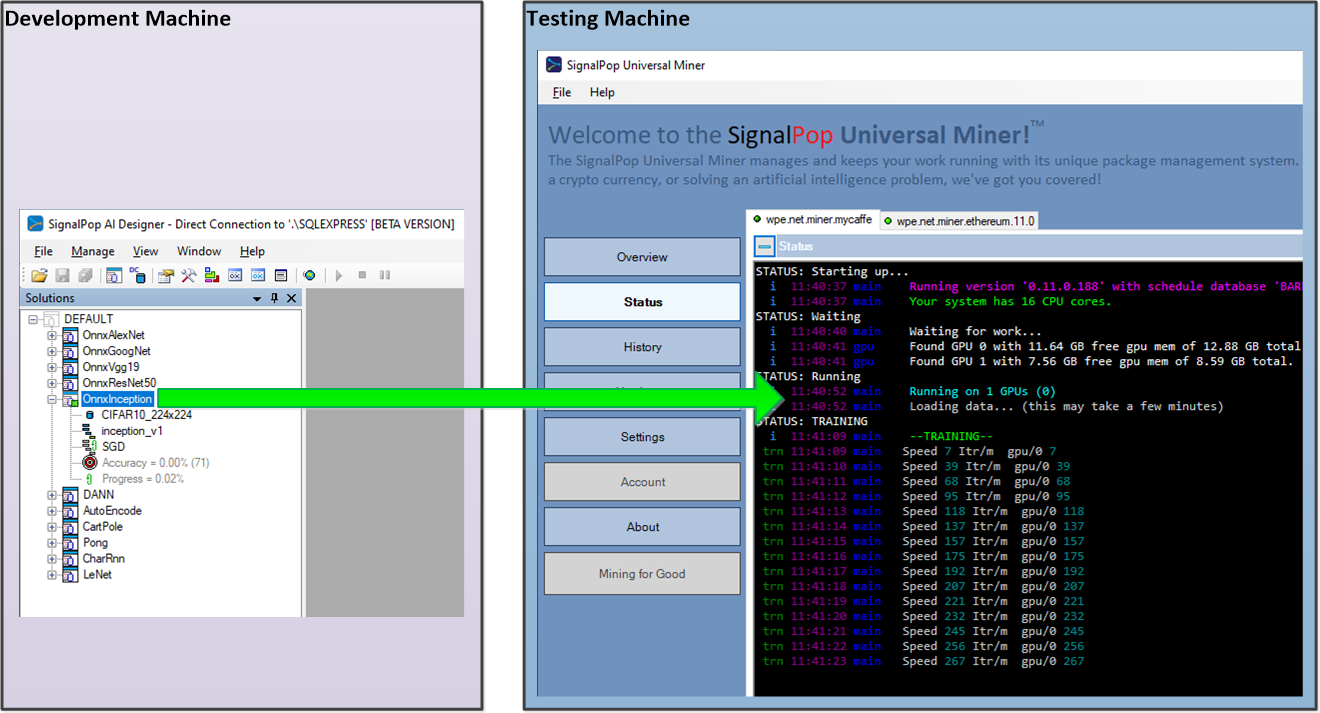

The SignalPop AI Designer now allows scheduling AI projects which are then loaded and trained by a separate instance of the SignalPop Universal Miner running on a remote machine.

With this configuration, you can develop your AI projects on your development machine and then train those same projects on a remote testing machine, freeing up your development machine for more development work.

How does this work?

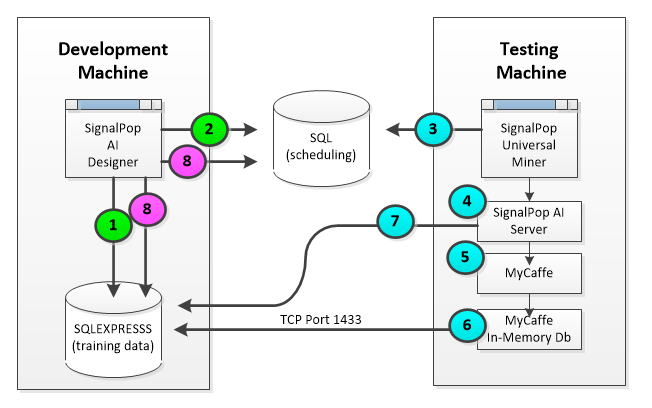

When scheduling a project, the project is placed into the scheduling database where it is later picked up by the SignalPop Universal Miner for training. During training, the SignalPop Universal Miner uses the same underlying SignalPop AI Server software to train the model using the MyCaffe AI Platform and MyCaffe In-Memory Database. Upon completion, the trained weights are placed back in the scheduling database allowing the user on the development machine to copy the results back into their project.

The following steps occur when running a distributed AI solution.

- First, the designer uses the SignalPop AI Designer on the Development Machine to create the dataset and work-package data (model and solver descriptors), which are stored on the local instance of Microsoft SQLEXPRESS running on the same machine as the SignalPop AI Designer application.

- Next, the designer uses the SignalPop AI Designer to schedule the project by adding a new work-package to the scheduling database. The work-package contains encrypted data describing the location of the dataset and work-package data to be used by the remote Testing Machine during training.

- On the Testing Machine, the SignalPop Universal Miner is assigned the scheduled work-package.

- Upon being assigned to the project, the SignalPop Universal Miner on the Testing Machine uses the SignalPop AI Server to load the work-package data and uses it to open and start training the project.

- During loading of the project, the SignalPop AI Server creates an instance of MyCaffe and loads the project into it.

- In addition, the SignalPop AI Server creates an instance of the MyCaffe In-Memory Database and sets its connection credentials to those specified within the scheduled work-package, thus allowing the in-memory database to access the training data residing on the designer’s Development Machine.

- After training of the model completes, the SignalPop Universal Miner running on the Testing Machine saves the weights and state back to the designer’s Development Machine and then marks the work-package as completed in the scheduling database.

- Back on the designer’s Development Machine, when the SignalPop AI Designer detects that the project is done, the project is displayed as completed with results. At this point the designer may copy the scheduled results from the work-package data into the project’s local results residing on the local SQLEXPRESS database used by the SignalPop AI Designer.
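The steps above follow a schedule, assign, train, complete pattern, which can be illustrated with a small sketch. This is not the SignalPop implementation: the table layout, the status values, and the function names (`schedule_project`, `assign_next`, `complete`, `fetch_results`) are all hypothetical, an in-memory SQLite database stands in for the scheduling database, and the weights are stored next to the work-package purely to keep the example short.

```python
import sqlite3

# Hypothetical schema: the real scheduling database layout is not described
# in this post. 'connection_info' stands in for the encrypted data that tells
# the miner where the dataset and work-package data live.
SCHEMA = """
CREATE TABLE work_packages (
    id INTEGER PRIMARY KEY,
    project TEXT NOT NULL,
    connection_info TEXT NOT NULL,
    status TEXT NOT NULL DEFAULT 'scheduled',
    assigned_to TEXT,
    weights BLOB
)
"""


def open_scheduling_db():
    """Create an in-memory stand-in for the scheduling database."""
    db = sqlite3.connect(":memory:")
    db.execute(SCHEMA)
    return db


def schedule_project(db, project, connection_info):
    """Designer side: add a new work-package and return its id."""
    cur = db.execute(
        "INSERT INTO work_packages (project, connection_info) VALUES (?, ?)",
        (project, connection_info),
    )
    db.commit()
    return cur.lastrowid


def assign_next(db, miner):
    """Miner side: claim the oldest scheduled work-package, if any."""
    row = db.execute(
        "SELECT id, project, connection_info FROM work_packages "
        "WHERE status = 'scheduled' ORDER BY id LIMIT 1"
    ).fetchone()
    if row is None:
        return None
    db.execute(
        "UPDATE work_packages SET status = 'training', assigned_to = ? "
        "WHERE id = ?",
        (miner, row[0]),
    )
    db.commit()
    return row


def complete(db, package_id, weights):
    """Miner side: store the trained weights and mark the package completed."""
    db.execute(
        "UPDATE work_packages SET status = 'completed', weights = ? "
        "WHERE id = ?",
        (weights, package_id),
    )
    db.commit()


def fetch_results(db, package_id):
    """Designer side: return the weights once the package is completed."""
    row = db.execute(
        "SELECT status, weights FROM work_packages WHERE id = ?",
        (package_id,),
    ).fetchone()
    if row is None or row[0] != "completed":
        return None
    return row[1]
```

In this sketch the designer would call `schedule_project`, a miner process would poll `assign_next`, train, and call `complete`, and the designer would then poll `fetch_results` until it returns the weights. A real deployment would also need to guard the claim step against two miners picking up the same work-package at once.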

Since the SignalPop AI Designer and the SignalPop Universal Miner both use the same SignalPop AI Server for training AI projects, the results are the same as if the project were trained locally on the designer’s Development Machine.

To get started using distributed AI, see the ‘Scheduling Projects’ section of the SignalPop AI Designer Getting Started document.

New Features

The following new features have been added to this release.

- CUDA 11.0.3/cuDNN 8.0.3 support added.

- Added ability to schedule projects for distributed AI remote training.

- Added load limit refresh rate.

- Added load limit refresh percentage.

- Added easy switching between convolution default, convolution optimized for speed and convolution optimized for memory.

- Optimized convolution forward pass.

Bug Fixes

The following bugs have been fixed in this release.

- Fixed bugs related to visualizing net and model with LoadLimit > 0.

- Fixed bugs related to last TSNE image disappearing.

- Fixed bugs related to exporting a project while the project is open.

- Fixed bug caused when exiting while training Pong.

For other great examples, including beating ATARI Pong, check out our Examples page.